There is a lot of confusion online about running Stable Diffusion on AMD machines, and we ran into multiple errors ourselves. After reading and following multiple repositories and discussions on the web, we are sharing the problems we faced, along with a discussion of each error and the solution that fixed it.

Error: Torch is not able to use GPU

One day, while running the webui-user.bat file, we got the error “Torch is not able to use GPU”. After searching for this error, we found that the original repository relies on CUDA, which only works with NVIDIA GPUs, so it cannot use an AMD GPU out of the box.

1. So, to fix this, first move to the “stable-diffusion-webui” root folder or wherever you have installed Stable Diffusion WebUI.

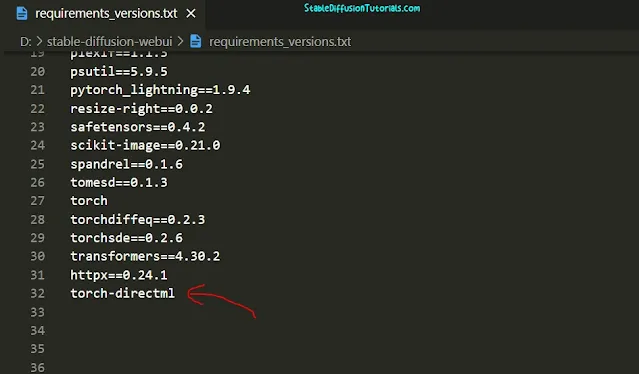

2. Here, we need to edit two files. Search for the file named “requirements_versions.txt”, right-click it and open it with any editor, such as Notepad or Notepad++. We are using VS Code here.

3. Move to the last line of the file and add the library name “torch-directml” on a new line. This makes sure the DirectML library gets installed.

4. Now just save the file.

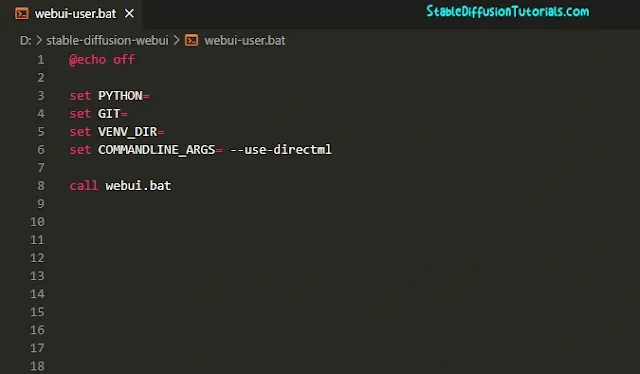
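If you would rather script steps 3 and 4, here is a minimal Python sketch that appends “torch-directml” to requirements_versions.txt only when it is not already listed. The helper name `ensure_requirement` is our own hypothetical name, and the sketch assumes the file uses one requirement per line.

```python
import re
from pathlib import Path

# Package name at the start of a requirement line, e.g. "torch" in "torch==2.0.1".
_NAME = re.compile(r"^\s*([A-Za-z0-9][A-Za-z0-9._-]*)")


def _normalize(name: str) -> str:
    # pip treats names case-insensitively and "-", "_" and "." as equivalent.
    return re.sub(r"[-_.]+", "-", name).lower()


def ensure_requirement(path: Path, package: str) -> bool:
    """Append `package` on its own line unless it is already listed.

    Returns True if the file was changed, False if the package was already there.
    """
    text = path.read_text(encoding="utf-8") if path.exists() else ""
    listed = set()
    for line in text.splitlines():
        if line.lstrip().startswith("#"):
            continue  # skip comments
        match = _NAME.match(line)
        if match:
            listed.add(_normalize(match.group(1)))
    if _normalize(package) in listed:
        return False
    # Make sure the new entry starts on a fresh line.
    if text and not text.endswith("\n"):
        text += "\n"
    path.write_text(text + package + "\n", encoding="utf-8")
    return True
```

Run it from the WebUI root folder, for example with `ensure_requirement(Path("requirements_versions.txt"), "torch-directml")`; calling it a second time leaves the file unchanged.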

5. Next, find the file named “webui-user.bat”, right-click it and open it with any editor. You will see the line “set COMMANDLINE_ARGS=”. Add the argument “--use-directml” after it and save the file. This instructs Stable Diffusion WebUI to use DirectML in the background.

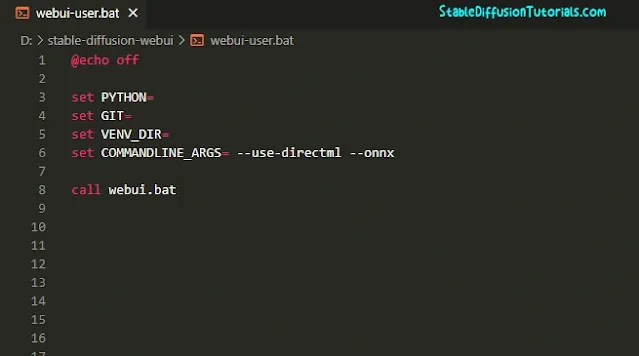

Optionally, if you want to leverage Microsoft Olive for optimization, use the arguments “--use-directml --onnx” after “set COMMANDLINE_ARGS=” instead.

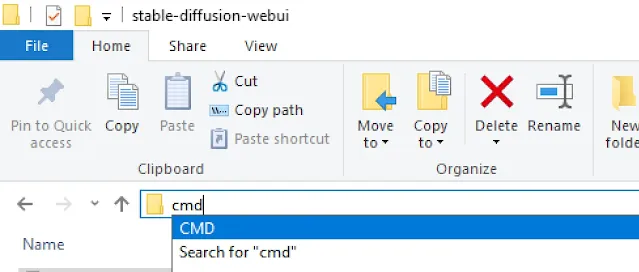
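The edit in step 5 can also be scripted. This is a minimal sketch, assuming your webui-user.bat contains a single `set COMMANDLINE_ARGS=` line; the function name `add_commandline_args` is hypothetical. It appends only the flags that are missing, so running it twice does not duplicate `--use-directml`.

```python
from pathlib import Path

PREFIX = "set COMMANDLINE_ARGS="


def add_commandline_args(bat_path: Path, flags: list[str]) -> str:
    """Append any missing flags to the `set COMMANDLINE_ARGS=` line and return that line."""
    # newline="" keeps the file's original (usually CRLF) line endings intact.
    with bat_path.open("r", encoding="utf-8", newline="") as f:
        lines = f.readlines()
    for i, line in enumerate(lines):
        stripped = line.strip()
        if stripped.lower().startswith(PREFIX.lower()):
            current = stripped[len(PREFIX):].split()
            for flag in flags:
                if flag not in current:
                    current.append(flag)
            new_line = PREFIX + " ".join(current)
            ending = line[len(line.rstrip("\r\n")):]  # reuse the original line ending
            lines[i] = new_line + ending
            with bat_path.open("w", encoding="utf-8", newline="") as f:
                f.writelines(lines)
            return new_line
    raise ValueError(f"no {PREFIX!r} line found in {bat_path}")
```

For example, `add_commandline_args(Path("webui-user.bat"), ["--use-directml"])`, or pass `["--use-directml", "--onnx"]` for the optional Olive setup.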

6. Go to the Stable Diffusion root folder, wherever it is installed. Click the address bar, type “cmd” and press Enter to open a command prompt in that folder.

7. Here you need to activate the virtual environment; “venv” is the name of the Python virtual environment folder. Type this command and press Enter:

venv\Scripts\activate

If you now see (venv) at the start of the new line, the environment has been activated.
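Besides the (venv) prompt, you can ask Python itself whether it is running inside a virtual environment. This sketch relies on standard `venv` behaviour: inside an environment, `sys.prefix` points to the environment folder while `sys.base_prefix` still points to the base Python installation. The file name is up to you, for example a hypothetical `check_venv.py` run with `python check_venv.py` after activation.

```python
import sys


def in_virtualenv() -> bool:
    """Return True when this interpreter is running inside a venv environment."""
    # venv changes sys.prefix to the environment folder but leaves
    # sys.base_prefix pointing at the base Python installation.
    return sys.prefix != sys.base_prefix


if __name__ == "__main__":
    print("inside venv:", in_virtualenv(), "| prefix:", sys.prefix)
```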

8. Next, type this command to upgrade pip:

python.exe -m pip install --upgrade pip

Once pip is upgraded, you will see a success message.

9. Now install version 0.24.1 of the httpx library with this command:

pip install httpx==0.24.1

10. Finally, install all the prerequisites from the requirements.txt file:

pip install -r requirements.txt
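To confirm that the installed packages match pinned versions such as httpx==0.24.1, a small check like the following can help. It is a sketch under our own assumptions: the helper `check_pins` is hypothetical, it only looks at lines of the form `name==version`, and it compares version strings literally rather than applying full PEP 440 rules. Run it with the venv's Python so it inspects the packages installed there.

```python
from __future__ import annotations

import re
from importlib.metadata import PackageNotFoundError, version
from pathlib import Path

# Matches "name==version" at the start of a line, e.g. "httpx==0.24.1".
_PIN = re.compile(r"^\s*([A-Za-z0-9][A-Za-z0-9._-]*)\s*==\s*([^\s;#]+)")


def check_pins(requirements: Path) -> dict[str, tuple[str, str | None]]:
    """Return {package: (pinned, installed)} for every pin the environment does not satisfy."""
    mismatches: dict[str, tuple[str, str | None]] = {}
    for line in requirements.read_text(encoding="utf-8").splitlines():
        match = _PIN.match(line)
        if not match:
            continue  # comments, blank lines and unpinned entries are skipped
        name, pinned = match.groups()
        try:
            installed = version(name)
        except PackageNotFoundError:
            installed = None
        # Plain string comparison: "2.0" and "2.0.0" would be reported as different.
        if installed != pinned:
            mismatches[name] = (pinned, installed)
    return mismatches
```

For example, `print(check_pins(Path("requirements_versions.txt")))`. An empty dictionary means every pin is satisfied; otherwise each entry shows the pinned and the installed version (None if the package is missing).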

11. Run this to launch the WebUI:

webui-user.bat

You can now run Stable Diffusion on your AMD machine again by copying the Local URL shown in the console into your browser.
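If the WebUI still reports that Torch cannot use the GPU, you can check from inside the activated venv whether torch-directml sees your AMD card. This sketch assumes the `device_count()` and `device_name()` functions exposed by the torch-directml package; the wrapper `directml_devices` is hypothetical.

```python
def directml_devices() -> list[str]:
    """List the DirectML adapters torch-directml can see (empty if it is not installed)."""
    try:
        import torch_directml  # provided by the torch-directml requirement
    except ImportError:
        return []
    return [torch_directml.device_name(i) for i in range(torch_directml.device_count())]


if __name__ == "__main__":
    devices = directml_devices()
    if devices:
        for index, name in enumerate(devices):
            print(f"DirectML device {index}: {name}")
    else:
        print("torch-directml is not installed or found no DirectML device")
```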

Error: AMD GPU not recognized by Stable-Diffusion-WebUI

If you are facing this error, it means the wrong Torch build is installed on your machine, and the fix is to install the ROCm build of PyTorch. The commands below are for Linux, where ROCm builds of PyTorch are available. Follow this procedure:

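1. First, go to the Stable Diffusion WebUI root folder wherever you have installed it. Then create and activate a virtual environment, uninstall the current Torch packages, and install the ROCm nightly build: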

python3 -m venv venv

source venv/bin/activate

pip uninstall torch torchvision torchaudio

pip install --pre torch torchvision torchaudio --index-url https://download.pytorch.org/whl/nightly/rocm5.7
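After reinstalling, you can verify that the ROCm build of PyTorch is the one in use. On ROCm builds `torch.version.hip` holds the HIP version (it is None on CPU and CUDA builds), and AMD GPUs are reached through the `torch.cuda` API. The function name `torch_rocm_status` is hypothetical. If your GPU needs the HSA_OVERRIDE_GFX_VERSION setting described in step 2, export it in the same shell before running the check.

```python
def torch_rocm_status() -> str:
    """Describe which PyTorch build is installed and whether it can reach a GPU."""
    try:
        import torch
    except ImportError:
        return "torch is not installed in this environment"
    hip_version = getattr(torch.version, "hip", None)  # a version string only on ROCm builds
    if hip_version is None:
        return f"torch {torch.__version__} is not a ROCm build"
    # ROCm builds expose AMD GPUs through the torch.cuda API.
    if torch.cuda.is_available():
        return f"torch {torch.__version__} (ROCm/HIP {hip_version}), GPU: {torch.cuda.get_device_name(0)}"
    return f"torch {torch.__version__} (ROCm/HIP {hip_version}), but no GPU is visible"


if __name__ == "__main__":
    print(torch_rocm_status())
```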

2. Create a file with the following content using Notepad, Notepad++ or any other text editor:

########COPY AND PASTE ##########

#!/bin/bash

source venv/bin/activate

export HSA_OVERRIDE_GFX_VERSION=10.3.0

export HIP_VISIBLE_DEVICES=0

export PYTORCH_HIP_ALLOC_CONF=garbage_collection_threshold:0.8,max_split_size_mb:512

python3 launch.py --enable-insecure-extension-access --opt-sdp-attention

#########################

Save it as “launch.sh” in the Stable Diffusion WebUI root folder.

Here, take care that “HSA_OVERRIDE_GFX_VERSION=10.3.0” is set to the AMD GFX version for your GPU. In our case we are using an RX 6800 (RDNA2 architecture), so we set it to 10.3.0. In your case it may be different, so look up the GFX version for your GPU on AMD's official pages.
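ROCm's `rocminfo` tool lists your GPU's target as a name like gfx1030 (the target of the RX 6800). The hypothetical helper below turns such a name into the dotted form HSA_OVERRIDE_GFX_VERSION expects, following the LLVM AMDGPU naming scheme: the major version, then one hexadecimal digit each for the minor version and the stepping. Treat the result as a starting point: if your exact target is not supported by your ROCm build, the override is commonly set to the nearest supported target instead.

```python
import re


def gfx_target_to_override(target: str) -> str:
    """Convert an AMDGPU target name such as 'gfx1030' into 'major.minor.stepping'.

    The last two characters are the minor version and stepping (one hex digit each);
    the digits between 'gfx' and those two characters form the major version.
    """
    match = re.fullmatch(r"gfx(\d+)([0-9a-f])([0-9a-f])", target.strip().lower())
    if not match:
        raise ValueError(f"not a gfx target name: {target!r}")
    major, minor, stepping = match.groups()
    return f"{int(major)}.{int(minor, 16)}.{int(stepping, 16)}"


if __name__ == "__main__":
    print(gfx_target_to_override("gfx1030"))  # 10.3.0
```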

3. Finally, open a terminal in the Stable Diffusion WebUI folder and run this command:

bash launch.sh

The first run will take some time while the necessary libraries are installed. After that, the problem should be fixed.

Conclusion:

If this does not fix your problem, you can ask your question directly on the official Stable Diffusion WebUI GitHub repository by opening an issue in the Issues section. We regularly solve our own real-life problems by posting clear, well-described queries there, and the community is very supportive.

You definitely need to check that out!