There are many ways to train a Stable Diffusion model, but training a LoRA model is far cheaper in GPU power and time than full training, and it spares you the nightmare of building a large data set from scratch. Best of all, fine-tuning this way needs only a small number of images.

Techniques such as LoRA and Dreambooth can also be run in the cloud on Google Colab, Kaggle and similar services, but that alternative comes with restrictions such as limited VRAM, limited storage and long run times.

With LoRA models you can generate images of your own subject in different poses, clothing and facial expressions, and in art styles such as anime, paint brush or cinematic.

Here, we will see how to train LoRA models using Kohya. Kohya (kohya_ss) is a GUI application built with the Gradio Python library and used for LoRA training.

Requirements:

1. Windows or Linux based OS

2. Nvidia RTX 3000/4000 series GPU

3. At least 12GB of VRAM (16GB or 24GB is best)

4. RAM 12GB or more
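If you want to sanity-check a machine against the list above, here is a minimal sketch. The function name `check_requirements` is our own, and it assumes you already know your VRAM and RAM figures (for example from Task Manager); it does not query the hardware itself.

```python
# Thresholds taken from the requirements list above.
MIN_VRAM_GB = 12
MIN_RAM_GB = 12


def check_requirements(vram_gb: float, ram_gb: float) -> list[str]:
    """Return a list of problems; an empty list means the machine qualifies."""
    problems = []
    if vram_gb < MIN_VRAM_GB:
        problems.append(f"VRAM {vram_gb} GB is below the {MIN_VRAM_GB} GB minimum")
    if ram_gb < MIN_RAM_GB:
        problems.append(f"RAM {ram_gb} GB is below the {MIN_RAM_GB} GB minimum")
    return problems


# Example: a card with 8 GB of VRAM fails the VRAM check only.
for problem in check_requirements(vram_gb=8, ram_gb=16):
    print(problem)
```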

Steps to Install Kohya for LoRA Training:

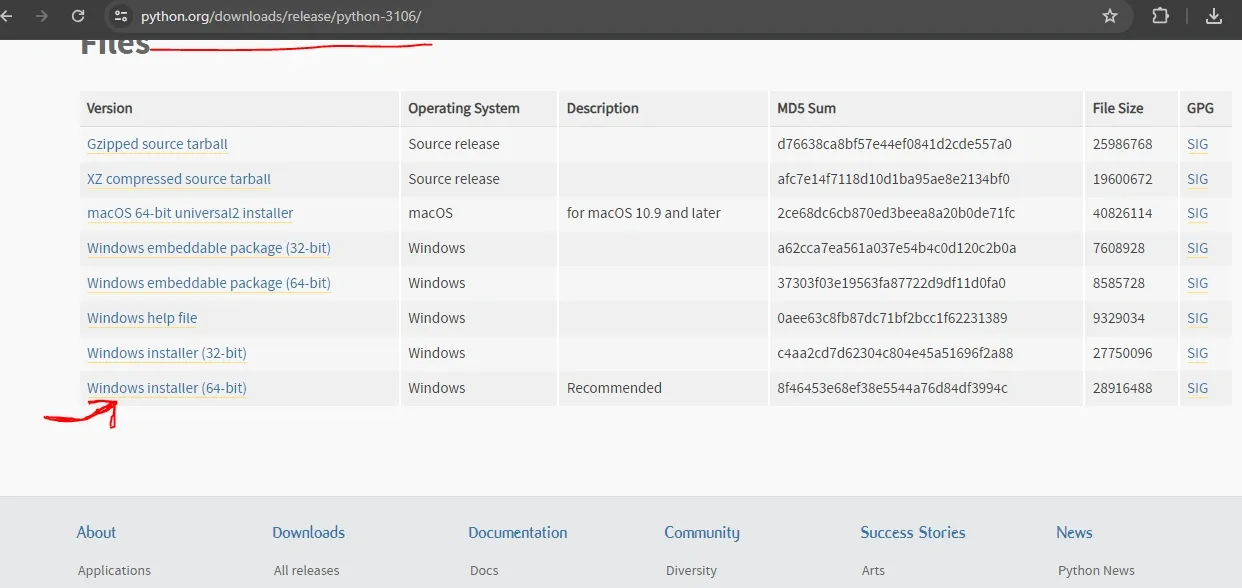

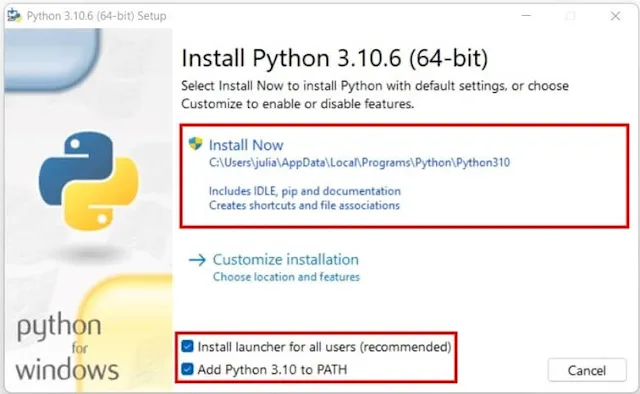

1. First install Python 3.10.6 (other versions are currently not supported). During installation, check the “Add to Path” box so that the environment variables are set up.

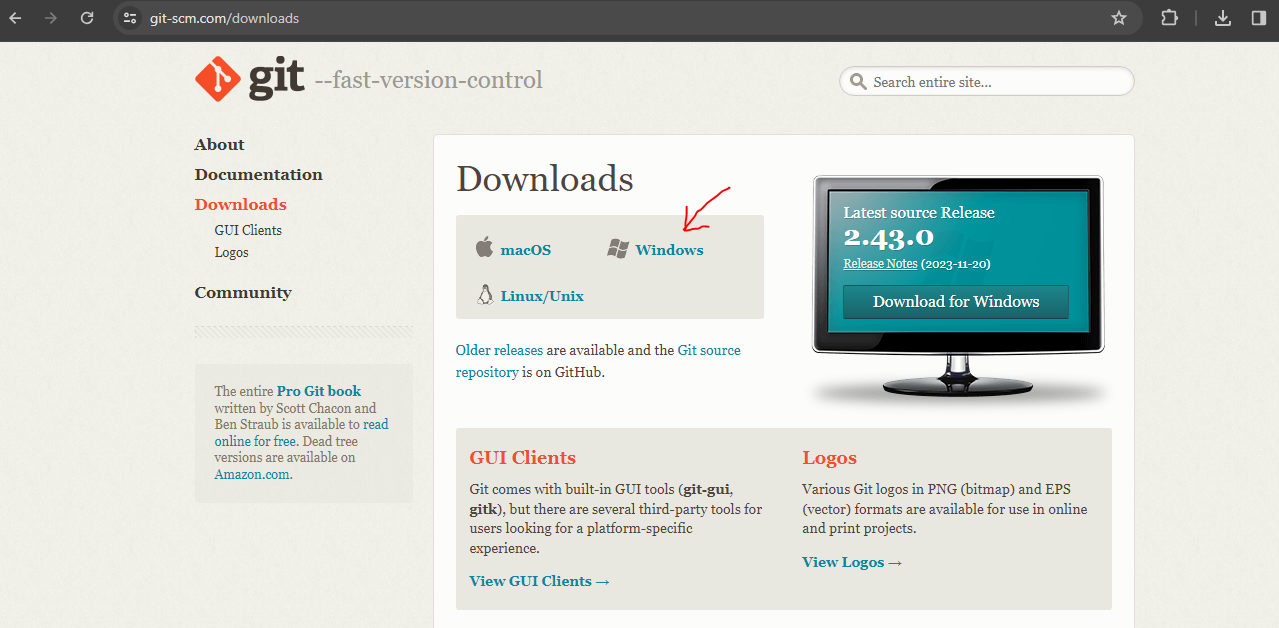

Next, install Git from its official page.

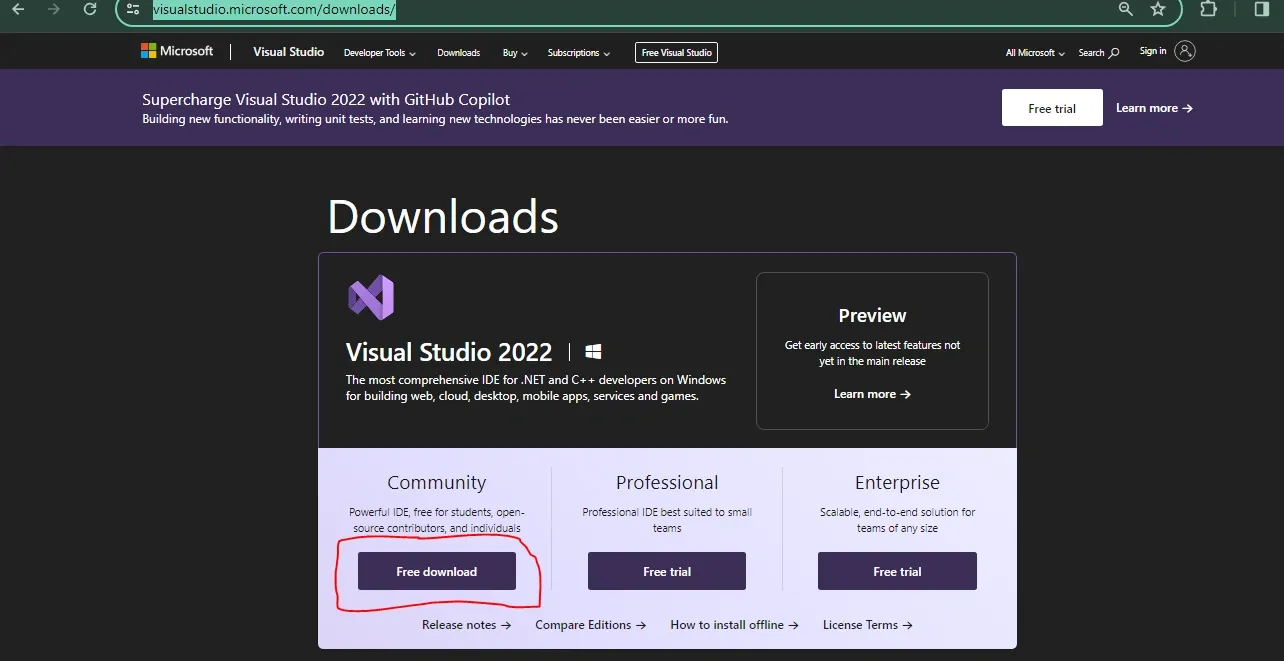

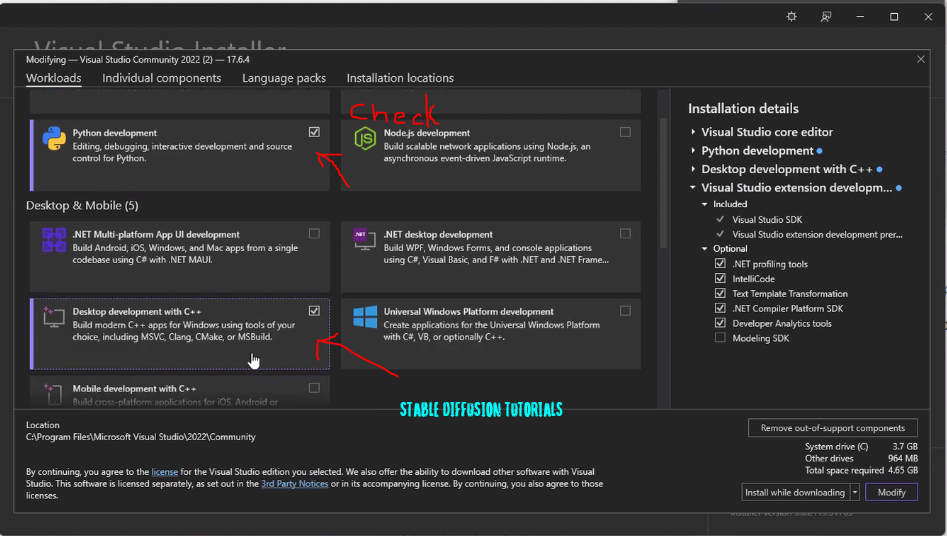

2. Now go to the official Visual Studio page, download and install it, and make sure to select the C++ package during installation.

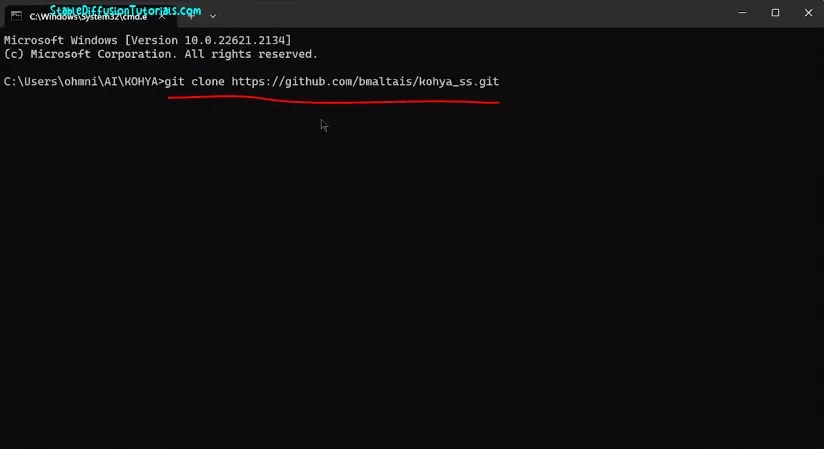

3. Next, get Kohya from its GitHub repository (command below). Copy the command and run it in your command prompt; this downloads the repository into a “kohya_ss” folder. If you downloaded the repository as a ZIP file instead, unzip it with 7-Zip or WinRAR.

Kohya_ss GitHub clone command: git clone https://github.com/bmaltais/kohya_ss.git

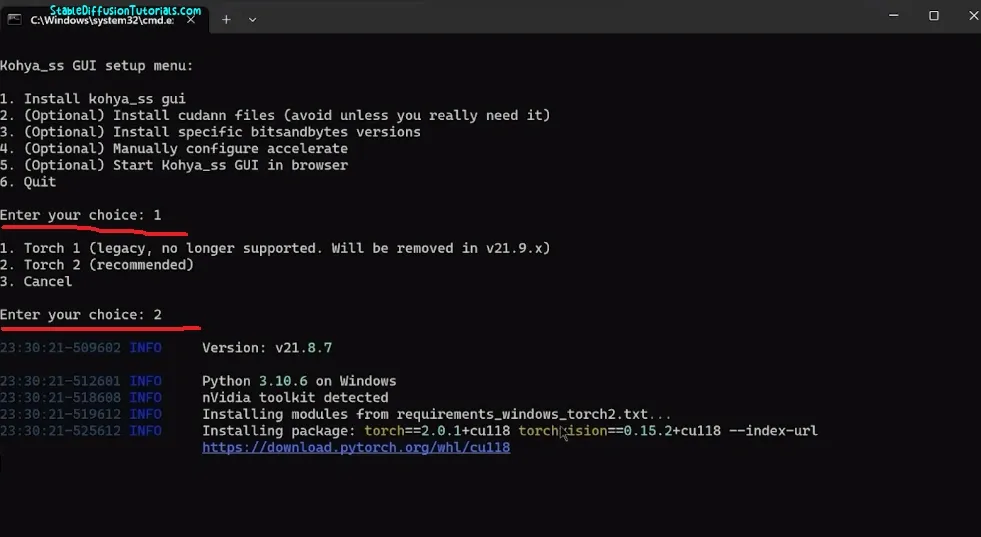

4. After that, open the downloaded Kohya folder and run the “setup.bat” file. A command prompt opens with several options. Press 1 to select the first option, “Install kohya_ss gui”, then press 2 for the “Torch 2 (recommended)” option. This starts the installation, which takes some time depending on your internet speed and machine configuration.

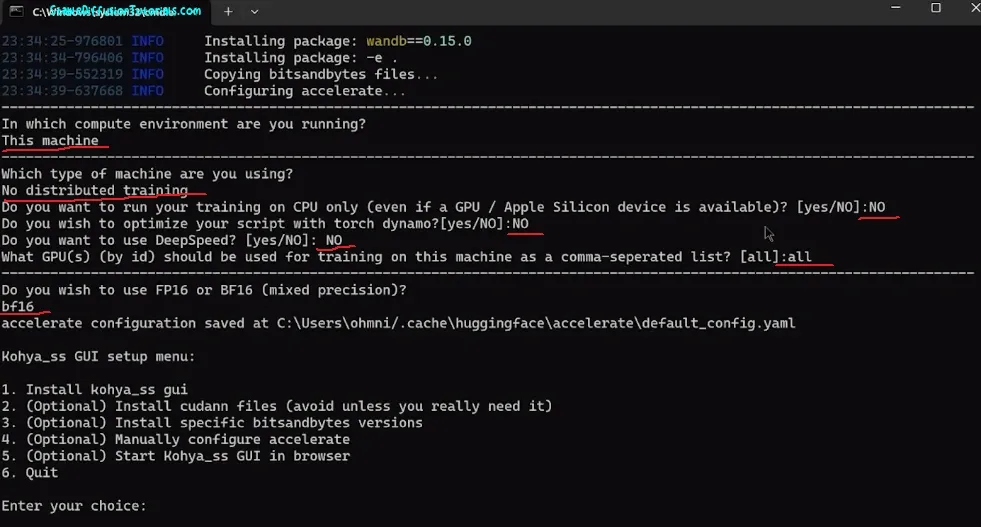

5. After a few minutes of installation you will be asked a series of questions (select the answers with the up and down arrow keys). Use the settings below:

– “In which environment are you running?”: This machine

– “What type of machine are you using?”: No distributed training

– “CPU to start training?”: No (in our case a GPU is installed; without a GPU the CPU will work, but training takes much longer)

– “Do you want to optimize the script with torch dynamo?”: No

– “Do you want to use deep speed?”: No

– “What GPU should be used for training?”: All

– “Do you wish to use FP16 or BF16?”: choose FP16 for older GPUs, or BF16 for Nvidia RTX 3000 series or newer
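The FP16/BF16 rule of thumb from the last question can be written down as a small helper. This is only a sketch of the guideline in this guide: `suggest_precision` is a name we made up, and it only recognises consumer card names of the form “RTX 3060” or “RTX 4090”, so anything else falls back to fp16.

```python
import re


def suggest_precision(gpu_name: str) -> str:
    """Suggest 'bf16' for RTX 3000 series or newer, otherwise 'fp16'."""
    # Look for a four-digit model number right after "RTX".
    match = re.search(r"RTX\s*(\d{4})", gpu_name, flags=re.IGNORECASE)
    if match and int(match.group(1)) >= 3000:
        return "bf16"
    return "fp16"


print(suggest_precision("NVIDIA GeForce RTX 3060"))
print(suggest_precision("NVIDIA GeForce GTX 1080"))
```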

Now close the command prompt; the setup is complete.

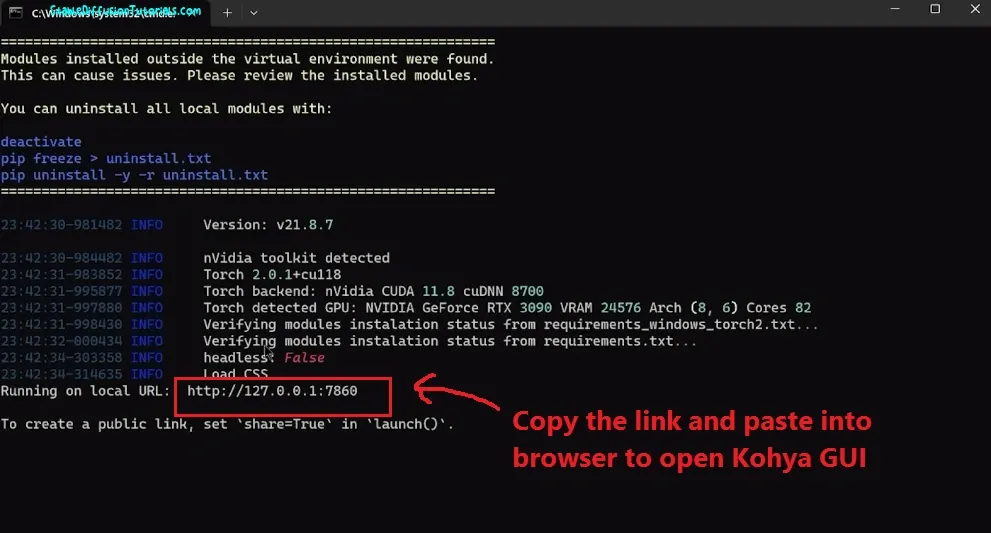

6. Go back to the downloaded Kohya folder and double-click the “gui.bat” file. It opens a command prompt that prints a local host URL:

http://127.0.0.1:7860

Copy the link and paste it into your browser.

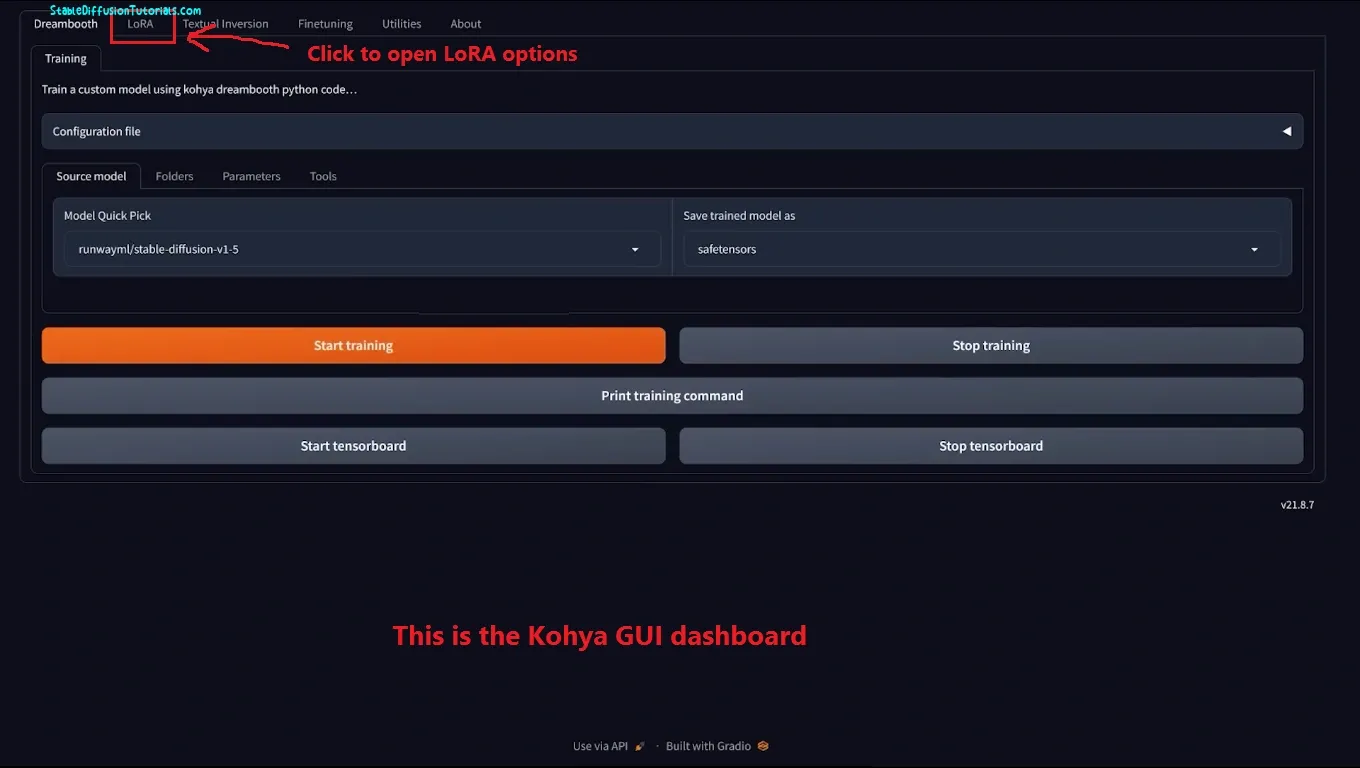

7. This opens the Kohya GUI in your browser. With it you can train using Dreambooth, LoRA and Textual Inversion, and do fine-tuning as well.

We want LoRA training, so we will work in the “LoRA” tab. First, we have to collect the images and build the data set.

You can get images from various image platforms, such as:

-Wikipedia images (non-copyrighted images)

-Pexels, Pixabay or Unsplash: you can search for the type of image or object you want, in different aspect ratios.

-Google Images

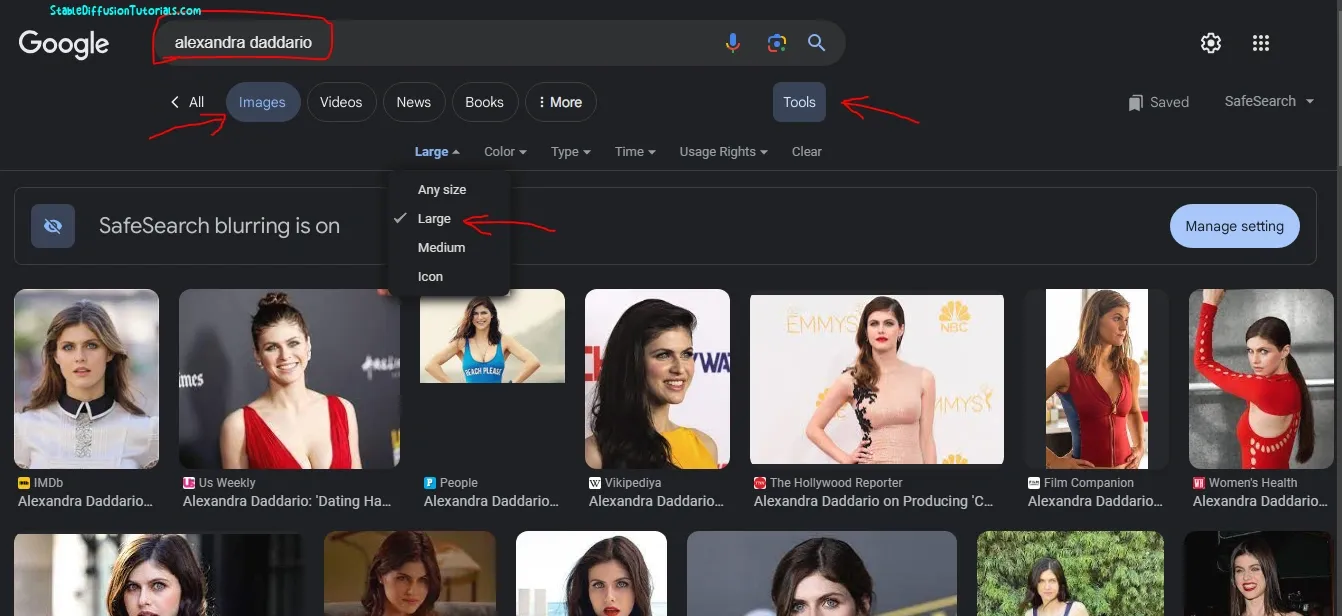

For illustration we are using images of Alexandra Daddario from Google Images.

First search for your character’s name on Google and click “Images” to show only images. Then, in the “Tools” section, open the size drop-down menu and choose “Large” to show only large images.

Please choose images responsibly: whatever platform you build your data set from, use only non-copyrighted images and get consent.

Important points while making a data set:

1. Select high-resolution images, ideally ultra-high-definition (such as 4K).

2. Use varied images of the character (different poses, shots, facial expressions, gestures, clothing etc.); variety makes your data set more accurate and precise.

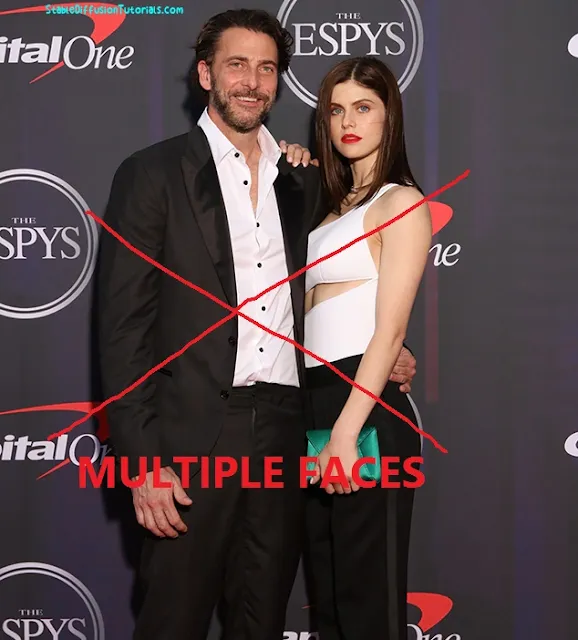

3. The images should not contain multiple faces.

4. Each image should show a single, in-focus face of your selected character.

5. Collect images with varied dimensions for more flexible results.

6. 15-25 images are enough to train a good LoRA model.
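A quick way to check point 6 before training is to count the image files in your data set folder. The sketch below uses only the Python standard library; the function names and the list of extensions are our own assumptions, and it only counts files, so it cannot judge resolution, focus or the number of faces.

```python
from pathlib import Path

# Assumed set of image extensions; adjust to match your files.
IMAGE_EXTENSIONS = {".jpg", ".jpeg", ".png", ".webp"}


def list_training_images(folder) -> list[Path]:
    """Return the image files found directly inside the folder, sorted by name."""
    return sorted(
        path
        for path in Path(folder).iterdir()
        if path.is_file() and path.suffix.lower() in IMAGE_EXTENSIONS
    )


def dataset_size_ok(folder, minimum: int = 15) -> bool:
    """True when the folder holds at least `minimum` images."""
    return len(list_training_images(folder)) >= minimum
```

For example, `dataset_size_ok("path/to/your/images")` returns `False` while the folder still holds fewer than 15 images.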

Don’t use images with multiple faces, as they are bad for model training.

In this image too, the presence of two people keeps the model from training well.

In this image the subject’s face is out of focus, which tends to give bad results after LoRA training.

Again, here the image is out of focus and the background objects make it harder to identify the subject. Images like these are not good for a LoRA model and lead to hallucinated artifacts in the generated output.

After many training runs and many hours spent building huge data sets, we found that using images with different dimensions gives much better results.

Steps to do LoRA Training:

Now it is time to move to the training part in the Kohya GUI. Before you start LoRA training you need to know what each option in the Kohya GUI does. Let’s go through them.

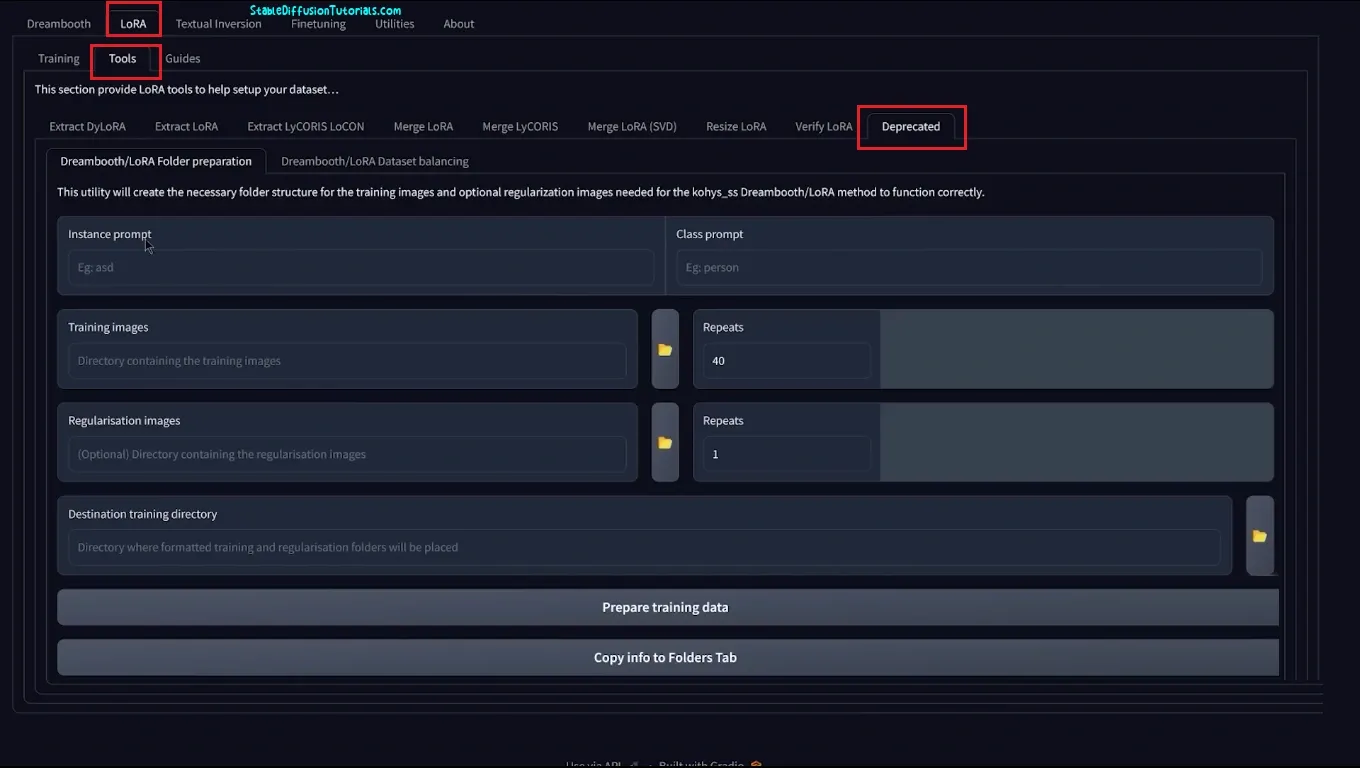

First select the “LoRA” tab, then go to “Tools”, then “Deprecated”. Here you will find several fields, which we explain below:

1. Instance Prompt: Enter the character name in this box. We are training on the Stable Diffusion 1.5 base model, which was itself trained on huge data sets from the internet, so it already knows well-known personalities. Putting the character’s name in this field lets the 1.5 model build on what it already knows.

This means the model does not have to learn the subject from scratch, which saves time and GPU power. When you train on your own face, however, the model has to start from scratch (in this case, simply use your name).

Here, we are entering “Alexandra Daddario”.

We noticed on various Stable Diffusion forums that the community uses tokens such as “ohnx” as the instance prompt in Dreambooth training, and some people plan to apply the same idea to LoRA. In our experience this gives poor results, so use the character name instead.

2. Class prompt: In this box, describe the type of object you want to train, such as girl, boy, lion, tiger or cat. Our character is a woman, so we enter “woman”.

3. Training images: Select the folder containing the images of your character (15-25 images) that you want to train on. We downloaded 25 images and selected the folder containing the Alexandra Daddario images.

4. Regularisation images: Here you provide images of the same class as your character, but not of the character itself. We are training a model for a woman, so we have to provide images of many different women in different shots, poses, facial expressions, skin tones, clothing and art styles.

For example, if you want to train on a cat, you have to build a data set covering a wide range of cat breeds, colors, angles and so on.

You may be surprised by how many images this needs: roughly 3000-5000.

Yes, you read that right!

We collected a total of 3459 images of women in different poses, art styles, facial expressions and clothing. You may be wondering how to get that many images.

The first option is to use a Python library that downloads images from the internet.

If you don’t know Python programming, hand this task to Google Gemini (Bard) or ChatGPT.

Use this prompt to generate python code: “You are a master in python programming having 30 years of experience. Help me to generate a python code which can download images from Google using python library and all the images should be of higher quality(Ultra HD)”.

Now copy the code from ChatGPT, open a new text document, paste the code and save it under any name with the “.py” extension (for example, downloader.py).

Make sure Python is installed, then open a command prompt in the folder where you saved the Python file by typing “cmd” in the address bar of that folder. Then run the file by entering

“python <your-file-name.py>”, replacing the placeholder with your own file name.
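For reference, a downloader script of this kind can be very small. The sketch below is our own illustration, not the code an assistant will necessarily produce: it assumes you already have a list of direct image URLs that you are allowed to use, it does not search Google, and the `download_images` name and the `reg_` file prefix are hypothetical.

```python
import urllib.parse
import urllib.request
from pathlib import Path


def download_images(urls, dest_dir, prefix="reg"):
    """Download each URL into dest_dir and return the list of saved paths."""
    dest = Path(dest_dir)
    dest.mkdir(parents=True, exist_ok=True)
    saved = []
    for index, url in enumerate(urls):
        # Keep the original file extension when the URL has one.
        suffix = Path(urllib.parse.urlparse(url).path).suffix or ".jpg"
        target = dest / f"{prefix}_{index:05d}{suffix}"
        try:
            with urllib.request.urlopen(url, timeout=30) as response:
                target.write_bytes(response.read())
        except OSError as error:  # covers URLError, HTTPError and timeouts
            print(f"skipped {url}: {error}")
            continue
        saved.append(target)
    return saved
```

Saved as downloader.py with a list of URLs and a call to `download_images(urls, "reg_images")` at the bottom, it runs from the command prompt like any other Python file. Failed downloads are skipped rather than stopping the whole run, which matters when you are collecting thousands of images.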

Another way is to download a ready-made data set from GitHub or Kaggle (well-known sources of machine learning data sets).

A further alternative is to use Automatic1111 to generate many images in one go. To do this, right-click the “Generate” button.

5. Repeats: This option defines how many times each training image is used in one epoch. Set the first Repeats box to “20” and the other Repeats box to “1”.

6. Destination Training Directory: This is the destination folder where the training and regularisation images are saved in a new folder structure.

Now click “Prepare training data” to run the setup.

Open the destination folder and you will see the folders “log”, “img”, “model” and “reg”. Inside the “img” folder there is an image folder holding your training images. In our case it is named “20_alexandra_daddario woman” (yours will differ if you chose a different character, class or repeats).
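To make the folder layout concrete, here is a minimal sketch that creates the same structure by hand. It is our own illustration of the naming pattern described above (repeats, underscore, instance prompt, space, class prompt), not Kohya’s code, and `prepare_folders` is a hypothetical name.

```python
from pathlib import Path


def prepare_folders(dest_dir, instance_prompt, class_prompt,
                    train_repeats=20, reg_repeats=1):
    """Create the img/reg/log/model layout and return the created paths."""
    root = Path(dest_dir)
    folders = {
        # Training images: "<repeats>_<instance prompt> <class prompt>"
        "img": root / "img" / f"{train_repeats}_{instance_prompt} {class_prompt}",
        # Regularisation images: "<repeats>_<class prompt>"
        "reg": root / "reg" / f"{reg_repeats}_{class_prompt}",
        "log": root / "log",
        "model": root / "model",
    }
    for path in folders.values():
        path.mkdir(parents=True, exist_ok=True)
    return folders


folders = prepare_folders("lora_training", "alexandra_daddario", "woman")
print(folders["img"].name)
```

The number at the front of each folder name is the Repeats value, which is how the trainer knows how often to reuse the images in that folder.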

Go back to the Kohya_ss GUI and click “Copy info to Folders Tab”. Now click “Training” at the top, then select the “Folders” tab; you will see that all the settings have been filled in. Then go to Model Output name and enter your character’s name (in our case “Alexandra_Daddario”).

The next part is to generate a caption for each image. Captions help the LoRA model become more accurate and precise, which yields a greater variety of AI art. But does that mean you have to write captions for all of these images by hand?

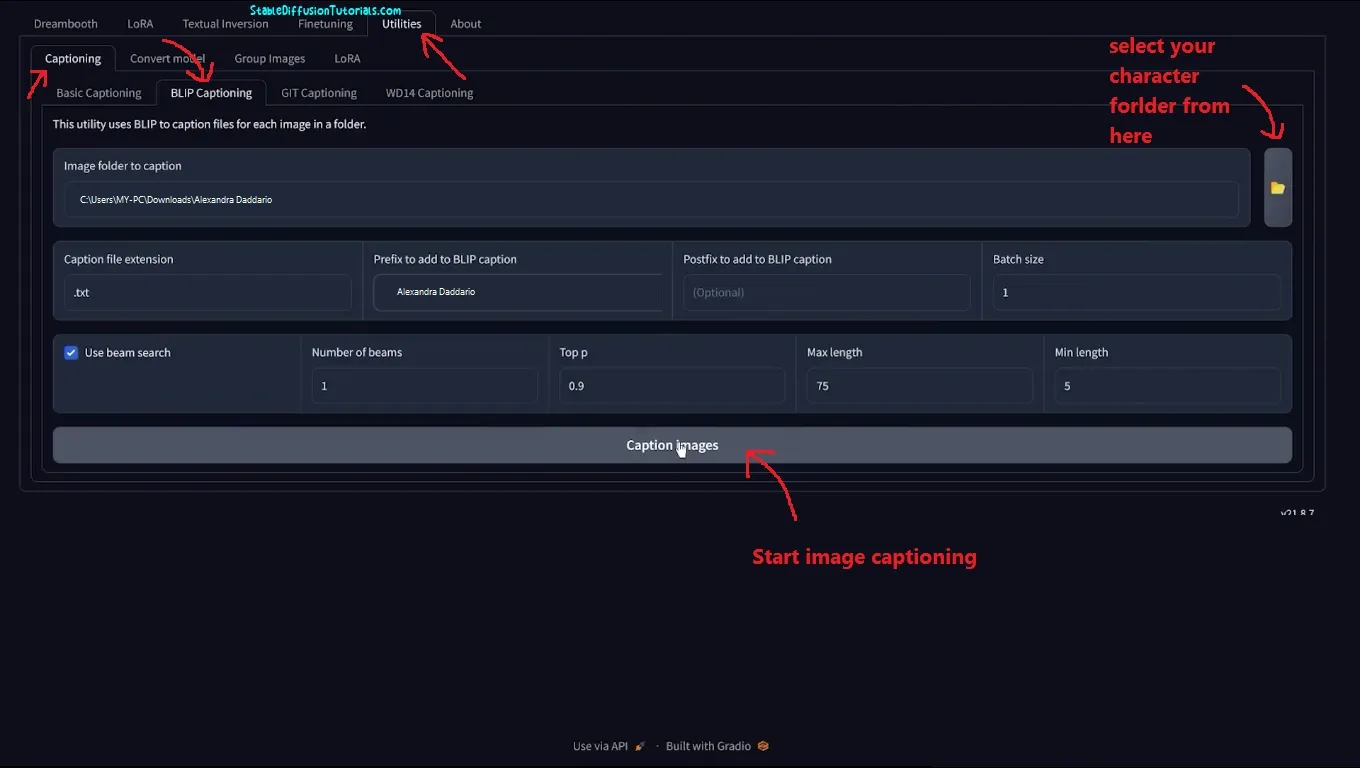

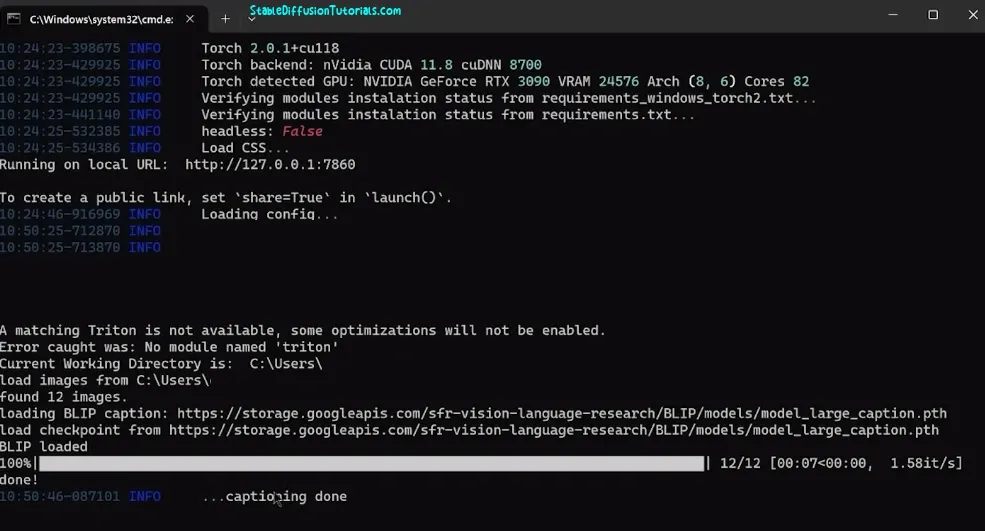

Actually no, you can generate a caption for each image automatically using the BLIP model.

To do that, click “Utilities”, then select “Captioning”, then go to “BLIP captioning”.

Enter the path of the image folder. Caption file extension: enter “txt”. Prefix to add to BLIP caption: Alexandra Daddario (in our case; enter your own).

Leave all the other settings as they are and click “Caption Image” to start captioning.

It takes a few seconds (in our case, 5 seconds).

Now go to the folder where the images were captioned. You will find a new text file for each image, describing it.

The BLIP model does not always work perfectly, so you need to open the files one by one and check whether each generated caption matches its image. If not, add a more detailed description to the caption, which helps the LoRA model generate more accurate images.

After captioning, select all the caption text files and move them into the “20_alexandra_daddario woman” folder inside the “img” folder of the destination directory.
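Since every image needs a caption file with the same name next to it, it is worth checking that none were missed when moving files around. A minimal sketch, assuming captions use the “.txt” extension and sit in the same folder as the images; the function name and extension list are our own.

```python
from pathlib import Path

# Assumed set of image extensions; adjust to match your files.
IMAGE_EXTENSIONS = {".jpg", ".jpeg", ".png", ".webp"}


def images_missing_captions(folder, caption_ext=".txt") -> list[str]:
    """Return the names of images that have no caption file beside them."""
    missing = []
    for image in sorted(Path(folder).iterdir()):
        if image.suffix.lower() not in IMAGE_EXTENSIONS:
            continue
        # photo_01.jpg is expected to have photo_01.txt in the same folder.
        if not image.with_suffix(caption_ext).exists():
            missing.append(image.name)
    return missing
```

An empty list from `images_missing_captions("img/20_alexandra_daddario woman")` means every image in that folder has its caption.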

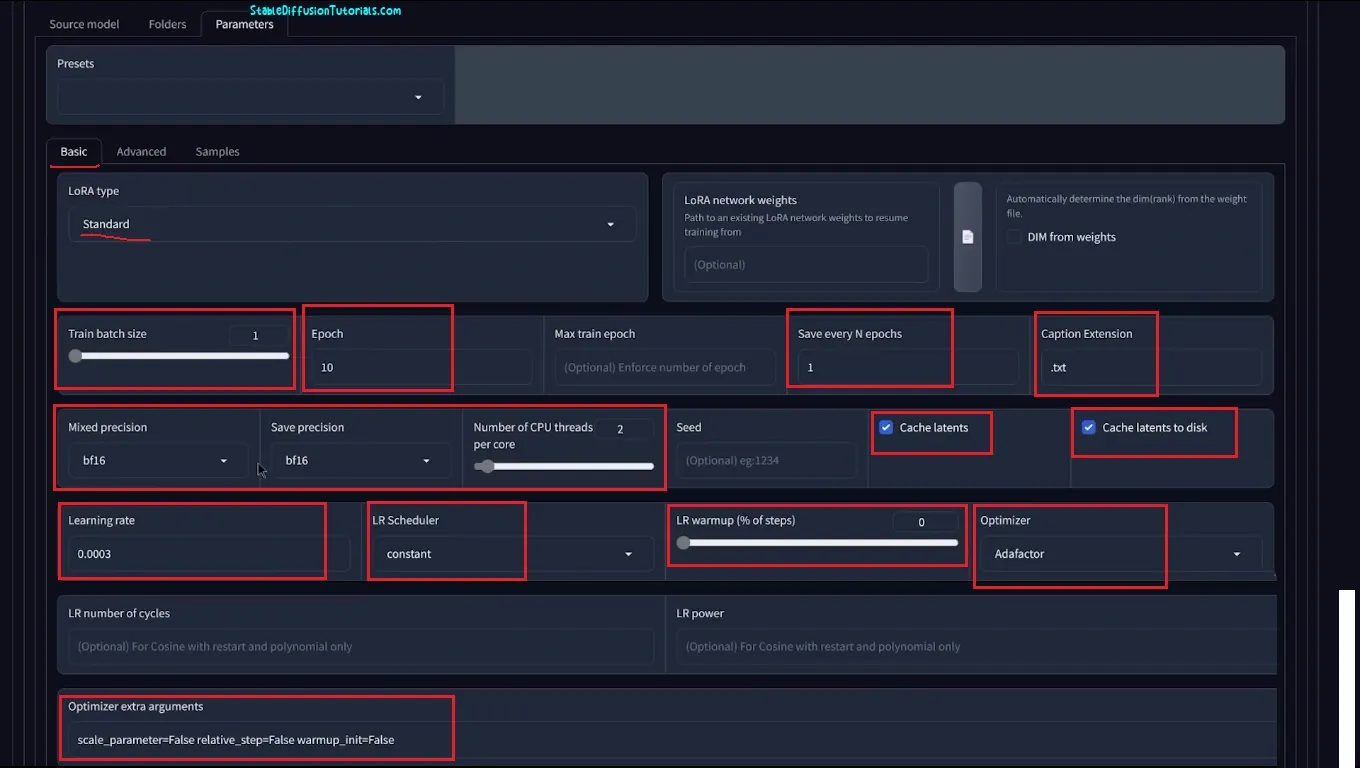

Go back to the Kohya_ss GUI, select the “LoRA” tab, select “Training”, then select “Parameters” and set the following values:

–Train Batch Size: 1 ; This is the number of images processed in one go during training. Increasing it consumes more GPU memory but makes training faster.

–Epoch: 10 ; This is the number of training cycles: 10 epochs means 10 passes over the data set. We chose 10 to get the best possible result; 5 is a reasonable lower setting.

This combines with the Repeats value of 20 from point 5. With our 25 training images, one epoch is 25 images × 20 repeats = 500 steps at batch size 1.

So 10 epochs come to 25 × 20 × 10 = 5000 steps.

The number of epochs determines whether the model ends up overtrained or undertrained.

More epochs make the model more precise, but too high a value will overfit it. Finding the right value comes with practice.

–Save every N epochs: 1

–Captions Extension: .txt

–Mixed Precision: fp16 (for older GPUs), bf16 (RTX 3000 series or newer GPUs, or TPUs)

–Save Precision: fp16 (for older GPUs), bf16 (RTX 3000 series or newer GPUs, or TPUs)

–Number of CPU threads per core: 2

–Cache latents: checked

–Cache latents to disk: checked
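The relationship between images, Repeats, Epoch and Train Batch Size in the list above can be worked out with a few lines of Python. This is a back-of-the-envelope sketch with a function name of our own; the step count the trainer reports may differ, for instance when regularisation images are used.

```python
import math


def total_training_steps(num_images, repeats, epochs, batch_size=1):
    """Estimate optimizer steps: images x repeats per epoch, grouped into batches."""
    steps_per_epoch = math.ceil(num_images * repeats / batch_size)
    return steps_per_epoch * epochs


# Our setup: 25 images, 20 repeats, 10 epochs, batch size 1.
print(total_training_steps(25, 20, 10))  # 5000
```

Doubling the batch size halves the number of steps, which is why a larger batch trains faster when your GPU has the memory for it.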

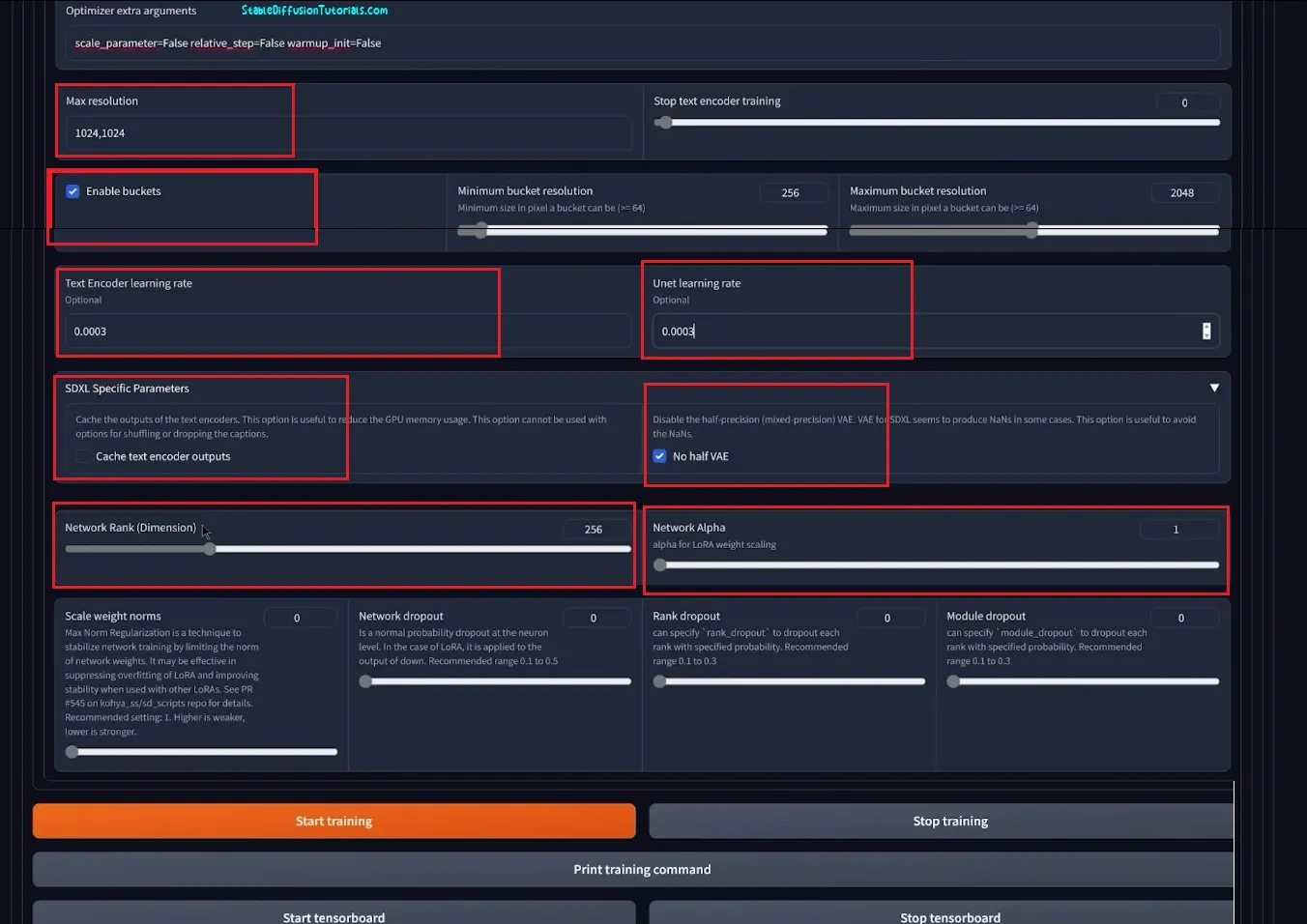

–Learning Rate: 0.0003 (recommended range should be from 0.0001 to 0.0003)

–LR warm up: 0

–Optimizer: Adafactor

–Optimizer extra arguments: scale_parameter=False relative_step=False warmup_init=False

–Max resolution: 1024,1024 (A higher resolution gives your model more flexibility to generate at different resolutions but consumes more GPU power.)

–Enable Buckets: Checked (This lets the trainer group your data set images by aspect ratio and train on them at their own proportions instead of cropping them all to one size.)

–Text encoder learning rate: 0.0003 (set same value as in Learning rate)

–Unet learning rate: 0.0003 (set same value as in Learning rate)

–SDXL GPU Parameters: checked (this uses caching and makes GPU memory usage more efficient)

–No Half VAE: Checked

–Network Rank: 256 (Adds clarity, detail and flexibility in generation. The higher the value, the larger your model file will be. That is why the LoRA models on CivitAI range from 2MB to 6GB.)

A setting of 256 gives more clarity, but the model will be bigger and training will consume more GPU power.

–Network Alpha: 1 (This controls how strongly the LoRA weights are scaled during training.)

If you want a smaller model, try Network Rank 32 and Network Alpha 16.
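To see why Network Rank drives the file size and what Network Alpha does, recall that LoRA adds two small matrices per adapted layer and scales their product by alpha divided by rank. The sketch below is plain arithmetic under that standard LoRA formulation; the function names and the 768-wide example layer are our own assumptions, not values read from Kohya.

```python
def lora_scale(network_alpha: float, network_rank: int) -> float:
    """Scaling factor applied to the LoRA update: alpha / rank."""
    return network_alpha / network_rank


def lora_params_per_layer(in_features: int, out_features: int, network_rank: int) -> int:
    """Extra parameters for one layer: a (rank x in) and an (out x rank) matrix."""
    return network_rank * (in_features + out_features)


# Rank 256 with alpha 1 versus rank 32 with alpha 16, on a 768 x 768 layer.
for rank, alpha in [(256, 1), (32, 16)]:
    print(rank, alpha, lora_scale(alpha, rank), lora_params_per_layer(768, 768, rank))
```

The parameter count grows linearly with the rank, so rank 256 stores eight times as many weights per layer as rank 32, which is where the difference in model size comes from.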

Now click the “Advanced” tab in the top menu:

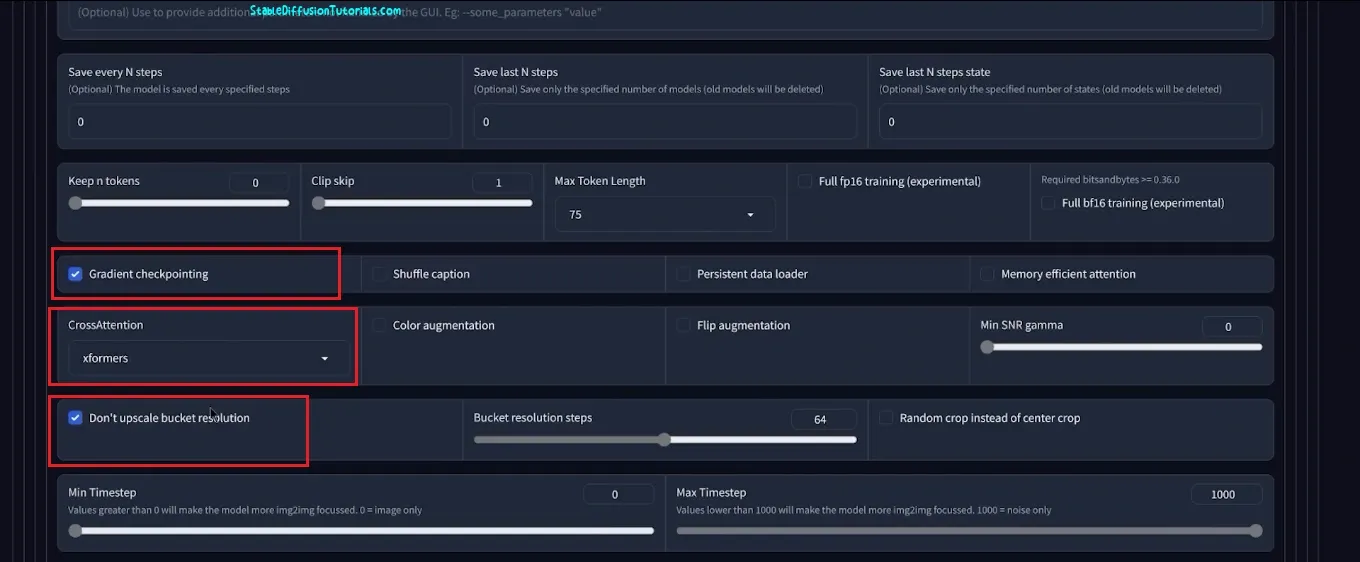

–Gradient Checkpointing: Checked

–Cross Attention: xformers

–Don’t upscale bucket resolution: checked

Leave the rest of the settings as they are.

Phew… that is a lot of settings, isn’t it?

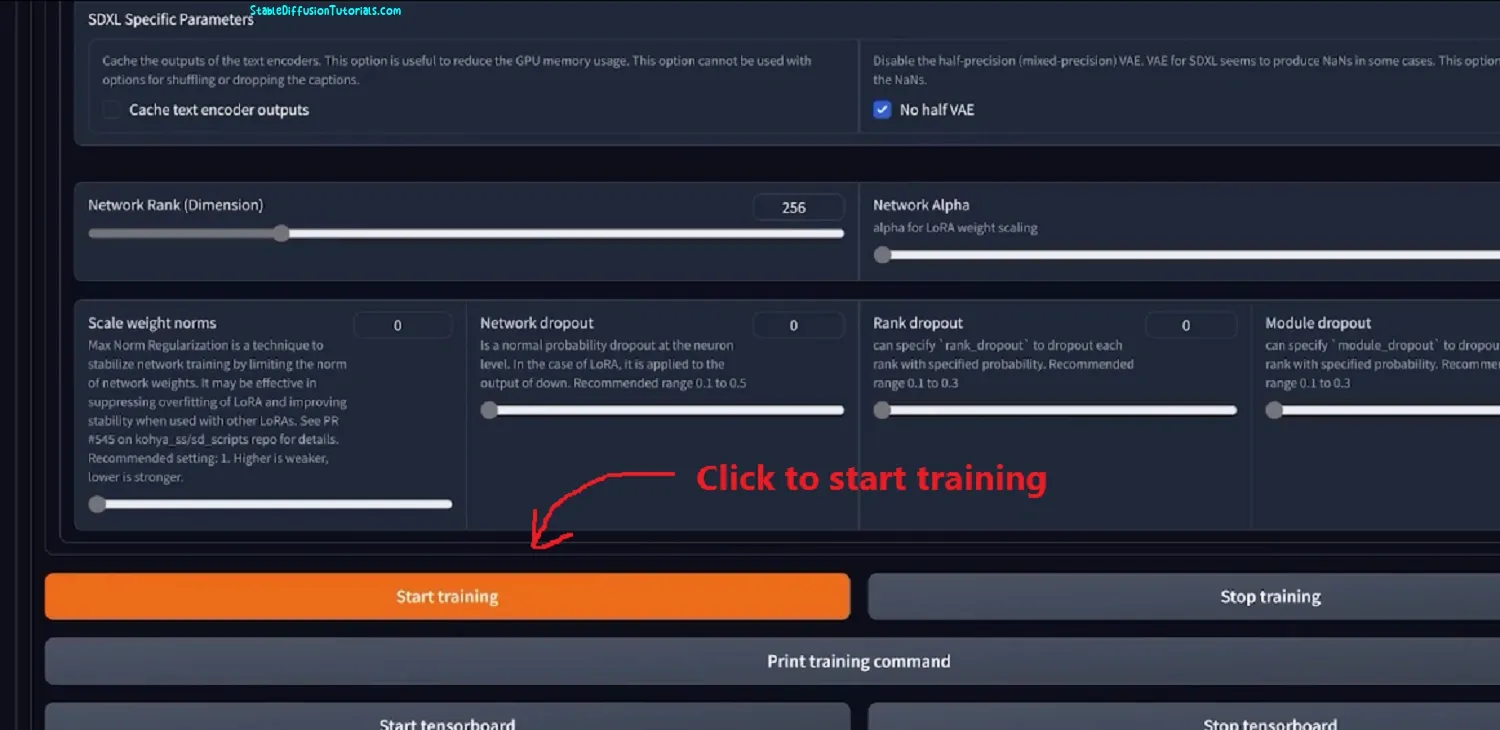

Now click the “Start Training” button below.

A command prompt will appear showing the current status and how much time training will take.

In our case training took around 1.5 hours and produced a 5GB LoRA model that is flexible enough to generate clear images in any art style.

Why?

Because we set the epoch value to 10 to get the most flexible model possible. You can set Epoch to 2 or 5 instead.

We know you have come a long way. The wait is over!

Let’s generate images with this model. We used the prompts below to generate multiple images. To generate an image from your trained LoRA, you must include both your class prompt and your instance prompt in the prompt box.

Examples are shown below.

Prompt: a beautiful Alexandra Daddario, woman, sitting on a chair, red lipstick, night city, highly detailed.

Prompt: a beautiful Alexandra Daddario, woman, wearing jeans, standing near shop, red lipstick, night city, highly detailed, unreal engine, trending on art station,8k

Keep in mind that we used “woman” as the class prompt and “Alexandra Daddario” as the instance prompt, which is also called the “trigger word”. So whenever we want to generate images, we have to put these two tokens into our prompt.
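If you generate many prompts, a tiny helper can make sure the two tokens are never forgotten. This is just a convenience sketch with function names of our own; it builds and checks plain text and does not talk to any image generator.

```python
def build_prompt(instance_prompt: str, class_prompt: str, details: list[str]) -> str:
    """Join the trigger word, the class prompt and extra details with commas."""
    parts = [instance_prompt, class_prompt, *details]
    return ", ".join(part.strip() for part in parts if part.strip())


def has_trigger_words(prompt: str, instance_prompt: str, class_prompt: str) -> bool:
    """True when both the instance prompt and the class prompt appear in the prompt."""
    text = prompt.lower()
    return instance_prompt.lower() in text and class_prompt.lower() in text


prompt = build_prompt("Alexandra Daddario", "woman", ["sitting on a chair", "red lipstick"])
print(prompt)
print(has_trigger_words(prompt, "Alexandra Daddario", "woman"))
```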