After using Stable Diffusion for a long time, one day we started facing frequent disconnects.

So we searched the internet and learned that Google Colab has blocked the use of Automatic1111 with Stable Diffusion because it consumes a large amount of computational power.

However, we found several tricks for installing and running Stable Diffusion for free, both on Google Colab and on a PC, and we are here to show you how to do the same.

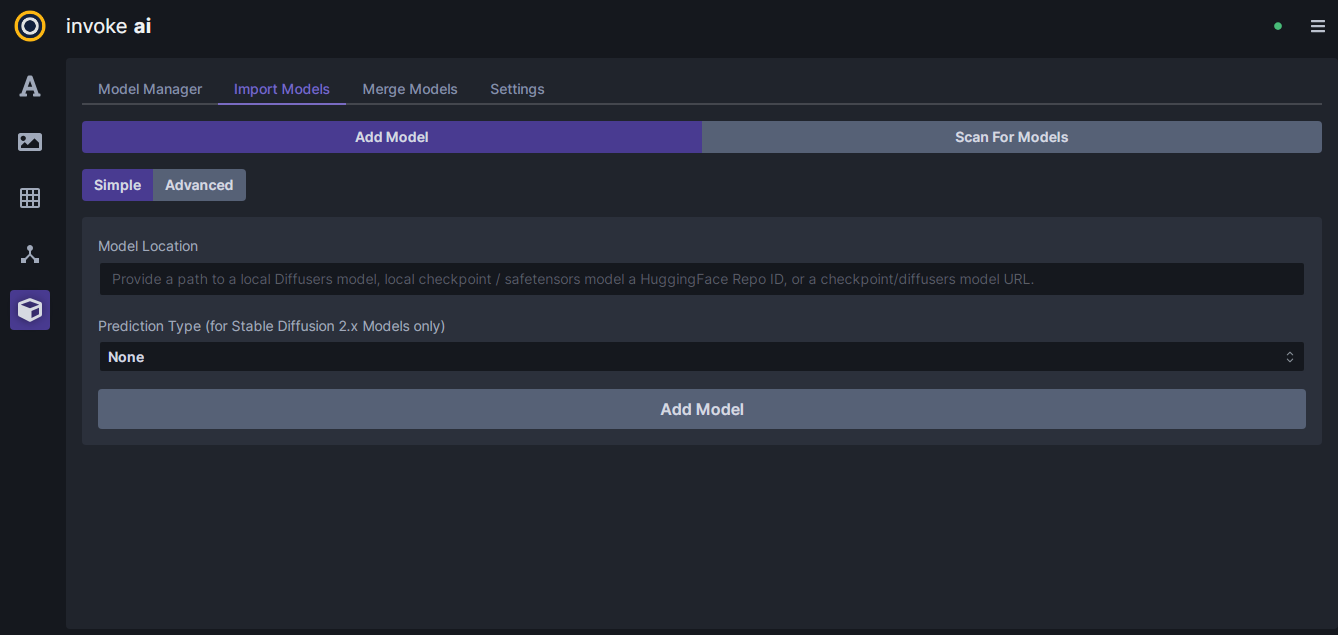

Invoke AI is a WebUI for creating AI images with Stable Diffusion. Its simple user interface also lets users download and install multiple models with a single click. Like Automatic1111, it can be hosted in the cloud or installed on a local machine.

The frontend UI is built with the JavaScript framework ReactJS. The interface is designed for professional editors and art enthusiasts who work on PCs, mobiles, or tablets.

Some Fantastic Features:

1. It supports ckpt files and Stable Diffusion models, which can be downloaded from Hugging Face.

2. It can load Stable Diffusion models in versions 2.0, 2.1, XL, and XL Turbo.

3. A simple user interface for tablets and mobiles.

4. A node-based workflow that makes each pipeline easier to understand.

5. Customizable pipelines that artists can share instantly.

6. Rich metadata embedded in generated images, which helps when managing projects.

7. Drag-and-drop support.

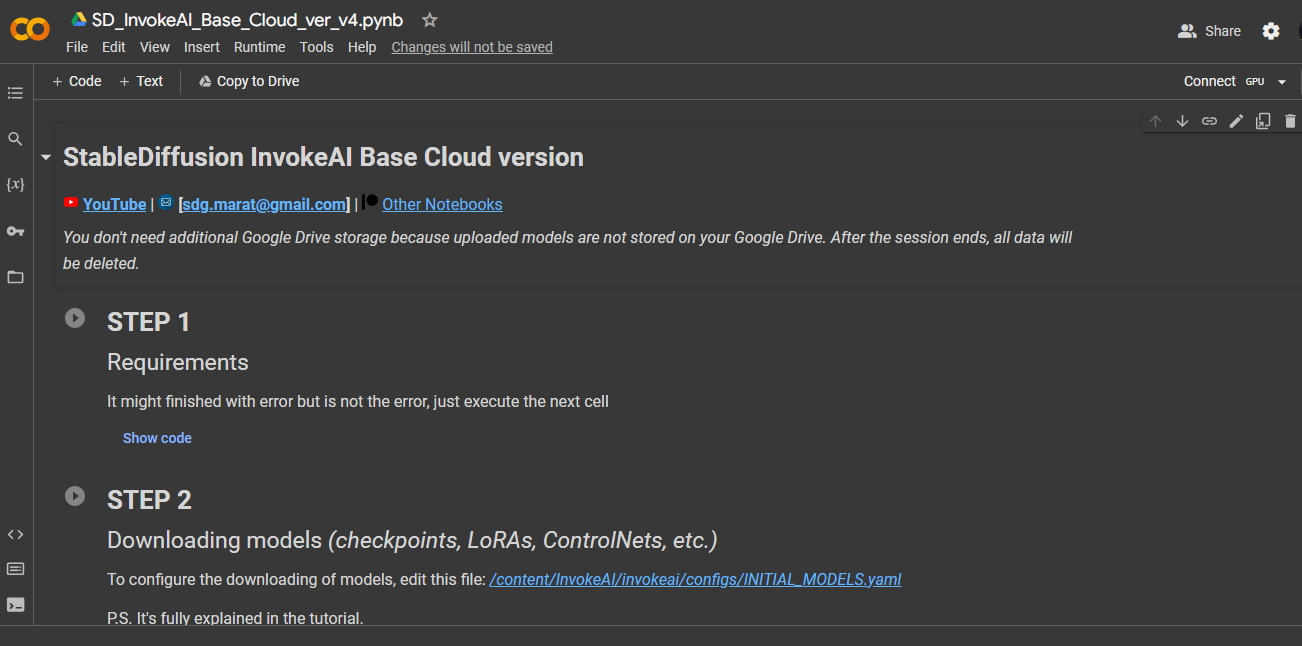

Steps to install Invoke AI on Google Colab:

1. First, open the Google Colab link to set up the environment:

https://colab.research.google.com/drive/143_3pv8csybgkKnWyDVCi8bhVo16_5AI?usp=sharing

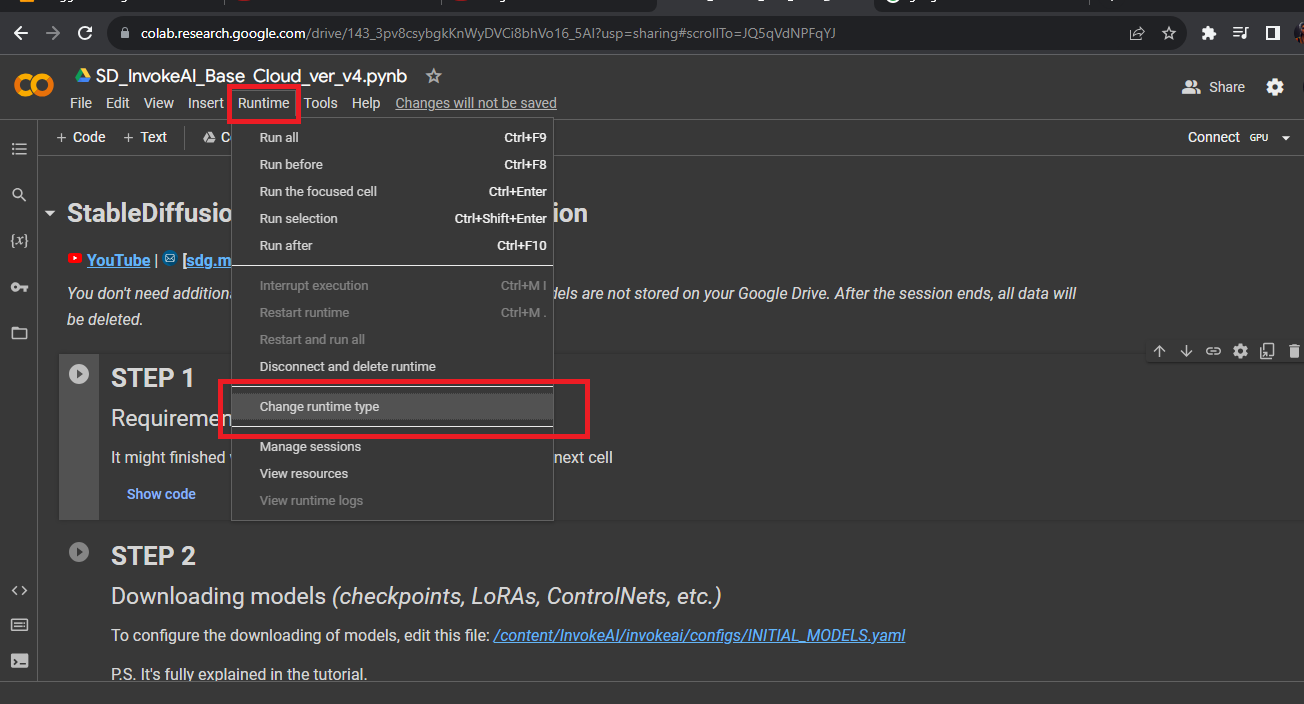

2. Open the Runtime menu in the menu bar and click Change Runtime Type.

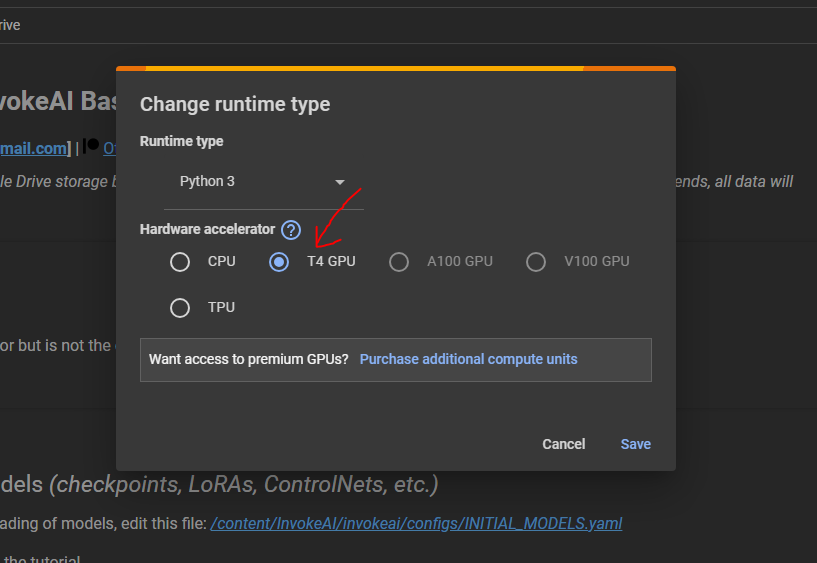

Then select T4 GPU (CPU is selected by default) and click Save.

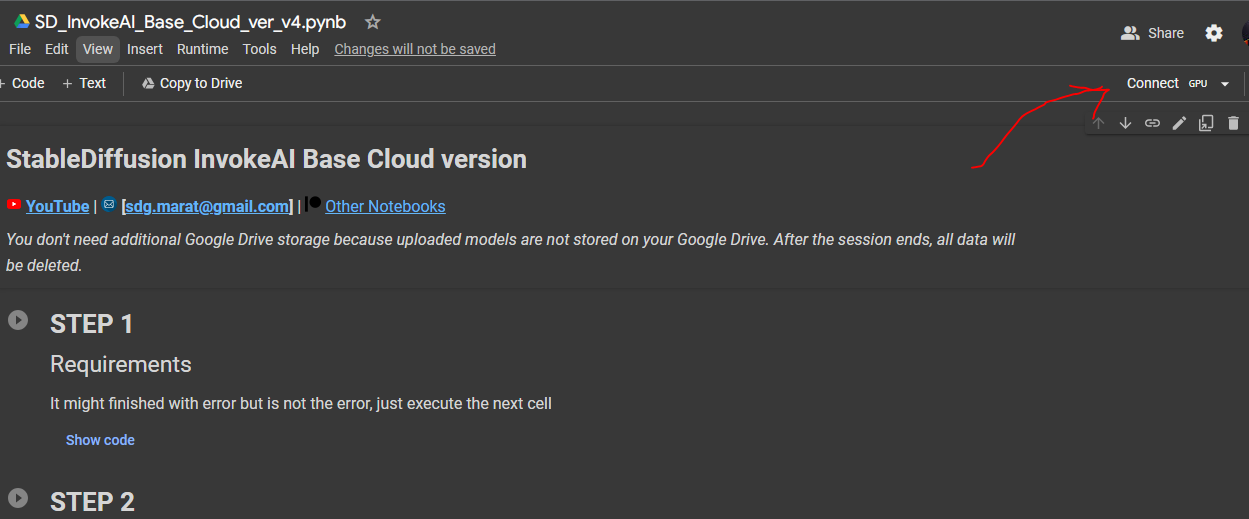

3. Click the Connect button in the top-right corner to connect to the GPU environment.
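Once the runtime is connected, you may want to confirm that a GPU is actually visible before starting the long installation. The snippet below is a minimal sketch and not part of the notebook linked above. It assumes the `nvidia-smi` tool is on the PATH whenever a GPU runtime is attached, and `gpu_name` is a helper name we made up for illustration.

```python
import shutil
import subprocess
from typing import Optional


def gpu_name() -> Optional[str]:
    """Return the first GPU name reported by nvidia-smi, or None if none is visible."""
    # Assumption: nvidia-smi is only available when a GPU runtime is attached.
    if shutil.which("nvidia-smi") is None:
        return None
    result = subprocess.run(
        ["nvidia-smi", "--query-gpu=name", "--format=csv,noheader"],
        capture_output=True,
        text=True,
    )
    if result.returncode != 0:
        return None  # the tool exists but could not talk to a GPU
    lines = result.stdout.strip().splitlines()
    return lines[0] if lines else None


name = gpu_name()
if name:
    print(f"GPU detected: {name}")
else:
    print("No GPU detected; check the runtime type.")
```

If no GPU is reported, go back to Change Runtime Type and make sure T4 GPU is selected.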

4. Now run the first cell (STEP 1) by clicking the play button, then click Run Anyway (the confirmation for the Google Colab terms and conditions).

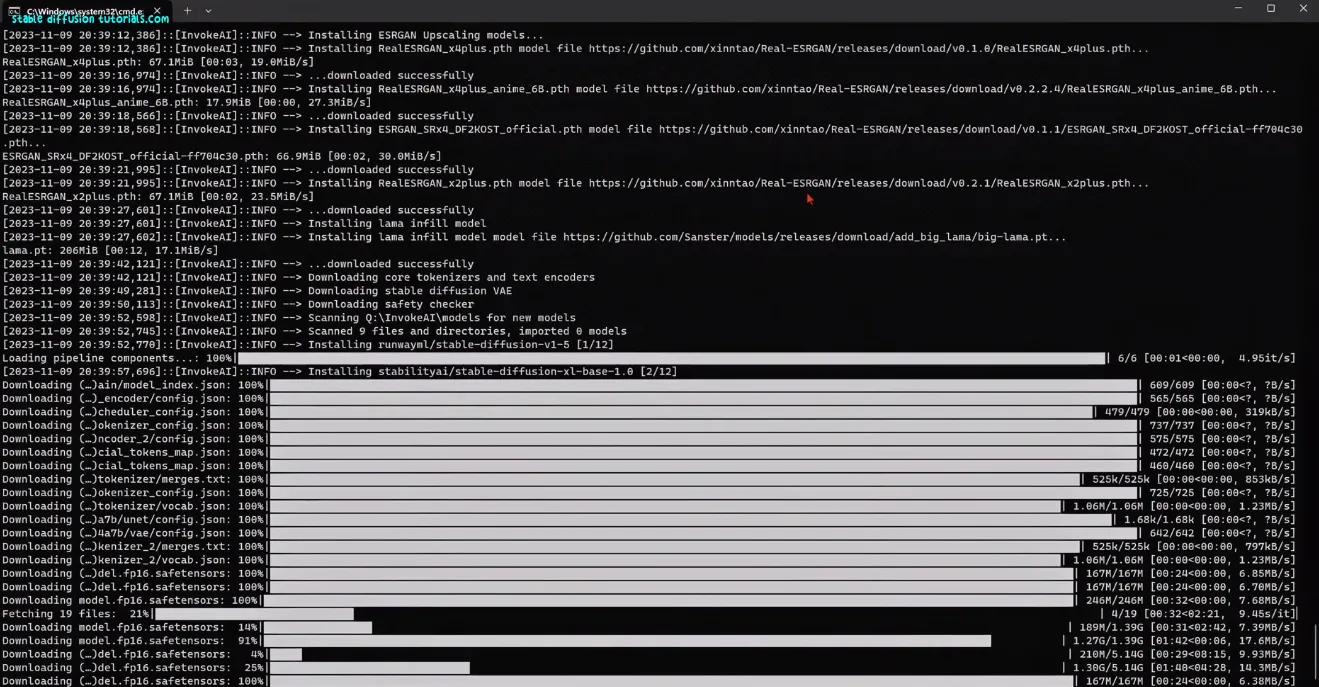

This can take some time because the models are large and take a while to download and install in the runtime. In our case, it took around 8 minutes.

Once installation finishes, a green check mark appears, confirming that the cell has finished installing.

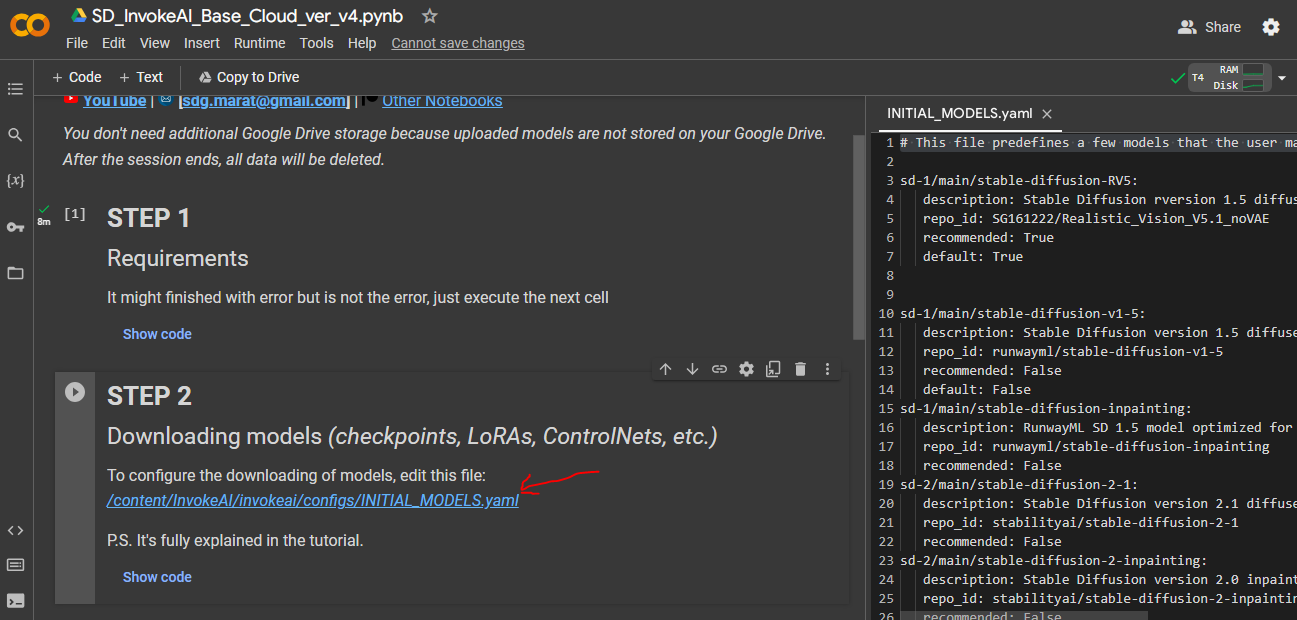

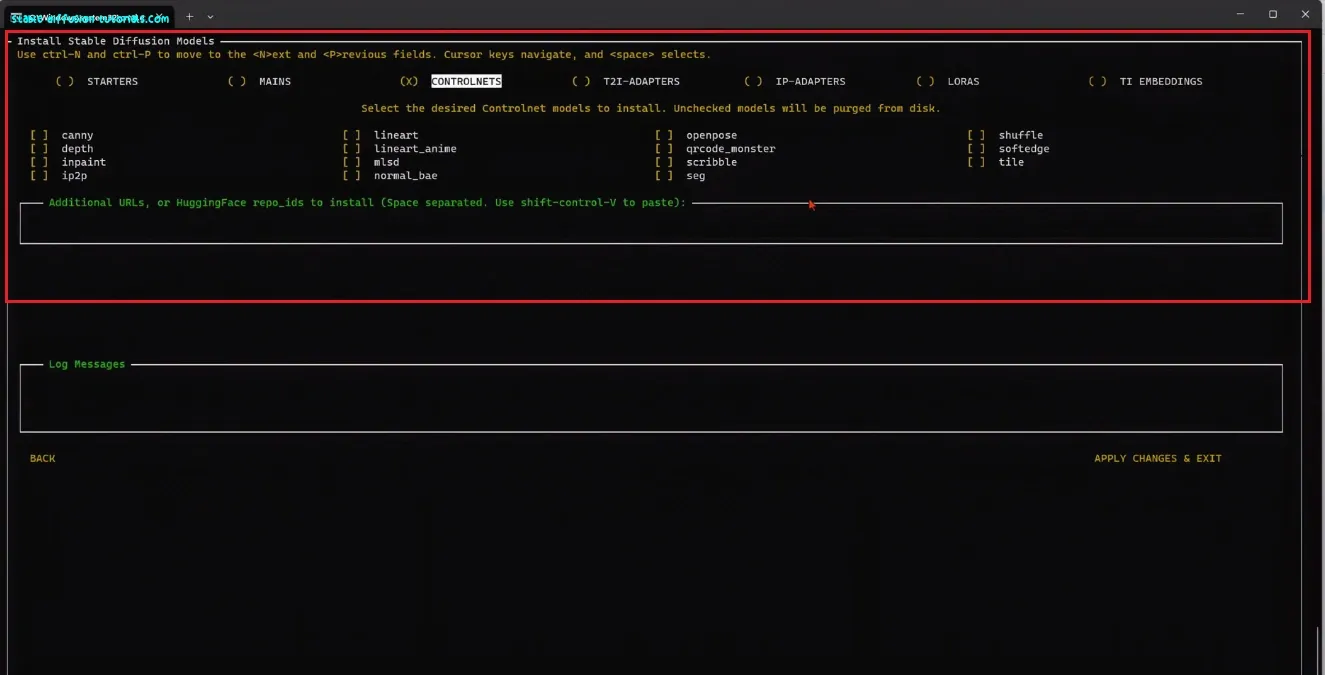

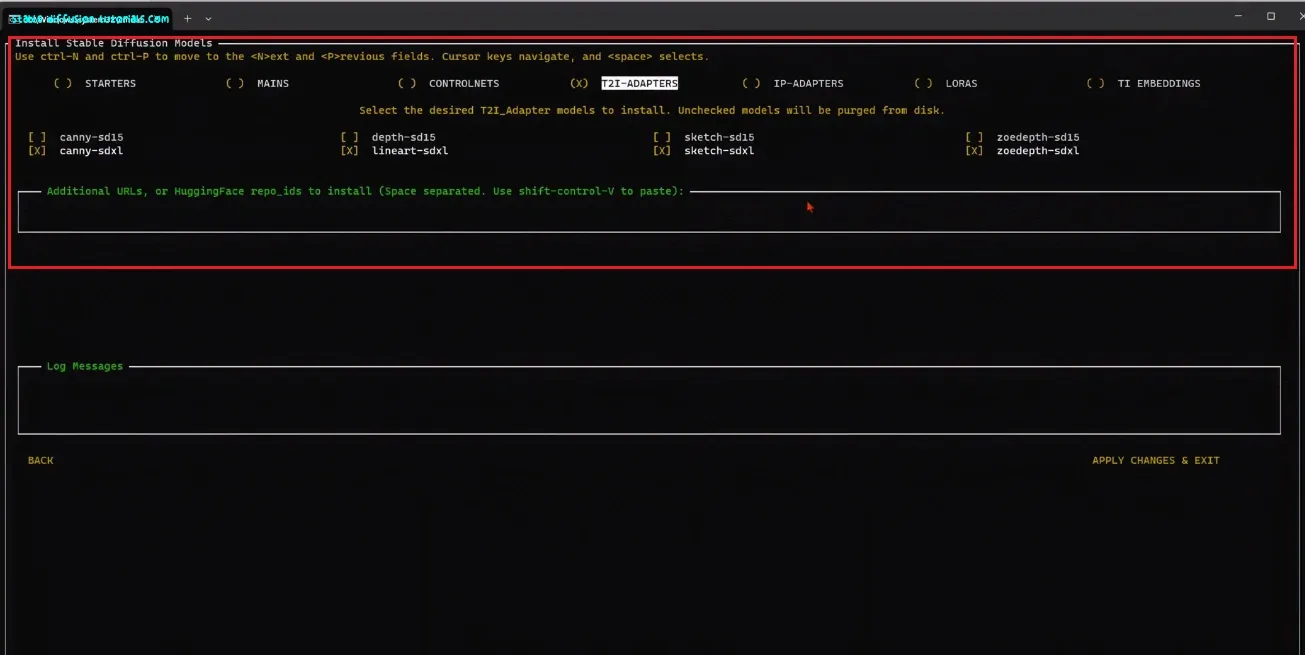

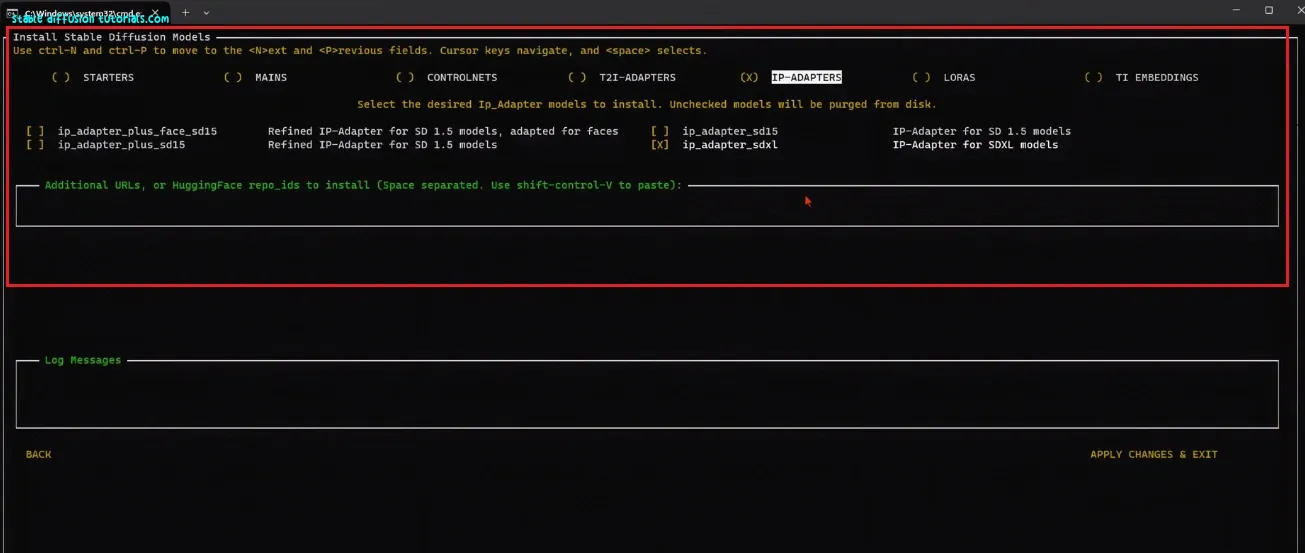

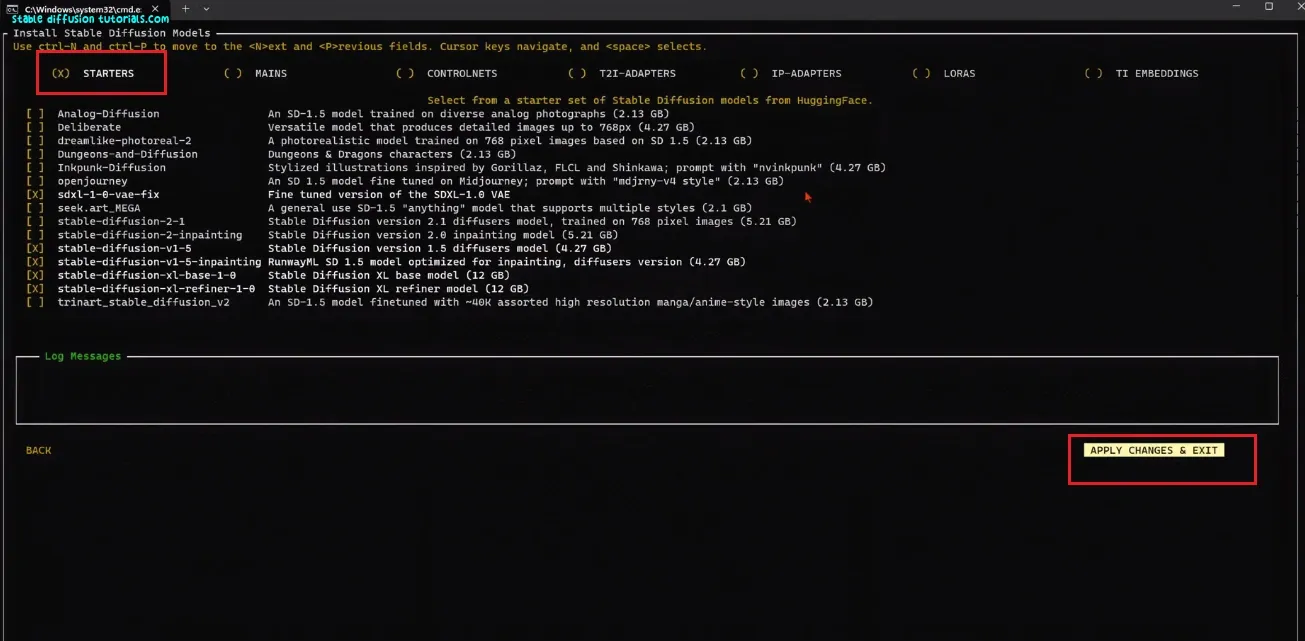

5. Before running STEP 2, click the blue link provided in Step 2. There you can review and select the models you want to install.

By default, the Stable Diffusion Realistic version is selected.

To install another version, change its value from False to True. Here, we are installing the default model.

Now click the play button to run STEP 2. As before, this takes some time. In our case, it took around 4 minutes.

Once installation finishes, a green check mark appears, confirming that the step has completed.

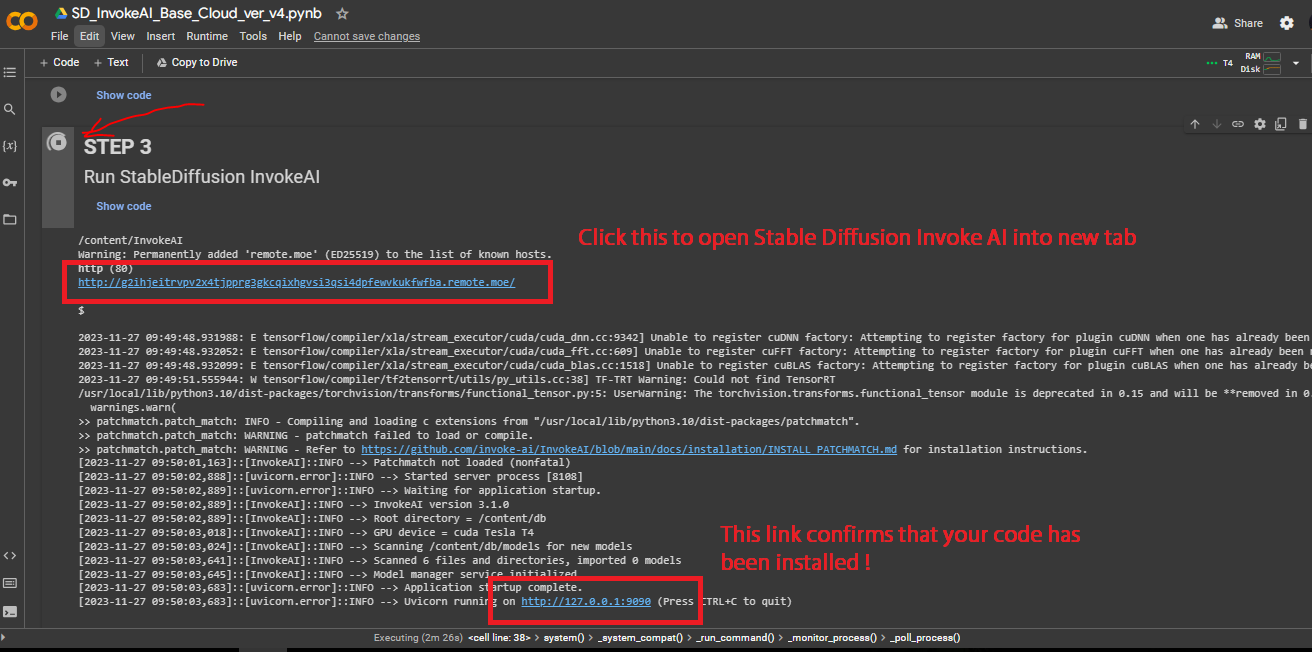

6. Finally, run the third cell, STEP 3.

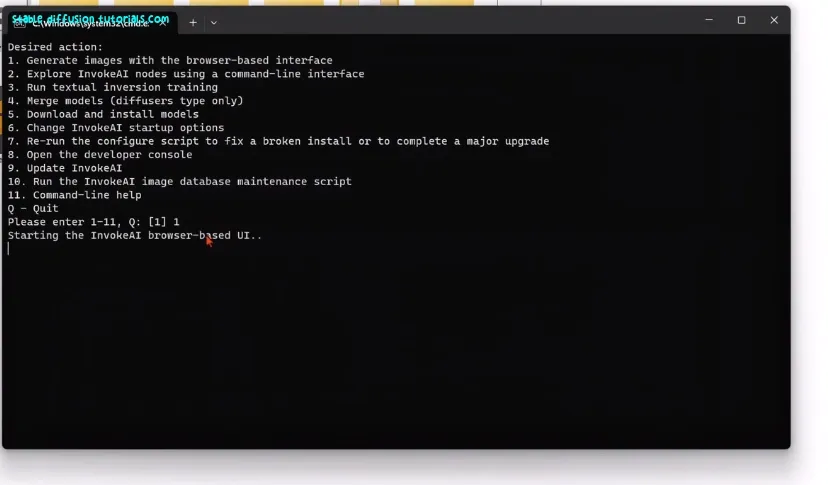

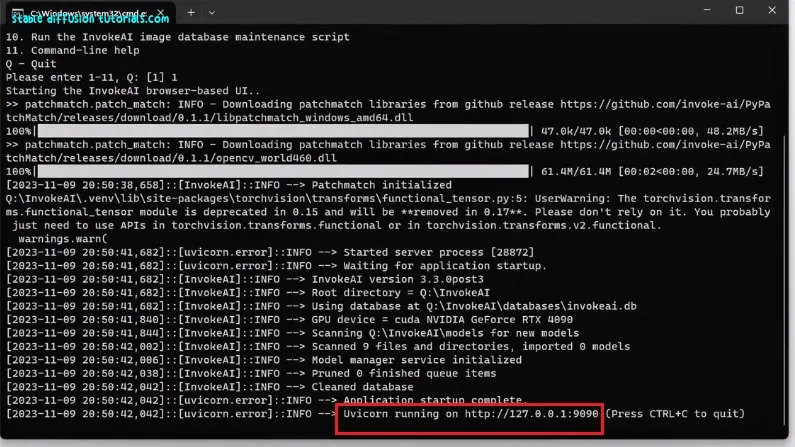

7. Wait a moment until you see a link like this: “https://127.0.0.1…”.

Then open the first blue link that appears once the model has been installed and hosted.

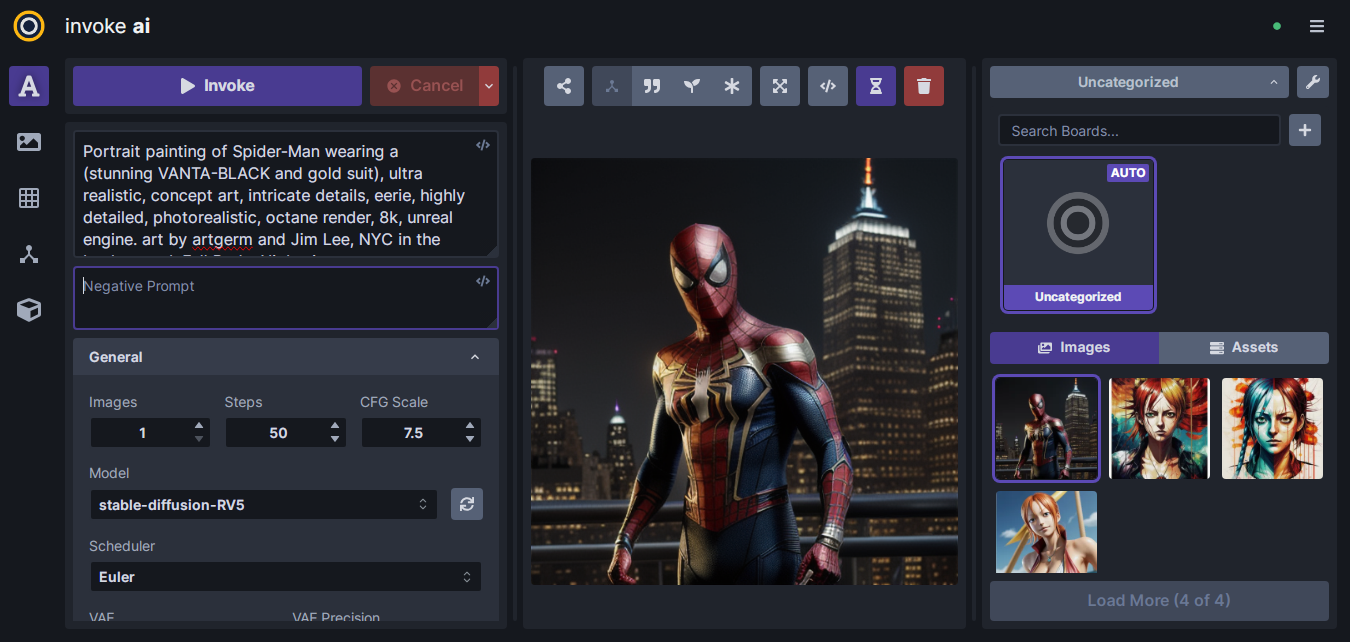

8. You now have the new UI provided by Invoke AI. Here, we can set:

- Positive prompts
- Negative prompts
- Number of images
- Steps
- CFG scale
- Type of Stable Diffusion model
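To make these settings concrete, here is a small Python sketch that groups them into one object and flags obviously invalid values. It is an illustration only and not Invoke AI's own API. The class name, field names, and default values are all assumptions chosen for this example.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class GenerationSettings:
    """Hypothetical container for the settings entered in the UI."""
    positive_prompt: str
    negative_prompt: str = ""
    num_images: int = 1      # illustrative default, not taken from Invoke AI
    steps: int = 30          # illustrative default
    cfg_scale: float = 7.5   # illustrative default
    model: str = "default"   # placeholder model name

    def problems(self) -> List[str]:
        """Return a list of human-readable issues; empty means the settings look sane."""
        issues = []
        if not self.positive_prompt.strip():
            issues.append("positive prompt is empty")
        if self.num_images < 1:
            issues.append("number of images must be at least 1")
        if self.steps < 1:
            issues.append("steps must be at least 1")
        if self.cfg_scale <= 0:
            issues.append("CFG scale must be positive")
        return issues


settings = GenerationSettings(
    positive_prompt="a castle on a hill at sunset",
    negative_prompt="blurry, low quality",
)
print(settings.problems())
```

Roughly speaking, more steps mean slower but more refined images, and a higher CFG scale makes the result follow the prompt more strictly.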

1.

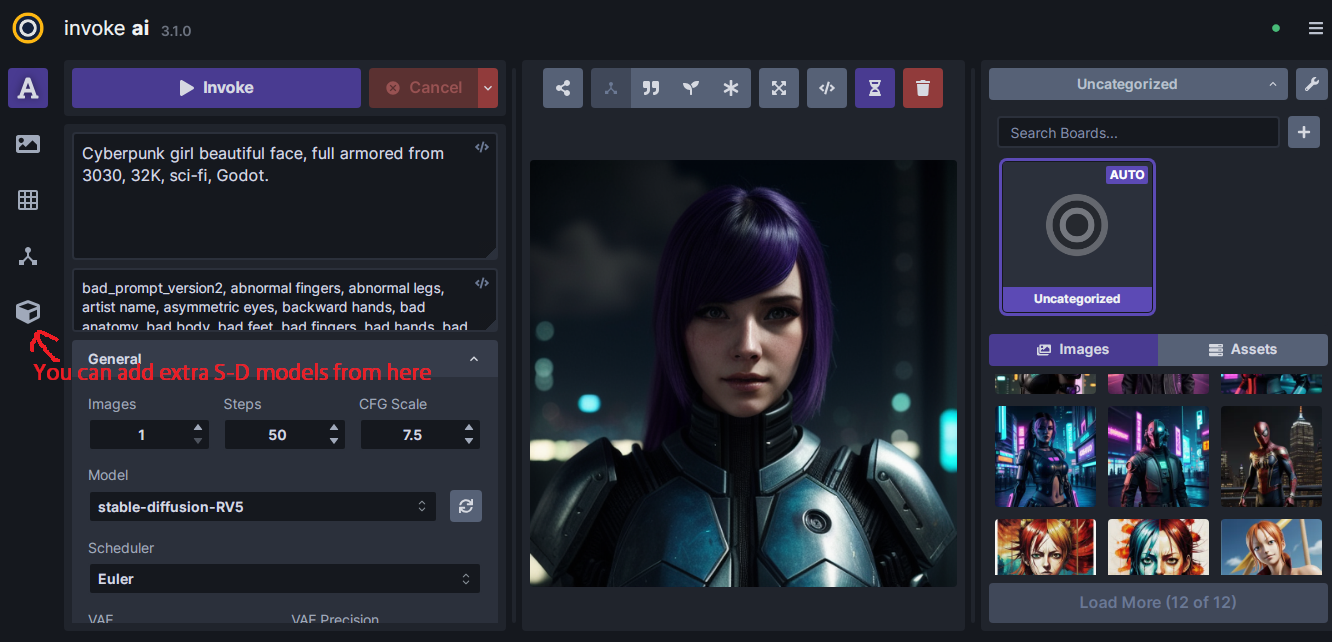

Now, if you want to install and use

your own model then just click on the Model

Manager icon (cube-like symbol) presented over the left panel of the

dashboard.

Select import models and

then on the model section text box

copy and paste the link of your model. We have used the pre-trained model link

from the CIVIT.AI website. Also, as an alternative to it, you can also use the Hugging Face platform to download and use the pre-trained models.

2. On the CIVIT.AI website, select whichever pre-trained model you like. Right-click its Download button, select Copy link address, and paste the link into the model section of Invoke AI.

3. Select Add Model. Downloading and installing the model takes some time, so wait a while. A pop-up message saying “Model added” will appear. To verify, you can also check the model section to see which model has been installed. Easy, isn’t it?
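Before pasting a copied link into the model section, it can help to check that it looks like a direct model link from one of the two sites mentioned above. The following is a rough sketch under our own assumptions: the host list and the function name `looks_like_model_link` are made up for illustration, the example URL is a placeholder rather than a real model, and Invoke AI itself may accept other sources.

```python
from urllib.parse import urlparse

# Assumption: only the two sites mentioned in this article are treated as known.
KNOWN_HOSTS = ("civitai.com", "huggingface.co")


def looks_like_model_link(url: str) -> bool:
    """Rough sanity check: HTTPS, a known host, and a non-empty path."""
    parsed = urlparse(url.strip())
    host = (parsed.hostname or "").lower()
    on_known_host = any(host == h or host.endswith("." + h) for h in KNOWN_HOSTS)
    has_path = parsed.path not in ("", "/")
    return parsed.scheme == "https" and on_known_host and has_path


# Placeholder link for illustration only; it does not point to a real model.
print(looks_like_model_link("https://civitai.com/api/download/models/12345"))
```

A link that fails this check is not necessarily wrong, but it is worth confirming that you copied the Download button's address and not the model page's address.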

Sometimes you may face errors while installing Invoke AI on a free Google Colab account. In that case, you need to switch to the paid version of Colab instead.

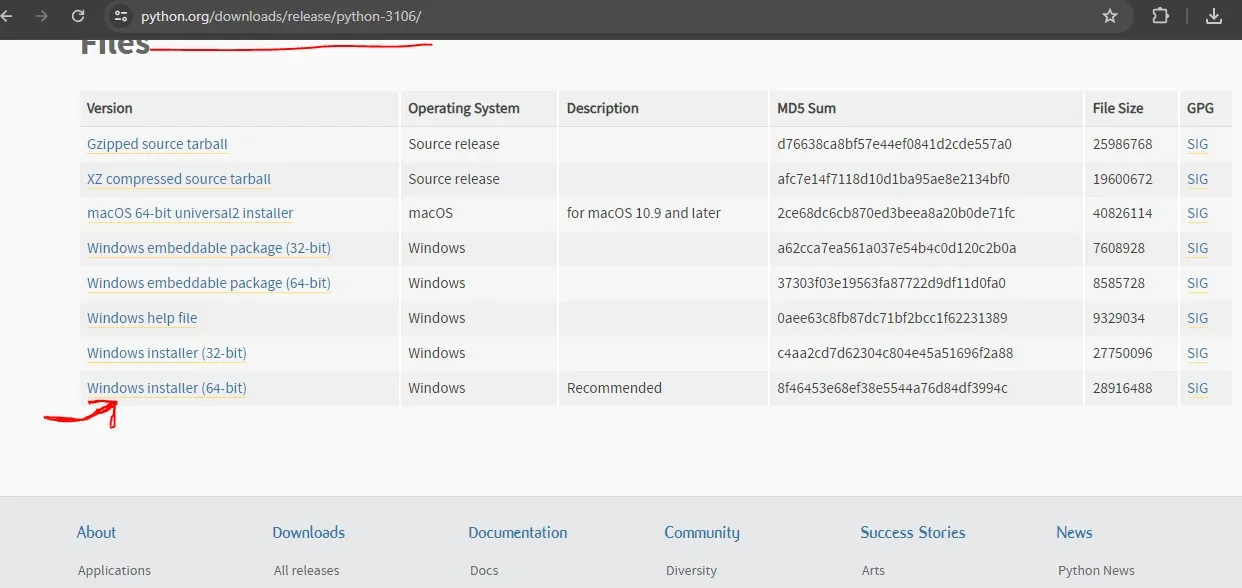

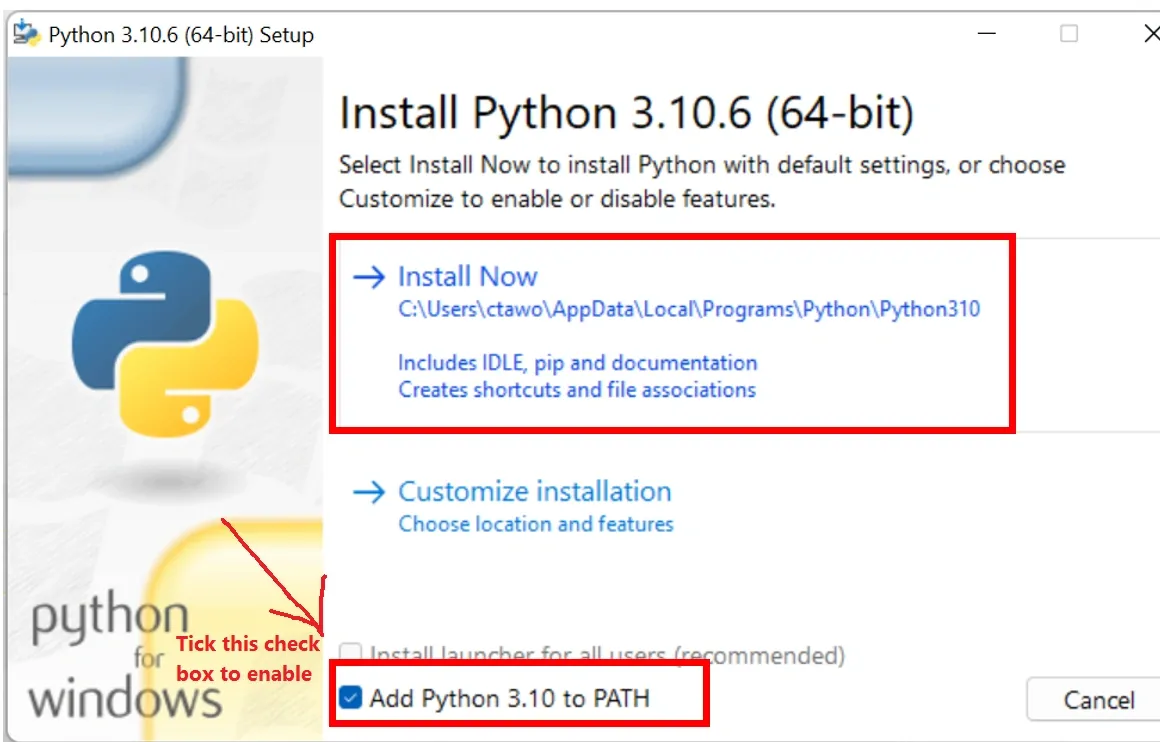

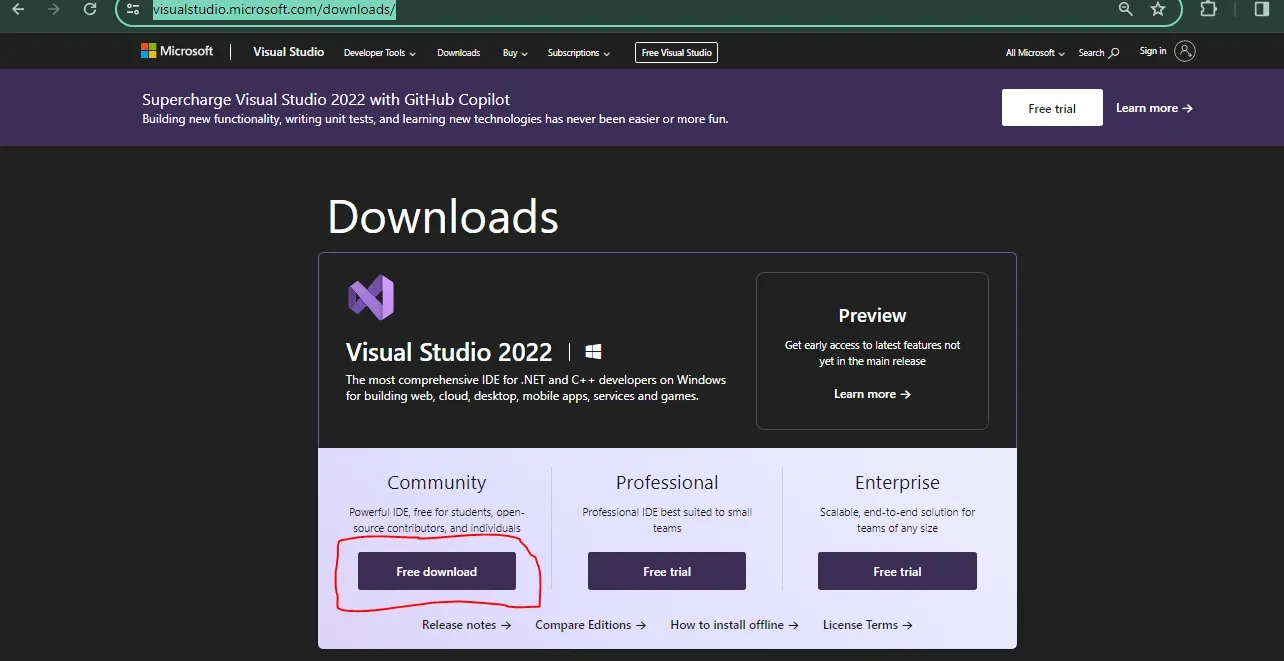

Steps to run Invoke AI on PC

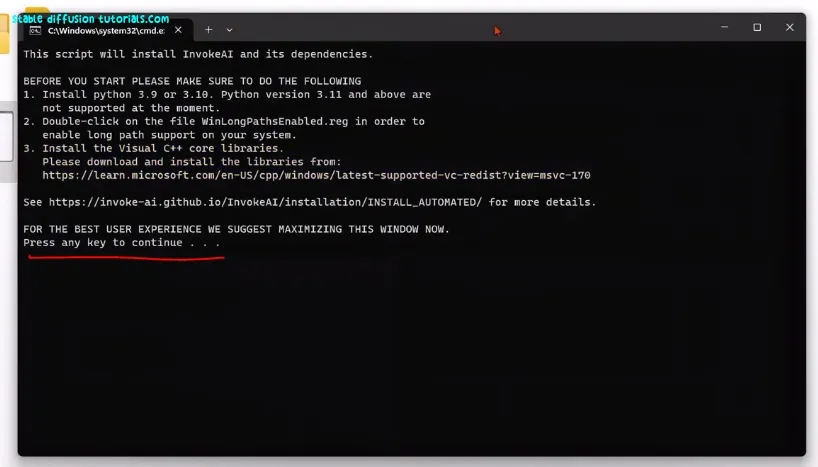

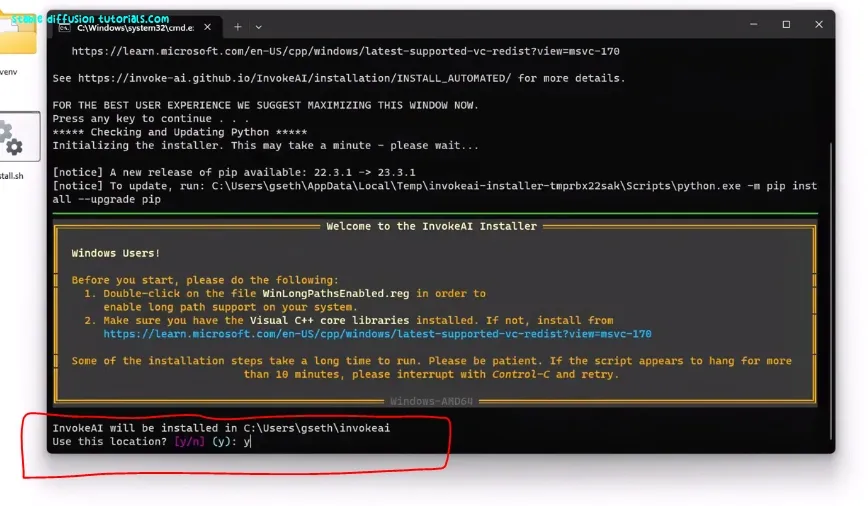

To run Invoke AI locally, keep in mind the requirements and recommendations below:

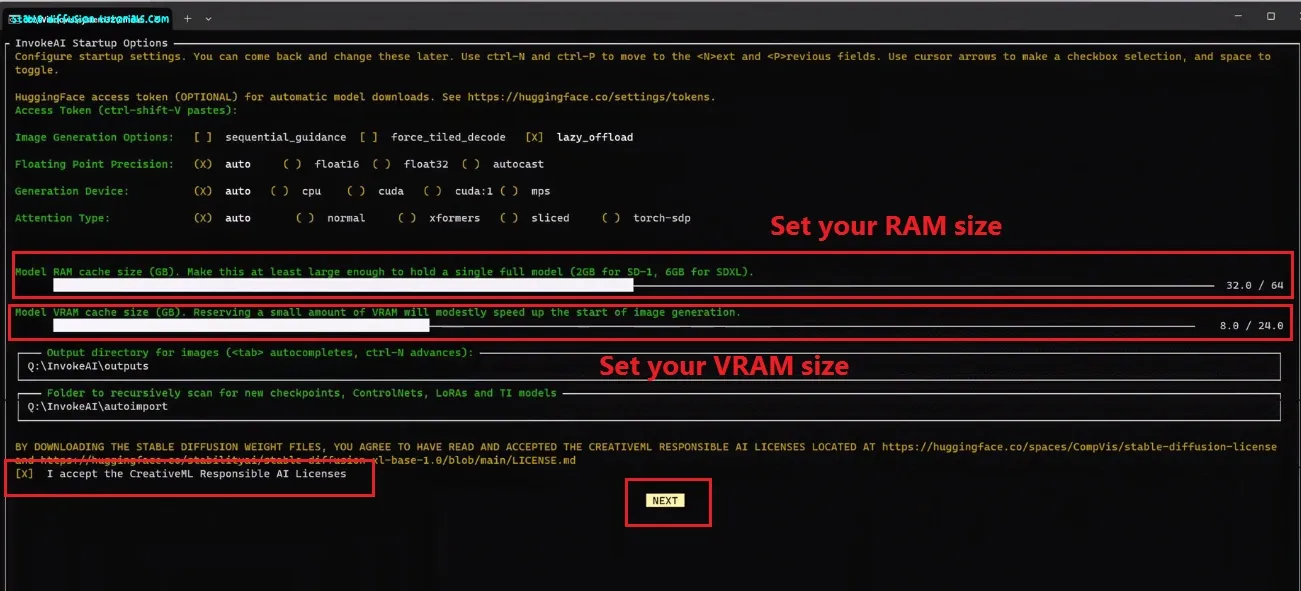

Requirements:

1. Graphics card – NVIDIA GPU with at least 4GB of VRAM. In our experience, 6GB works better for SDXL models. On a Mac, an M1 or M2 chip is recommended, though it is a little slower. For an AMD GPU, use 4GB of VRAM or more.

2. RAM – minimum 12GB.

3. Operating system – Windows, macOS, or Linux.

4. Disk space – 12GB needed for storing trained models.
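The requirements above can be turned into a quick self-check before installing. This is a minimal sketch: the thresholds come from the list above, but the function names are our own, and the VRAM and RAM figures have to be entered by hand because the Python standard library has no portable way to read them. Only free disk space is measured automatically.

```python
import shutil
from typing import List

# Thresholds taken from the requirements list above.
MIN_VRAM_GB = 4
MIN_RAM_GB = 12
MIN_DISK_GB = 12


def free_disk_gb(path: str = ".") -> float:
    """Free space on the drive containing `path`, in gigabytes."""
    return shutil.disk_usage(path).free / 1024**3


def unmet_requirements(vram_gb: float, ram_gb: float, disk_gb: float) -> List[str]:
    """Return a message for every requirement the given machine does not meet."""
    unmet = []
    if vram_gb < MIN_VRAM_GB:
        unmet.append(f"VRAM: {vram_gb}GB is below the {MIN_VRAM_GB}GB minimum")
    if ram_gb < MIN_RAM_GB:
        unmet.append(f"RAM: {ram_gb}GB is below the {MIN_RAM_GB}GB minimum")
    if disk_gb < MIN_DISK_GB:
        unmet.append(f"Disk: {disk_gb:.1f}GB free is below the {MIN_DISK_GB}GB needed")
    return unmet


# Example values for VRAM and RAM; replace them with your own machine's figures.
for message in unmet_requirements(vram_gb=6, ram_gb=16, disk_gb=free_disk_gb()):
    print(message)
```

If nothing is printed, the machine meets the minimums listed above for the values you entered.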

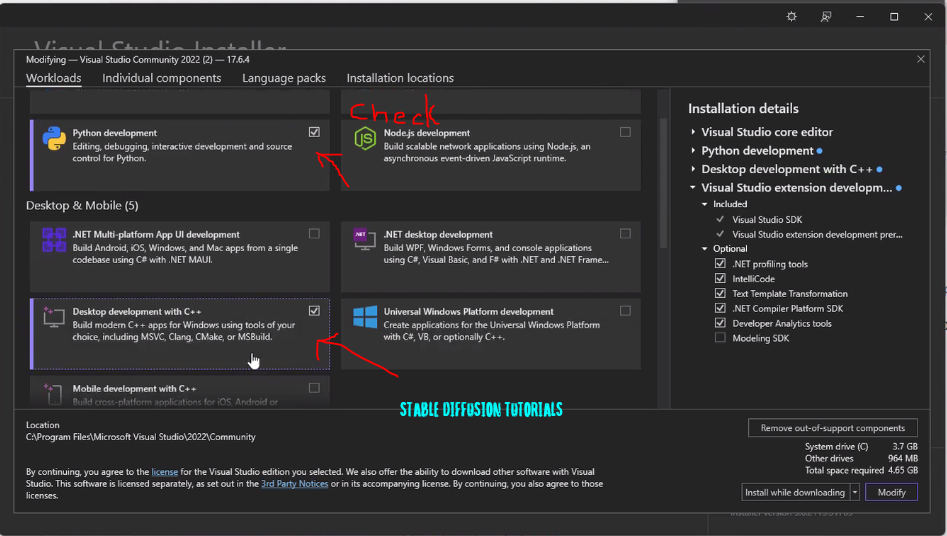

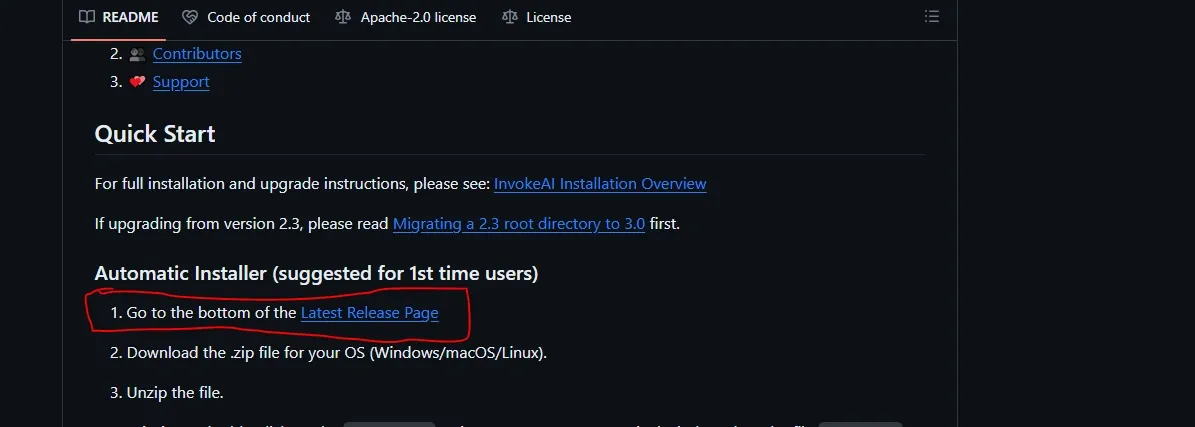

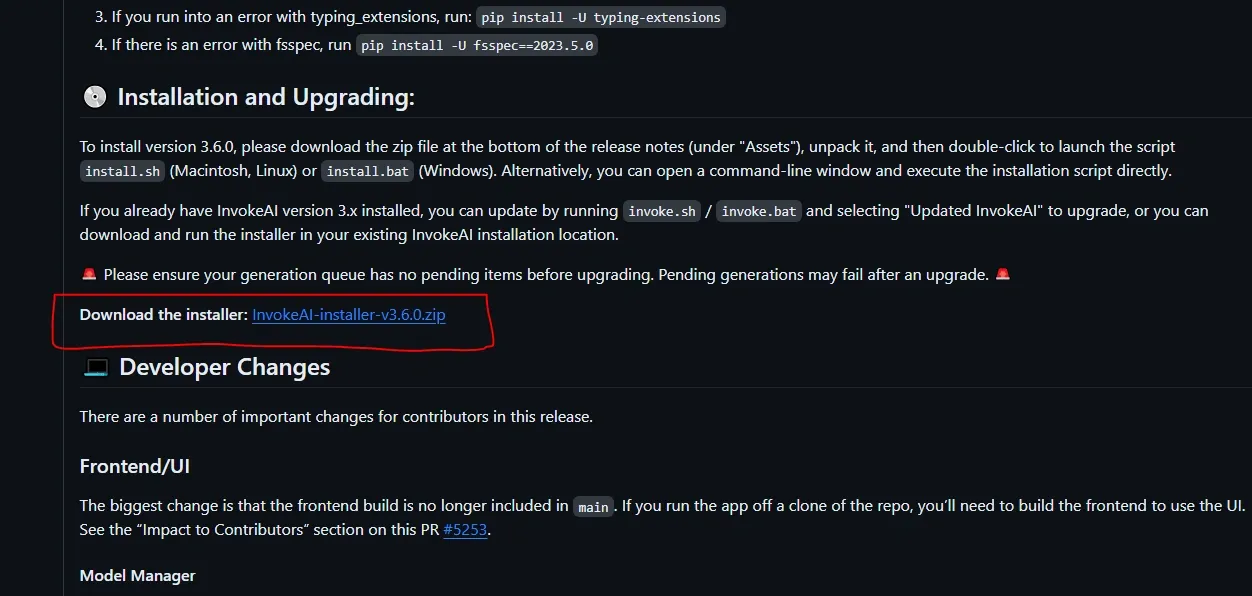

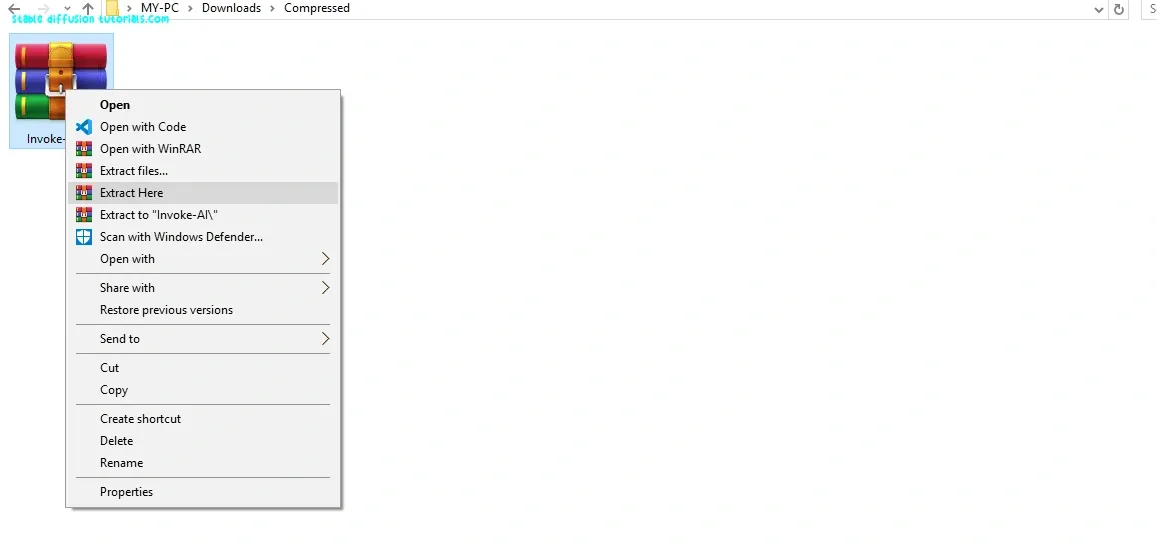

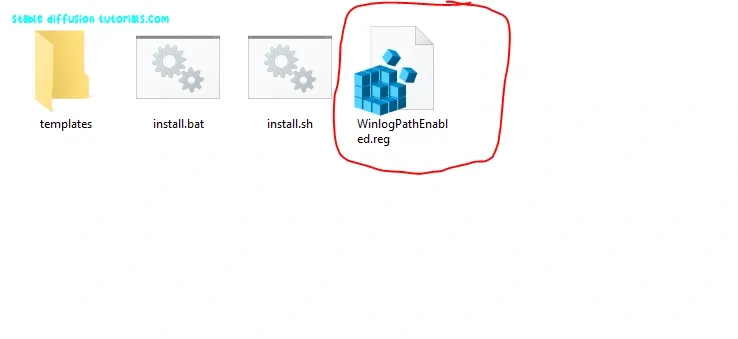

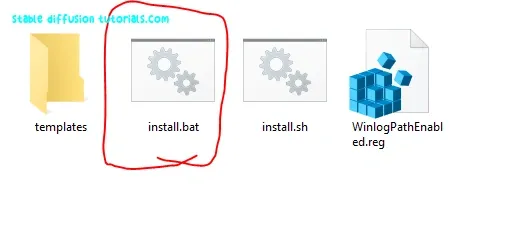

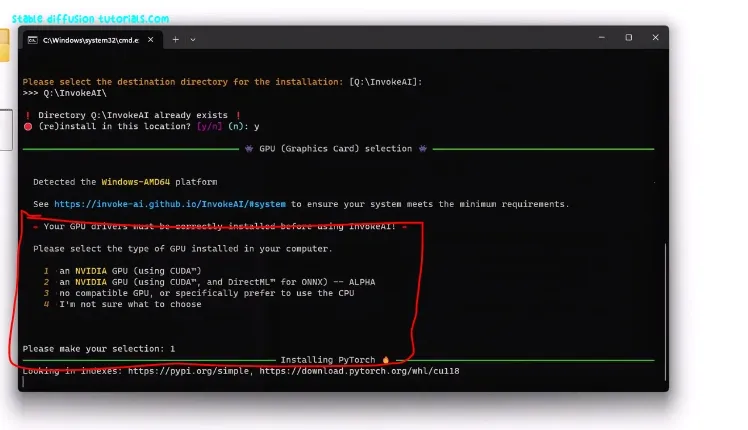

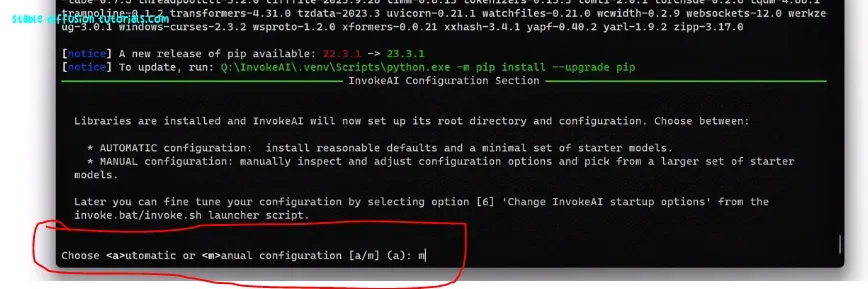

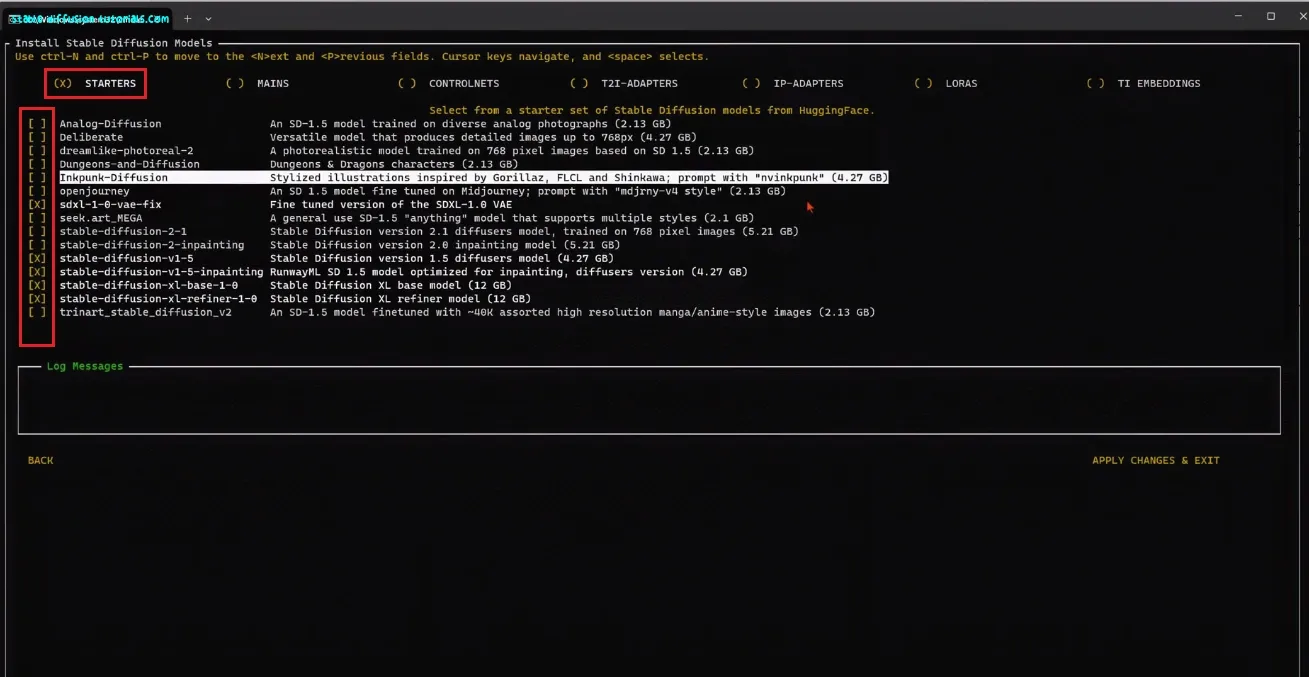

Installation:

Conclusion:

InvokeAI is one of the alternatives to the Automatic1111 WebUI and, like other WebUIs, is useful for generating images. As with the others, artists can download a model and start using it with just a few clicks. For more help, you can also join their Discord server.