The approach uses ControlNeXt-SVD v2 (released by DV Lab Research), which builds on Stable Video Diffusion (SVD) by Stability AI, to create consistent AI videos. The architecture closely follows the way AnimateAnyone works. The model has been trained on higher-quality videos with human pose alignment, which gives more realistic results, especially for dancing videos.

More Consistent VideoGen: ControlNeXt + SVD 😍 Now available on ComfyUI. 😌 Kijai Custom Nodes: 👇 https://t.co/MwP1keQ4nG pic.twitter.com/FPZihnfrQz (Stable Diffusion Tutorials, @SD_Tutorial, August 28, 2024)

The number of training and batch frames has been increased to 24 so the model adapts to video generation in a more generic fashion. The height and width have also been increased, to a resolution of 576 × 1024, to match the Stable Video Diffusion benchmarks. For more depth, see the relevant research papers.
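To make the 24-frame, 576 × 1024 setting concrete, here is a minimal sketch of the tensor shapes a single clip implies. The `ClipSpec` class is hypothetical and only for illustration. The 8× spatial downsampling and 4 latent channels are assumptions based on the Stable Diffusion family of VAEs, not something stated above.

```python
from dataclasses import dataclass

# Assumptions (not from the article): the VAE shrinks each spatial side by 8
# and produces 4 latent channels, as in the Stable Diffusion family.
VAE_SCALE = 8
LATENT_CHANNELS = 4


@dataclass(frozen=True)
class ClipSpec:
    """Hypothetical helper describing one video clip fed to the model."""
    frames: int = 24     # frame count mentioned in the article
    height: int = 576    # resolution mentioned in the article
    width: int = 1024

    def pixel_shape(self):
        # (frames, RGB channels, height, width)
        return (self.frames, 3, self.height, self.width)

    def latent_shape(self):
        # Both sides must divide evenly by the VAE scale factor.
        if self.height % VAE_SCALE or self.width % VAE_SCALE:
            raise ValueError("height and width must be multiples of 8")
        return (
            self.frames,
            LATENT_CHANNELS,
            self.height // VAE_SCALE,
            self.width // VAE_SCALE,
        )


spec = ClipSpec()
print(spec.pixel_shape())   # (24, 3, 576, 1024)
print(spec.latent_shape())  # (24, 4, 72, 128)
```

Under these assumptions each clip is denoised as 24 latent frames of 72 × 128, which is why longer clips or higher resolutions raise VRAM use quickly.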

Now, you can run this model on your machine with ComfyUI using custom nodes.

Installation:

1. First, install ComfyUI and update it by clicking “Update All” in the ComfyUI Manager.

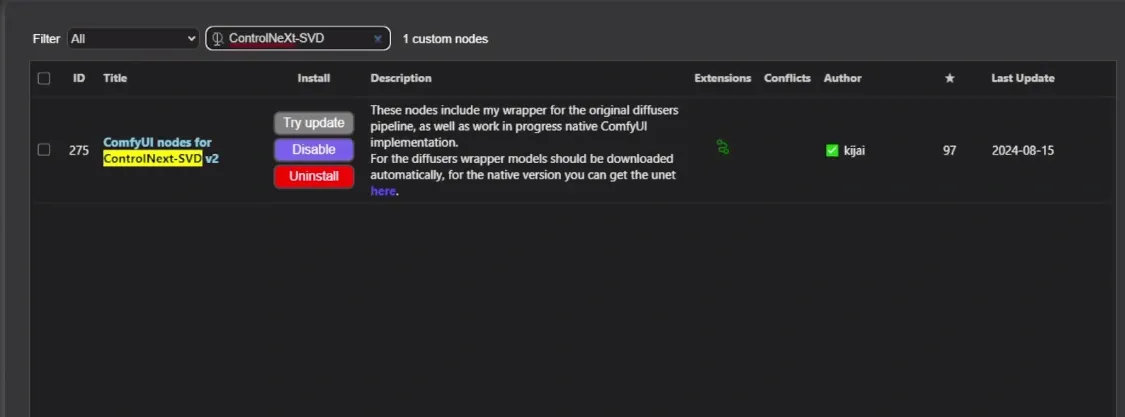

2. Install the custom nodes by Kijai. Open the ComfyUI Manager and click “Custom Nodes Manager”. Then search for “ControlNeXt-svd” by kijai and click the “Install” button.

3. Restart ComfyUI for the changes to take effect.

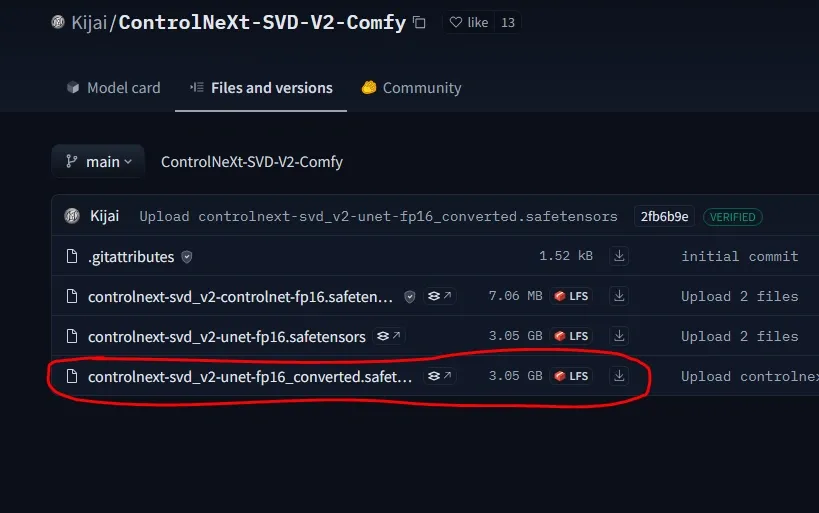

4. Download the model file (controlnext-svd_v2-unet-fp16_converted.safetensors) from Kijai’s Hugging Face repository and save it inside the “ComfyUI/models/unet” folder.

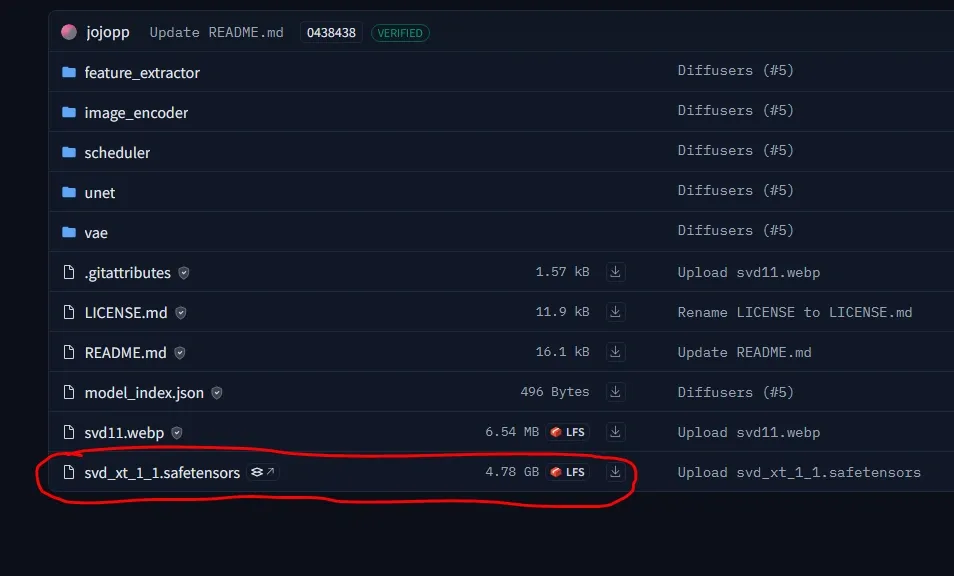

5. Next, download the SVD XT v1.1 model from Stability AI’s Hugging Face repository and put it inside the “ComfyUI/models/checkpoints/svd” folder.

6. Finally, you can find the workflows inside your “ComfyUI/custom_nodes/ComfyUI_ControlNeXt-SVD/example” folder.

This folder contains two workflows: one for ComfyUI and the other for diffusers. Just drag and drop one into ComfyUI.
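If a node later reports a missing model, it can help to confirm the files ended up where the steps above put them. The following is a minimal sketch, not part of the custom nodes: `check_install` and `list_workflows` are hypothetical helpers, the default `ComfyUI` root is an assumption about your install location, and the script assumes the SVD checkpoint is a `.safetensors` file and that workflows are stored as `.json`.

```python
from pathlib import Path

# Paths taken from the installation steps, relative to the ComfyUI root.
UNET_FILE = "models/unet/controlnext-svd_v2-unet-fp16_converted.safetensors"
SVD_DIR = "models/checkpoints/svd"
EXAMPLE_DIR = "custom_nodes/ComfyUI_ControlNeXt-SVD/example"


def check_install(comfy_root):
    """Return a list of human-readable problems (empty if all looks fine)."""
    root = Path(comfy_root)
    problems = []

    if not (root / UNET_FILE).is_file():
        problems.append(f"missing ControlNeXt UNet: {UNET_FILE}")

    # Assumption: the SVD XT v1.1 checkpoint is a .safetensors file; its exact
    # name is not given in the steps, so any such file is accepted here.
    svd_dir = root / SVD_DIR
    if not svd_dir.is_dir() or not any(svd_dir.glob("*.safetensors")):
        problems.append(f"no .safetensors checkpoint found in: {SVD_DIR}")

    if not (root / EXAMPLE_DIR).is_dir():
        problems.append(f"missing example workflow folder: {EXAMPLE_DIR}")

    return problems


def list_workflows(comfy_root):
    """Return the sorted names of workflow files (assumed to be .json)."""
    example_dir = Path(comfy_root) / EXAMPLE_DIR
    if not example_dir.is_dir():
        return []
    return sorted(p.name for p in example_dir.glob("*.json"))


if __name__ == "__main__":
    comfy_root = "ComfyUI"  # assumption: adjust to your own install path
    for problem in check_install(comfy_root):
        print("PROBLEM:", problem)
    print("workflows:", list_workflows(comfy_root))
```

Run it from the directory that contains your ComfyUI folder. An empty problem list only means the files exist in the expected places, not that they downloaded completely.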