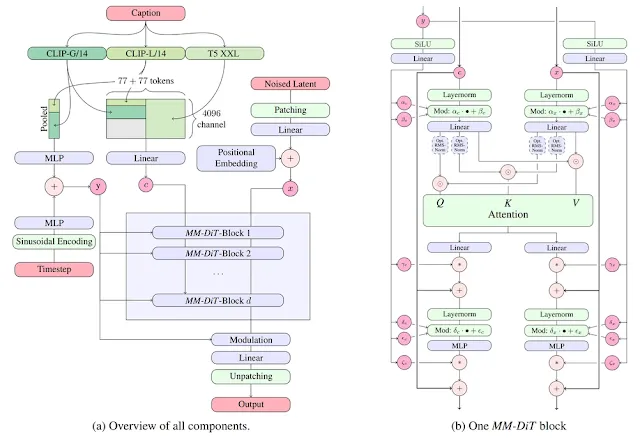

Stable Diffusion 3 was announced on February 22nd, 2024, and released on June 12th, 2024. StabilityAI describes it as its most improved text-to-image model so far. The model can render legible text inside images and understands prompts better than earlier Stable Diffusion models. It is built on a diffusion transformer architecture combined with flow matching, both of which are described in StabilityAI's research paper.
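To give a rough feel for the flow-matching idea, here is a minimal sketch. It assumes the straight-line ("rectified flow") path z_t = (1 - t) * x0 + t * noise, where t = 1 is pure noise and t = 0 is the clean image. The model learns to predict the velocity noise - x0, and sampling walks from noise back to data. This is not StabilityAI's code. The function names are hypothetical, and plain Python lists stand in for image tensors.

```python
def rectified_flow_pair(x0, noise, t):
    """Return the noisy point z_t and the velocity target for one sample.

    Assumes the straight-line path z_t = (1 - t) * x0 + t * noise,
    whose time derivative is noise - x0 (the same for every t).
    """
    z_t = [(1 - t) * a + t * e for a, e in zip(x0, noise)]
    v_target = [e - a for a, e in zip(x0, noise)]
    return z_t, v_target


def flow_matching_loss(v_pred, v_target):
    # Mean squared error between the predicted and target velocity
    return sum((p - q) ** 2 for p, q in zip(v_pred, v_target)) / len(v_target)


def euler_sample(velocity_fn, noise, steps=10):
    # Integrate from t = 1 (pure noise) toward t = 0 (data) with fixed Euler steps
    z = list(noise)
    dt = 1.0 / steps
    for i in range(steps):
        t = 1.0 - i * dt
        v = velocity_fn(z, t)
        # Step backward in time: z_{t - dt} = z_t - dt * v
        z = [zi - dt * vi for zi, vi in zip(z, v)]
    return z
```

If `velocity_fn` is an "oracle" that returns the exact `noise - x0` for one known sample, `euler_sample` recovers `x0`. In a real model, that oracle is replaced by the learned diffusion transformer, trained by minimizing `flow_matching_loss` over many images, noise draws, and values of t.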

The StabilityAI team has stated that training will stay within responsible boundaries across testing, evaluation, and deployment, so that the model is not misused by bad actors.

Credit: StabilityAI’s Hugging Face

Another major change is that StabilityAI has collaborated with AMD and NVIDIA, which should give users better optimization when running these models. Remember those early days when nobody knew how to deal with all the installation errors?

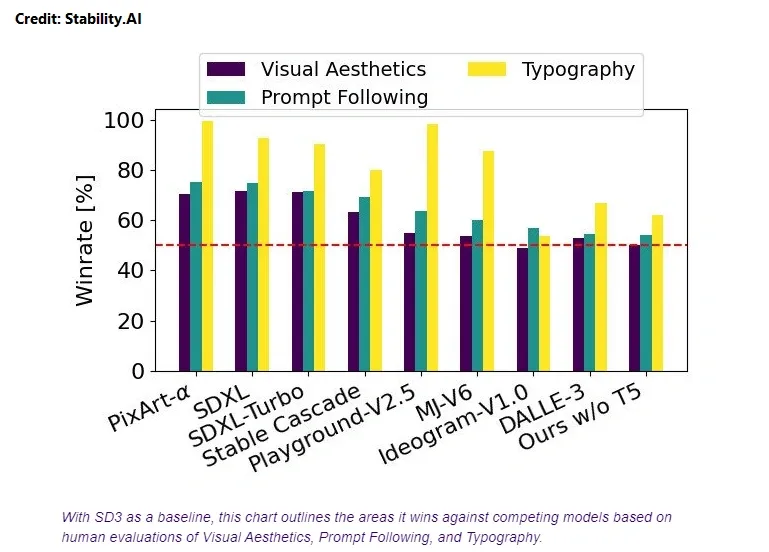

The chart above compares several image generation models on visual aesthetics, prompt following, and typography.

Credit: StabilityAI’s repository

The model has not yet been released for open community use, but it is accessible through the API for members. We have also made a tutorial on how to download and install Stable Diffusion 3 on a PC, so you should check that out as well.

Now, let's dive into testing, compare it with other popular image generation models, and see what we have.

Features:

- Improved performance in identifying multiple subjects

- Enhanced image quality

- Better prompt understanding

- Strong text generation within images

- Parameters ranging from 800 million to 8 billion

- Stronger safeguards against bad actors

- Suitable for both commercial and non-commercial use

Comparison with multiple image generation models

We used results from several other models to compare against Stable Diffusion 3: Midjourney v6, DALL·E 3, Stable Cascade, and Stable Diffusion XL (SDXL).

We took a set of Stable Diffusion 3 images, along with their prompts, from StabilityAI’s platform and used them for the comparisons.

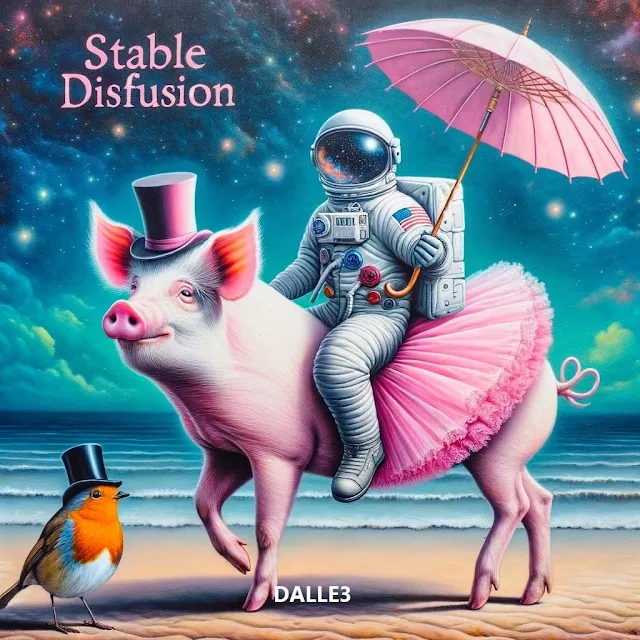

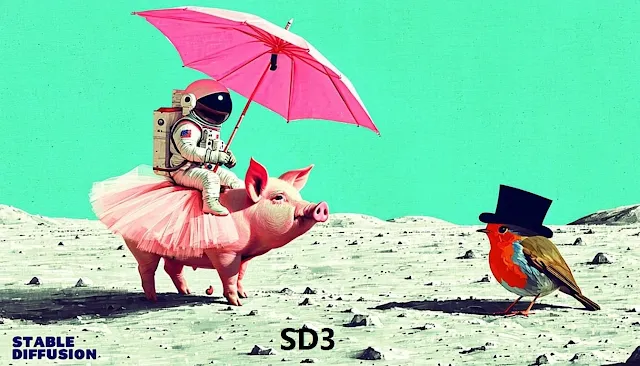

Example 1: Stable Diffusion 3 vs DALL·E 3 vs Midjourney v6

Here, we compare Stable Diffusion 3 with DALL·E 3 and Midjourney v6.

The result generated by Stable Diffusion 3 is much more impressive than those of the other two models. The most important aspect is the text, which is clearly legible and intelligently integrated into the artwork. This suggests that the model can handle multiple subjects along with their descriptions.

Example 2: Stable Diffusion 3 vs Stable Cascade vs SDXL