We have been using Stable Diffusion to generate incredible art for a long time now. However, generating images on a GPU with low VRAM takes a long time.

No problem, because there is a way to optimize the Automatic1111 WebUI that gives NVIDIA users faster image generation. TensorRT is an inference library from NVIDIA, usable from Python, that the extension uses to convert your model for faster inference. It is also commonly used to optimize the performance of large language models.

The extension is aimed primarily at those who don’t want to get into the complexities of C++ or CUDA. To use TensorRT as an extension, you first need to install it and generate the optimized engine.

Requirements:

-Only for Windows OS.

-NVIDIA GPUs

-NVIDIA Studio Driver, updated to the latest version

-Works specifically with Automatic1111

-Supported models: Stable Diffusion 1.5, Stable Diffusion 2.1, and LCM. SDXL and SDXL Turbo are also supported (12 GB or more VRAM recommended).
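
To see how much VRAM your card reports before trying SDXL, a minimal Python sketch like the one below can help. It assumes PyTorch is importable in the environment you run it from. The helper names `bytes_to_gib` and `report_vram` are made up for illustration and are not part of the extension. Note that the reported figure may come out slightly below the card's advertised size.

```python
def bytes_to_gib(num_bytes):
    """Convert a byte count to GiB, rounded to two decimals."""
    return round(num_bytes / 1024**3, 2)


def report_vram():
    """Return the total VRAM of GPU 0 in GiB, or None if it cannot be read."""
    try:
        import torch  # assumption: PyTorch is available in this environment
    except ImportError:
        return None
    if not torch.cuda.is_available():
        return None
    props = torch.cuda.get_device_properties(0)
    return bytes_to_gib(props.total_memory)


if __name__ == "__main__":
    vram = report_vram()
    if vram is None:
        print("Could not read VRAM (PyTorch or a CUDA GPU was not found).")
    else:
        print(f"GPU 0 reports {vram} GiB of VRAM.")
```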

Advantages:

-Adds the ability to convert the UNet module of your loaded model to TensorRT

-LoRA models (non-SDXL) are retained

-ADetailer works

-Regional Prompter works

Disadvantages:

-Hypernetworks are not tested

-ControlNets are not supported after conversion

It’s recommended to keep your NVIDIA driver up to date for optimal performance.

Conversion Process with TensorRT:

1. First, install Automatic1111 if you haven’t already.

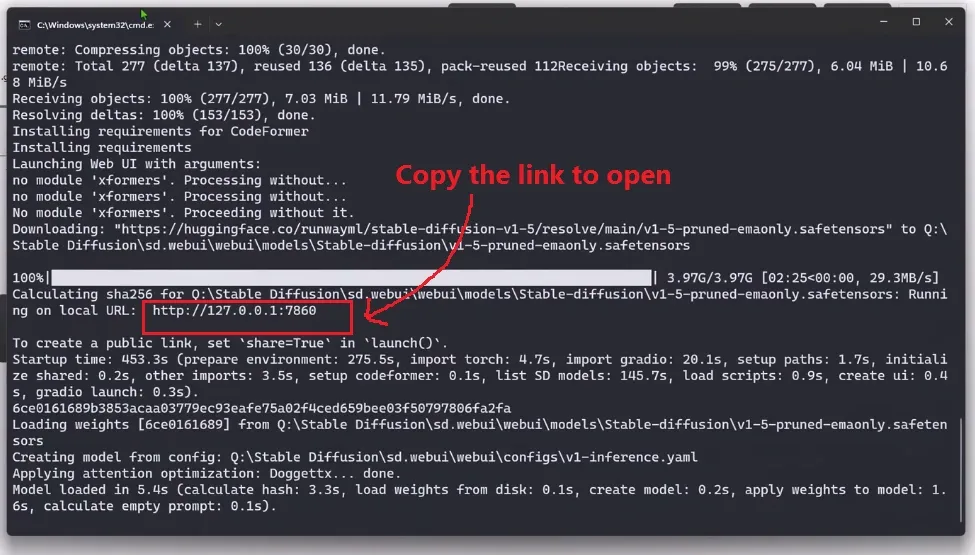

2. As usual, open Automatic1111 by double-clicking the “webui.bat” file, then copy the local URL and paste it into your browser.

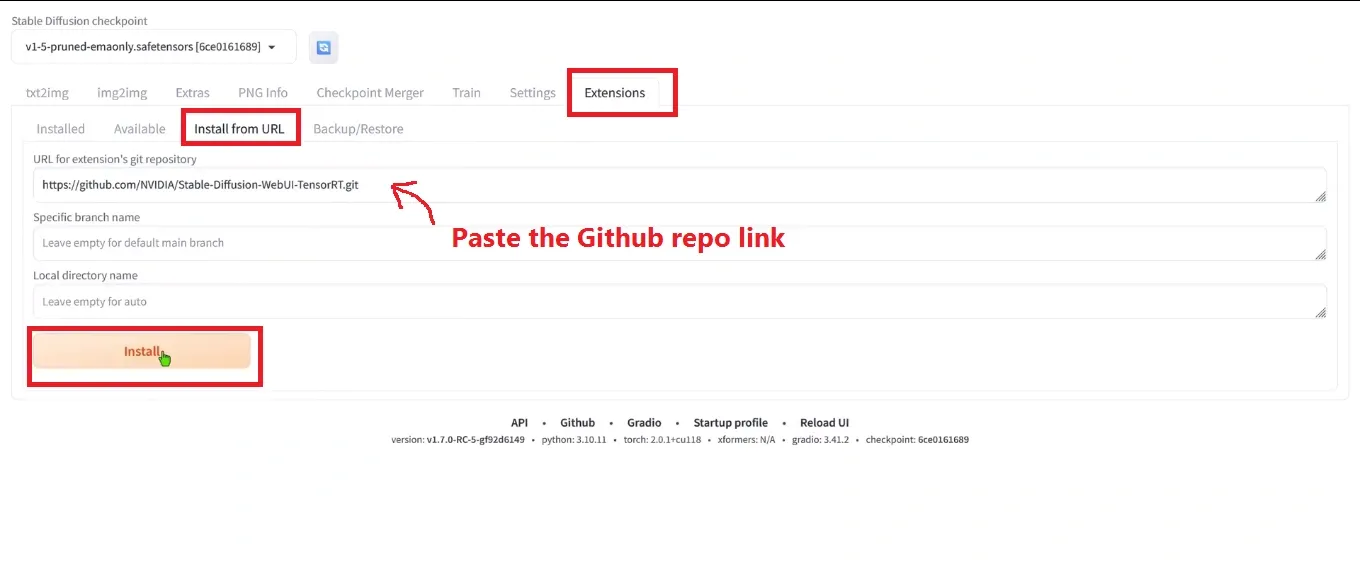

3. Go to the Extensions tab and select the “Install from URL” option.

4. Copy the link provided below and paste it into the “URL for extension’s git repository” field:

https://github.com/NVIDIA/Stable-Diffusion-WebUI-TensorRT.git

Then click the “Install” button. In our case, it took around 7 minutes.

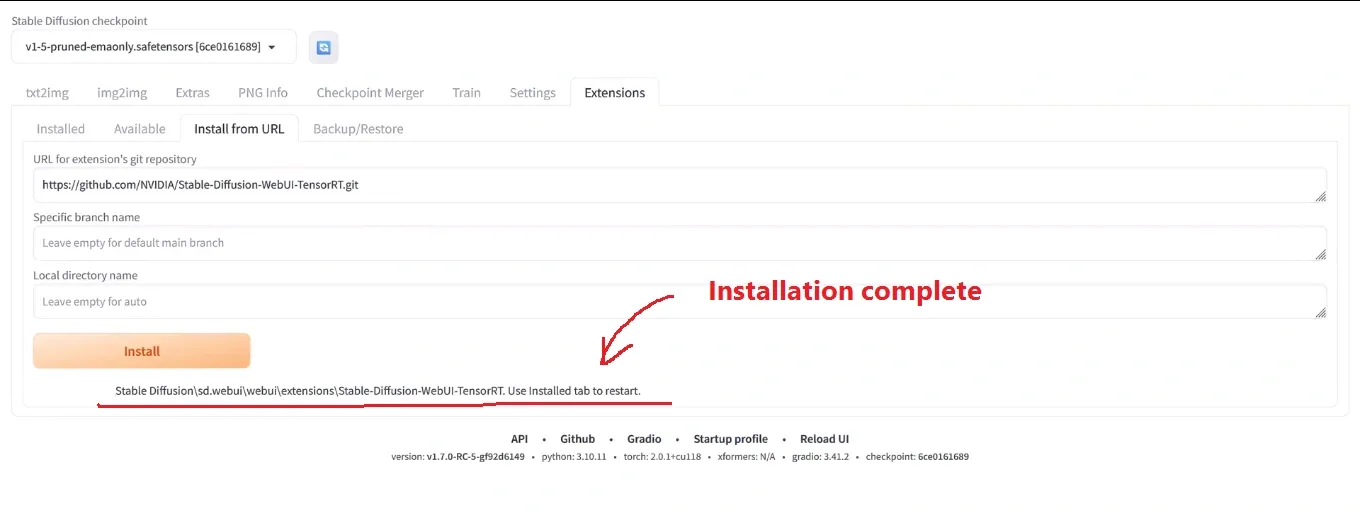

After completion, you will get a confirmation message like the one shown in the image above.

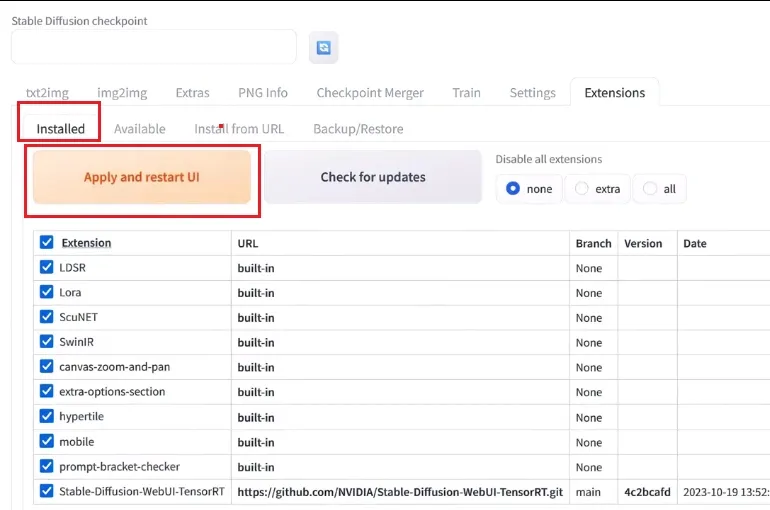

5. Once the extension is installed, restart Automatic1111 by clicking “Apply and restart UI”.

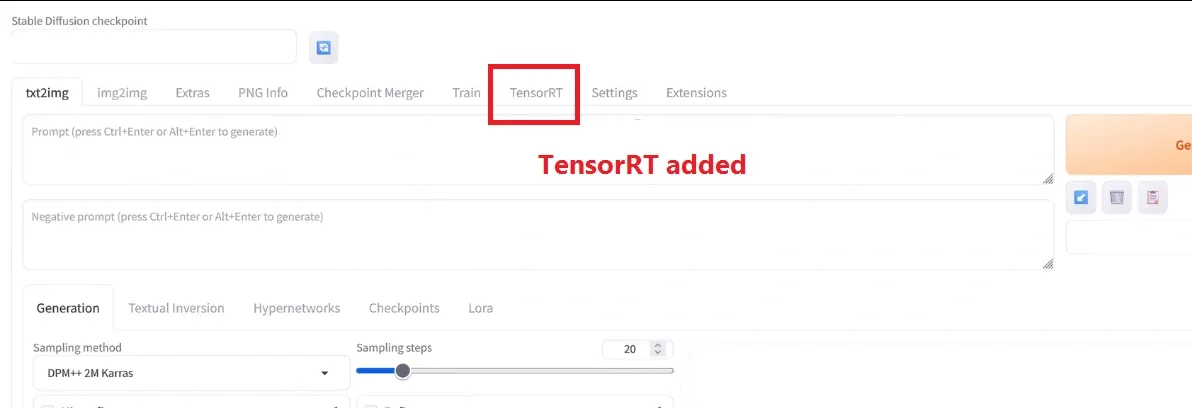

After restarting, you will see a new tab, “TensorRT”.

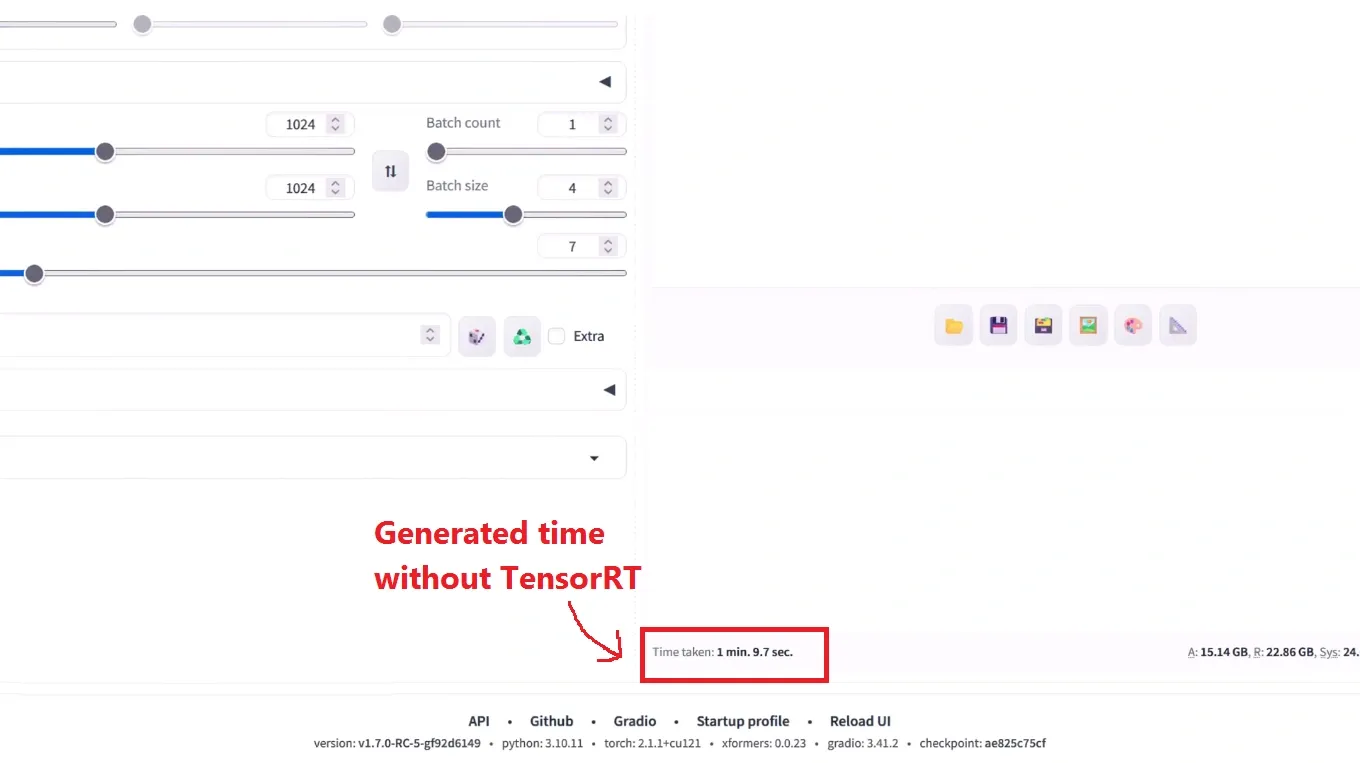

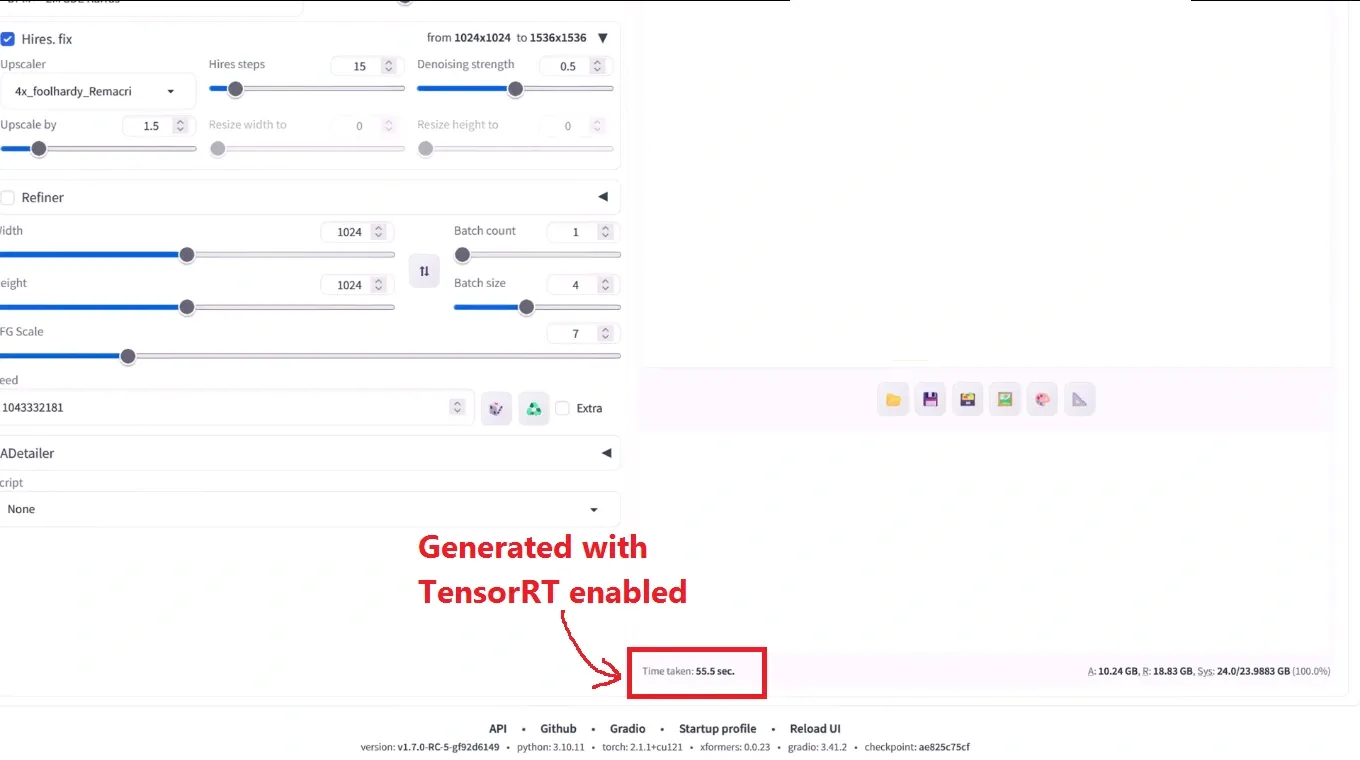

Let’s try generating with TensorRT enabled and disabled. For testing, we are using the same prompts with the SDXL base model.

Error fixing:

We ran into some errors while installing TensorRT. If you hit similar errors during installation, try the manual process explained below to fix them.

1. First, delete the “venv” (virtual environment) folder inside your Automatic1111 folder.

2. Go to the “extensions” folder and delete the “Stable-Diffusion-WebUI-TensorRT” folder as well.

3. Then go back to the WebUI folder and run the “webui.bat” file to reinstall the files. After that, close the command prompt.

4. Open a new command prompt in the WebUI folder: click the File Explorer address bar, type “cmd”, and press Enter (make sure you don’t open Windows PowerShell).

5. Now type the command to activate the virtual environment:

venv\Scripts\activate.bat

To confirm that you are in the correct environment, look for the environment name in round brackets () at the start of the prompt.
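
If you want a second check beyond the prompt prefix, a small Python sketch such as the following reports whether the interpreter on your path belongs to a virtual environment. It relies only on the standard library. The function name `in_virtualenv` is an illustrative choice, and the check assumes the environment was created with Python's built-in `venv` module.

```python
import sys


def in_virtualenv():
    """True when the running interpreter belongs to a virtual environment."""
    # In a venv, sys.prefix points at the venv folder while
    # sys.base_prefix still points at the base Python installation.
    return sys.prefix != sys.base_prefix


if __name__ == "__main__":
    print("Interpreter:", sys.executable)
    print("Inside a virtual environment:", in_virtualenv())
```

Save it under any file name you like and run it with `python` from the same command prompt. The printed interpreter path should point inside the “venv” folder.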

Now type the next command to make sure any existing TensorRT library is uninstalled:

pip uninstall tensorrt

Then type this to clear the pip cache:

pip cache purge

Now copy and paste the command below to install TensorRT:

pip install --pre --extra-index-url https://pypi.nvidia.com tensorrt==9.0.1.post11.dev4

Next, uninstall this library, because it causes errors that prevent tensorrt from installing:

pip uninstall -y nvidia-cudnn-cu11

Next, install the ONNX Runtime library by typing this command:

pip install onnxruntime

Now install the onnx library by typing:

pip install onnx

Finally, type this command:

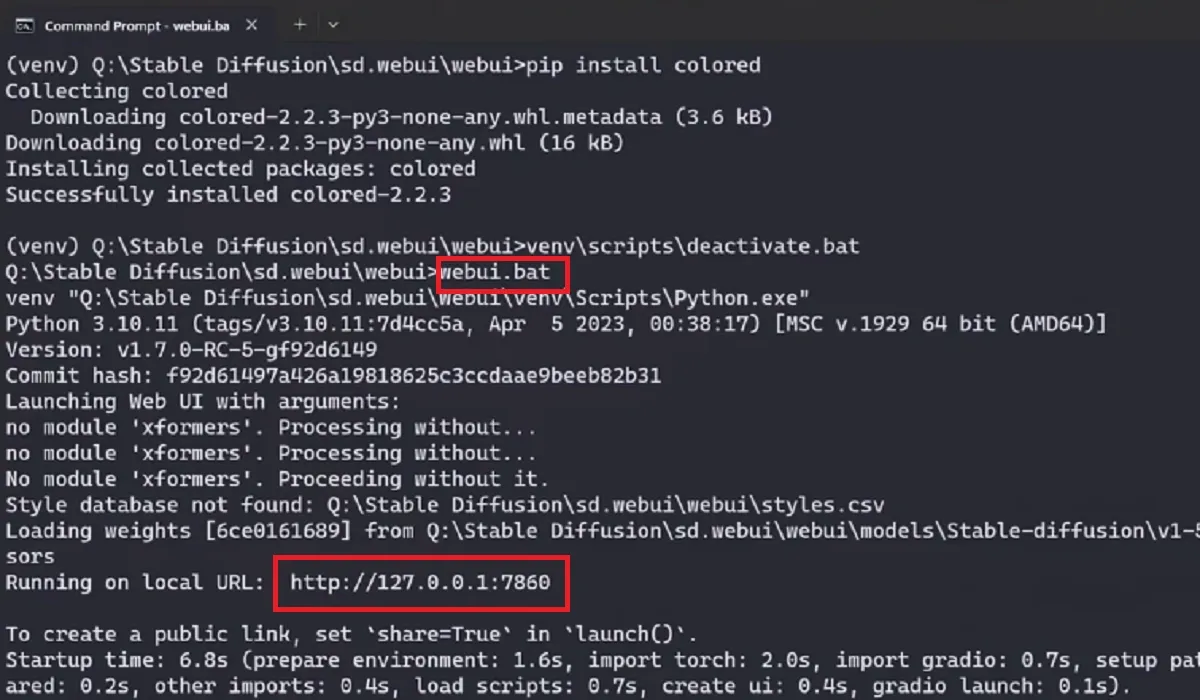

pip install colored
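
Before leaving the environment, it can be worth confirming that the packages actually landed in it. The sketch below uses only the standard library's `importlib.metadata`. The package list is taken from the pip commands above, and the helper name `installed_versions` is made up for illustration.

```python
from importlib import metadata

# Distribution names taken from the pip commands in this guide.
PACKAGES = ["tensorrt", "onnxruntime", "onnx", "colored"]


def installed_versions(names):
    """Map each package name to its installed version, or None if missing."""
    versions = {}
    for name in names:
        try:
            versions[name] = metadata.version(name)
        except metadata.PackageNotFoundError:
            versions[name] = None
    return versions


if __name__ == "__main__":
    for name, version in installed_versions(PACKAGES).items():
        print(f"{name}: {version or 'NOT INSTALLED'}")
```

Run it while the virtual environment is still active. Any package reported as not installed points to the pip step that needs repeating.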

Now deactivate the environment by typing the following, where “venv” is the environment name (yours may be different):

venv\Scripts\deactivate.bat

Start Automatic1111 by typing the following, then open it in the browser:

webui.bat

6. Go to the Extensions tab and select the “Install from URL” option.

7. Copy the link provided below and paste it into the “URL for extension’s git repository” field:

https://github.com/NVIDIA/Stable-Diffusion-WebUI-TensorRT.git

Then click the “Install” button. Installation will take a few minutes. After completion, you will get a confirmation message asking you to restart Automatic1111 for the change to take effect.

8. Now, just restart Automatic1111 and you will see a new tab, “TensorRT”.

Extra Tip:

Update Xformers:

pip3 install -U xformers --index-url https://download.pytorch.org/whl/cu121

Now, to install the updated PyTorch build with CUDA support:

pip3 install torch torchvision torchaudio --index-url https://download.pytorch.org/whl/cu121
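
After updating, you may want to confirm which PyTorch build ended up in the environment. This is a minimal sketch, assuming it is run with the virtual environment active. The function name `torch_cuda_summary` is hypothetical. With the cu121 wheels above, the CUDA build should show as 12.1.

```python
def torch_cuda_summary():
    """Return PyTorch/CUDA details as a dict, or None if PyTorch is missing."""
    try:
        import torch
    except ImportError:
        return None
    return {
        "torch_version": torch.__version__,
        # CUDA version PyTorch was built against; None for CPU-only builds.
        "cuda_build": torch.version.cuda,
        "cuda_available": torch.cuda.is_available(),
    }


if __name__ == "__main__":
    summary = torch_cuda_summary()
    if summary is None:
        print("PyTorch is not installed in this environment.")
    else:
        for key, value in summary.items():
            print(f"{key}: {value}")
```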

Conclusion:

NVIDIA TensorRT is really one of the best ways to optimize the Stable Diffusion WebUI. You will lose some extensions, like ControlNet, and for SDXL or SDXL Turbo models it’s recommended to use a higher-end GPU configuration.