Lip sync is one of the more tedious tasks for video generation models. Many AI models can generate video, but accurate lip sync has remained a challenge for all of them. Wav2Lip is a technique built for exactly this: you give it an audio track and a target video, and you get a lip-synced video back in a few minutes.

Lip sync going better: Wav2Lip-GFPGAN 😯😀

Combine Lip Sync AI and Face Restoration AI to get ultra high quality videos.

Colab notebook attached for testing:👇🧐https://t.co/07KHVs51XZ pic.twitter.com/dnQEvRxtER

— Stable Diffusion Tutorials (@SD_Tutorial) March 28, 2024

The interesting part is that it can be used either as an Automatic1111 extension or as a ComfyUI node. Let’s see how to install Wav2Lip and create a lip-synced video.

Installation in Automatic1111/ForgeUI:

1. First, install an up-to-date version of Automatic1111 or ForgeUI.

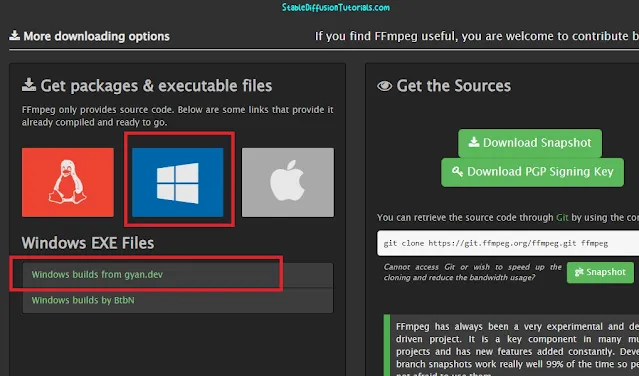

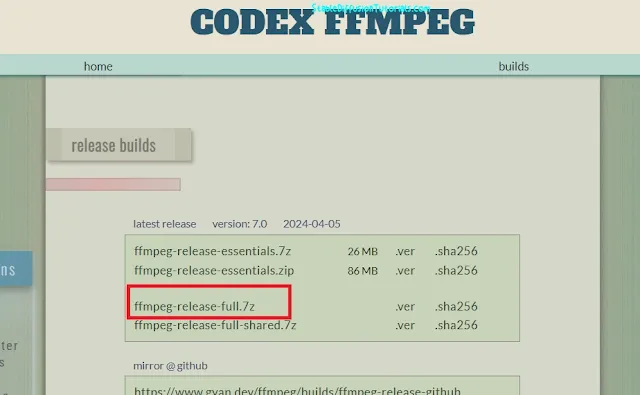

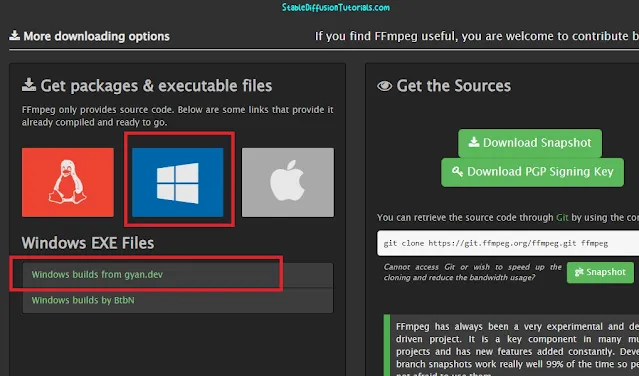

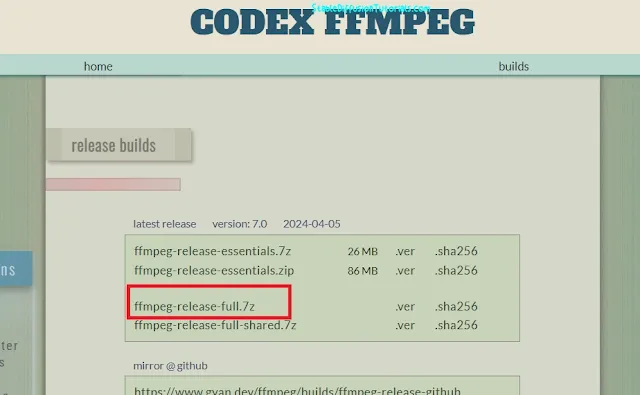

2. Download ffmpeg from its official website, choosing the build that matches your operating system (Windows in our case). Extract the archive with 7-Zip or WinRAR, then locate the ffmpeg executable inside the “bin” folder.

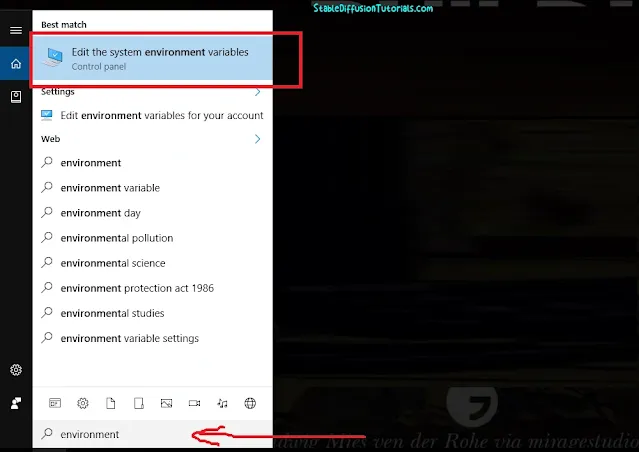

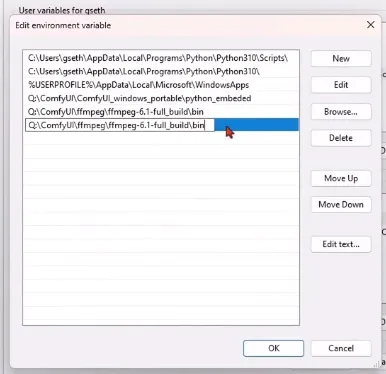

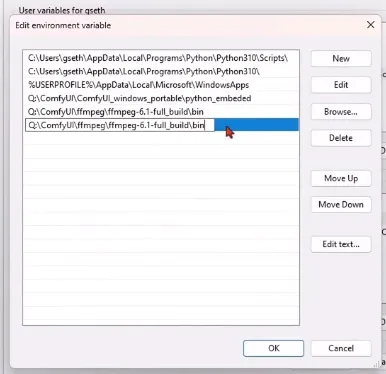

Now search for “environment variables” from the taskbar, open the environment variables settings, and add the full path of that “bin” folder to the Path variable.

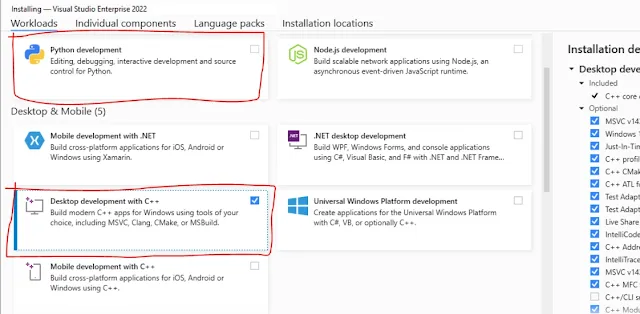
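Once the variable is saved, it is worth confirming that ffmpeg is actually reachable before moving on. The snippet below is a minimal sketch using only the Python standard library; the helper names `find_tool` and `tool_version` are made up for this illustration. Run it from a newly opened terminal, since terminals that were already open do not see the updated Path.

```python
import shutil
import subprocess
from typing import Optional


def find_tool(name: str = "ffmpeg") -> Optional[str]:
    """Return the full path of `name` if it is reachable through PATH, else None."""
    return shutil.which(name)


def tool_version(path: str) -> str:
    """Run `<tool> -version` and return the first line it prints (or "")."""
    result = subprocess.run([path, "-version"], capture_output=True, text=True)
    lines = result.stdout.splitlines()
    return lines[0] if lines else ""


ffmpeg_path = find_tool("ffmpeg")
if ffmpeg_path is None:
    print("ffmpeg not found: add its bin folder to Path and open a new terminal")
else:
    print(f"ffmpeg found at {ffmpeg_path}")
    print(tool_version(ffmpeg_path))
```

If the script reports that ffmpeg is not found, recheck that the Path entry points to the “bin” folder itself rather than to its parent folder.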

3. Download Visual Studio and, during installation, enable the “Python” and “C++ package” options.

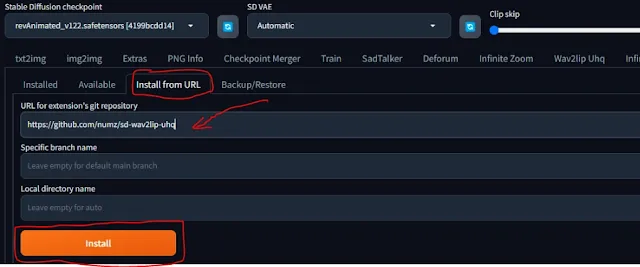

4. The extension is not listed among Automatic1111’s available extensions, so it has to be installed from its URL. Copy the URL of the Wav2Lip repository provided below.

https://github.com/numz/sd-wav2lip-uhq

Open Automatic1111/ForgeUI. Go to the “Extensions” tab, select the “Install from URL” option, and paste the copied URL.

Then click the “Install” button to start the process. Downloading the prerequisites takes some time, and you can follow the progress in the command line terminal.

5. After installation, go to the “Installed” tab and click the “Apply and restart UI” button so the change takes effect.

6. A new “Wav2Lip UHQ” tab now appears inside your Stable Diffusion WebUI.

7. Next, download the models below, place each one in the listed folder, and rename the downloaded files to the names shown (in particular, the s3fd model must be named “s3fd.pth”):

| Model | Download | Installation folder path |
|---|---|---|
| Wav2Lip (highly accurate) | Link | `extensions\sd-wav2lip-uhq\scripts\wav2lip\checkpoints\` |
| Wav2Lip + GAN (slightly inferior, but better visual quality) | Link | `extensions\sd-wav2lip-uhq\scripts\wav2lip\checkpoints\` |
| s3fd (pre-trained face detection model) | Link | `extensions\sd-wav2lip-uhq\scripts\wav2lip\face_detection\detection\sfd\s3fd.pth` |
| Landmark predictor (Dlib 68-point face landmark prediction) | Link | `extensions\sd-wav2lip-uhq\scripts\wav2lip\predicator\shape_predictor_68_face_landmarks.dat` |
| Face swap model (model used by face swap) | Link | `extensions\sd-wav2lip-uhq\scripts\faceswap\model\inswapper_128.onnx` |

8. Enjoy face swapping and lip-synced AI video generation.
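If the new tab reports a missing model, the file placement from step 7 can be checked with a short script. This is a sketch only: the folder layout is my reading of the paths listed in step 7, the function name `missing_models` is hypothetical, and I am assuming the two Wav2Lip checkpoints are `.pth` files because the table gives only their folder, not their file names.

```python
from pathlib import Path

# Locations relative to the WebUI root, taken from the table in step 7.
EXTENSION_DIR = Path("extensions", "sd-wav2lip-uhq", "scripts")
CHECKPOINT_DIR = EXTENSION_DIR / "wav2lip" / "checkpoints"
REQUIRED_FILES = [
    EXTENSION_DIR / "wav2lip" / "face_detection" / "detection" / "sfd" / "s3fd.pth",
    EXTENSION_DIR / "wav2lip" / "predicator" / "shape_predictor_68_face_landmarks.dat",
    EXTENSION_DIR / "faceswap" / "model" / "inswapper_128.onnx",
]


def missing_models(webui_root: Path) -> list[str]:
    """Describe every model from step 7 that is not where it should be."""
    problems = []
    checkpoint_dir = webui_root / CHECKPOINT_DIR
    # Assumption: Wav2Lip checkpoints use the .pth extension.
    if not any(checkpoint_dir.glob("*.pth")):
        problems.append(f"no .pth checkpoint in {checkpoint_dir}")
    for relative in REQUIRED_FILES:
        if not (webui_root / relative).is_file():
            problems.append(f"missing {webui_root / relative}")
    return problems


# Run from the WebUI root folder; nothing is printed when everything is in place.
for problem in missing_models(Path(".")):
    print(problem)
```

The script only checks that the files exist under the expected names. It does not verify that the downloads are complete or uncorrupted.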

Installation in ComfyUI:

1. First, you should have the ComfyUI installed on your machine.

2. Download ffmpeg, which is used for reading and creating videos. Extract the archive with 7-Zip or WinRAR, then locate the ffmpeg executable inside the “bin” folder.

3. Search for “environment variables” from the taskbar and add the path of ffmpeg’s “bin” folder to the Path environment variable.

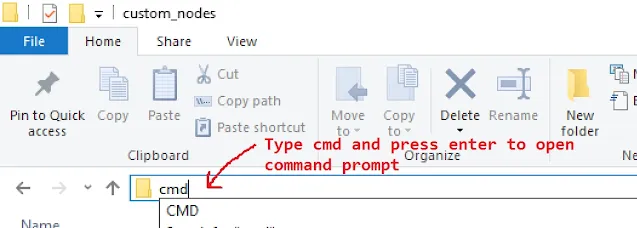

4. Open ComfyUI’s “custom_nodes” folder, type “cmd” in the File Explorer address bar to open a command prompt in that folder, and clone the repository with the command below:

git clone https://github.com/ShmuelRonen/ComfyUI_wav2lip.git

5. Next, from inside the cloned “ComfyUI_wav2lip” folder, run the command below to download and install the required dependencies:

pip install -r requirements.txt
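Steps 4 and 5 can also be scripted, which helps when setting up several machines. The sketch below only wraps the two commands shown above; the function names `build_steps` and `run_steps` are hypothetical. It calls pip through `sys.executable`, so it assumes the script is started with the same Python interpreter that ComfyUI uses. Portable ComfyUI builds ship their own interpreter, so adjust accordingly.

```python
import subprocess
import sys
from pathlib import Path

REPO_URL = "https://github.com/ShmuelRonen/ComfyUI_wav2lip.git"
REPO_DIR = "ComfyUI_wav2lip"  # folder name git derives from the URL


def build_steps(custom_nodes: Path) -> list[tuple[list[str], Path]]:
    """Return (command, working directory) pairs for steps 4 and 5."""
    return [
        (["git", "clone", REPO_URL], custom_nodes),
        (
            [sys.executable, "-m", "pip", "install", "-r", "requirements.txt"],
            custom_nodes / REPO_DIR,
        ),
    ]


def run_steps(custom_nodes: Path) -> None:
    """Run each step in order and stop at the first failure."""
    for command, cwd in build_steps(custom_nodes):
        print(f"[{cwd}] {' '.join(command)}")
        subprocess.run(command, cwd=cwd, check=True)


# Example call (the install location is an assumption; use your own path):
# run_steps(Path("ComfyUI") / "custom_nodes")
```

Because `check=True` raises on a non-zero exit code, a failed clone stops the script before pip runs in a folder that does not exist.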

6. Download the Wav2Lip_gan model file from the Hugging Face repository.

7. Save the downloaded model into the “custom_nodes\ComfyUI_wav2lip\Wav2Lip\checkpoint” folder.

8. Then restart ComfyUI for the changes to take effect.

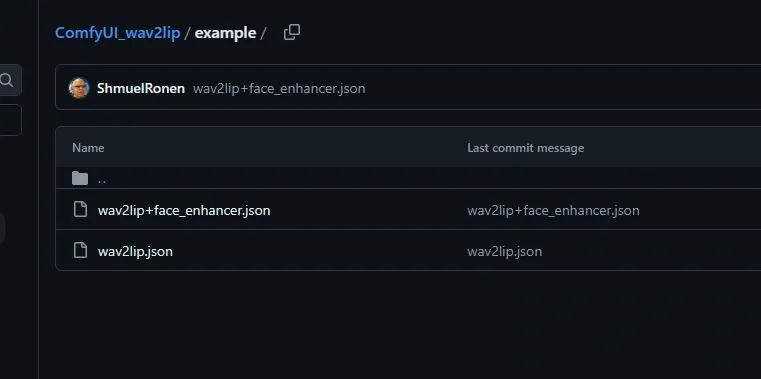

9. The basic workflow is available inside the “example” folder of the cloned repository, or you can download it from the official repository.

Simply drag and drop it into ComfyUI.

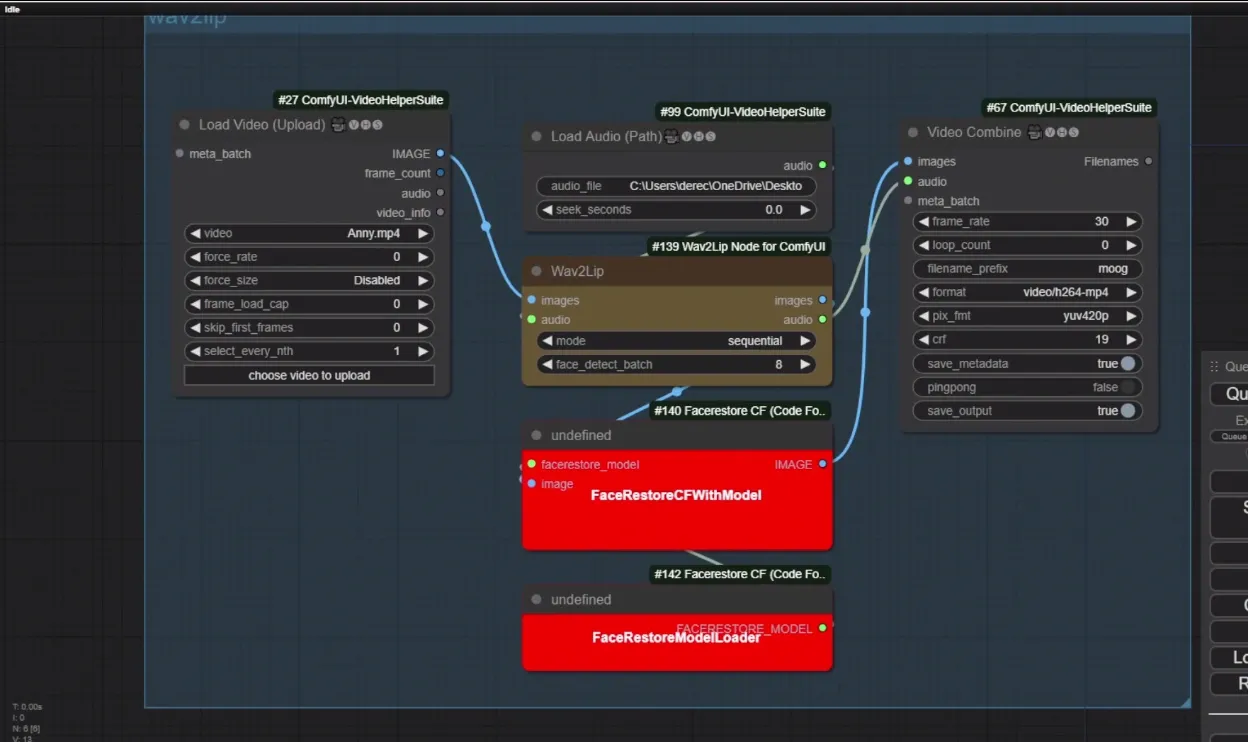

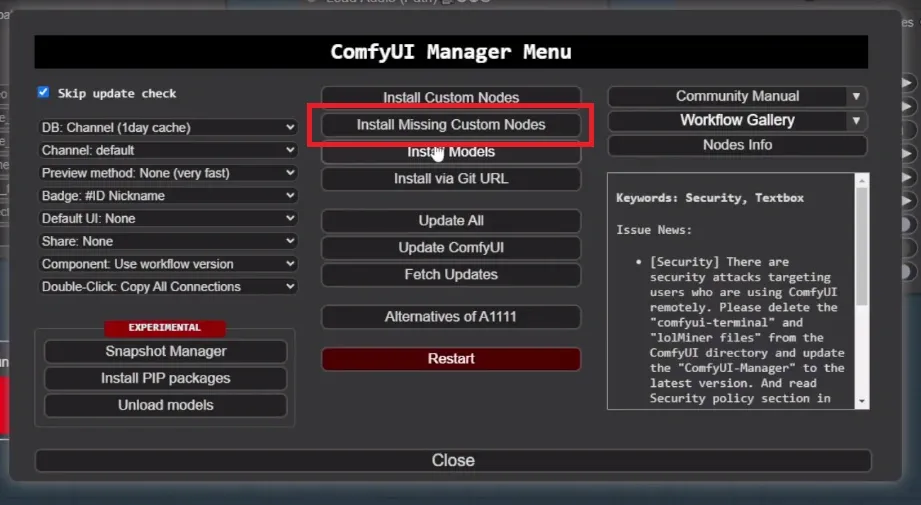

10. After opening the workflow, some missing nodes will be shown in red. Install them from the ComfyUI Manager by selecting the “Install missing custom nodes” option.

11. Then click the install button for each listed node. Once they are installed, restart ComfyUI.

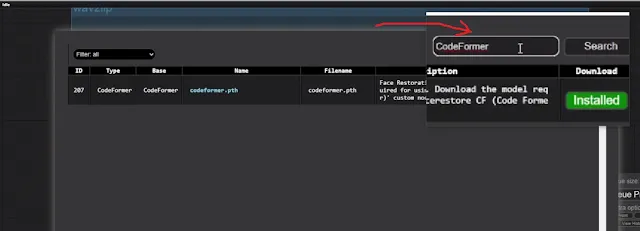

12. Finally, install “Codeformer” for extra support by selecting “Install models” in the ComfyUI Manager.

Now, simply load your audio and target video, and within a few minutes, you will get your lip-synced video.