Flux is one of the most powerful models we have experimented with. The problem is that its output is so refined that it is hard to get generations that look realistic. This can be solved by fine-tuning the Flux model with LoRA. Follow our tutorial on installing Flux Dev or Flux Schnell to learn more about the base models.

Beyond realism, LoRA can be used to train models in any style you want, such as anime or 3D art, which we discussed in our LoRA model training tutorial.

You will need an NVIDIA RTX 3000/4000 series card with at least 24GB of VRAM.
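One way to confirm the VRAM requirement before installing anything is to ask the driver. The sketch below assumes the `nvidia-smi` tool that ships with the NVIDIA driver is on your PATH. The function names and the loose 24,000 MiB threshold are our own illustration and are not part of ai-toolkit.

```python
import shutil
import subprocess

# Loose threshold (assumption): some 24GB cards report slightly under 24576 MiB.
MIN_VRAM_MIB = 24_000


def parse_vram_mib(smi_output: str) -> list[int]:
    """Turn one-number-per-line nvidia-smi output into a list of MiB values."""
    values = []
    for line in smi_output.splitlines():
        text = line.strip()
        if text.isdigit():  # skip blank lines and entries such as "[N/A]"
            values.append(int(text))
    return values


def query_vram_mib() -> list[int]:
    """Return total memory per GPU in MiB, or [] if nvidia-smi is unavailable."""
    if shutil.which("nvidia-smi") is None:
        return []
    try:
        result = subprocess.run(
            ["nvidia-smi", "--query-gpu=memory.total",
             "--format=csv,noheader,nounits"],
            capture_output=True, text=True, check=True,
        )
    except (OSError, subprocess.CalledProcessError):
        return []
    return parse_vram_mib(result.stdout)


def has_enough_vram(vram_mib: list[int], minimum: int = MIN_VRAM_MIB) -> bool:
    """True when at least one GPU meets the minimum."""
    return any(mib >= minimum for mib in vram_mib)
```

Calling `has_enough_vram(query_vram_mib())` returns `False` both when the card is too small and when no NVIDIA driver is found, so treat a `False` as a prompt to check by hand.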

This method supports both Flux Dev (released under a non-commercial license) and Flux Schnell (released under Apache 2.0). Keep in mind that the license of the base model you train on also applies to the model you train from it.

Installation:

0. Install Git from the official page, and make sure you have a Python version greater than 3.10 installed.
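If you are unsure whether both prerequisites are in place, a quick check like the following can help. It is a minimal sketch: the helper names are hypothetical, and we treat Python 3.10 itself as acceptable, which is an assumption you can tighten by changing the `minimum` tuple.

```python
import shutil
import sys


def python_is_new_enough(version=sys.version_info, minimum=(3, 10)) -> bool:
    """True when the (major, minor) part of `version` is at least `minimum`."""
    return tuple(version[:2]) >= tuple(minimum)


def git_is_installed() -> bool:
    """True when a `git` executable can be found on PATH."""
    return shutil.which("git") is not None


def report_prerequisites() -> str:
    """Summarize both checks in one readable line."""
    python_ok = "ok" if python_is_new_enough() else "too old"
    git_ok = "found" if git_is_installed() else "missing"
    return f"Python: {python_ok}, Git: {git_ok}"
```

Running `print(report_prerequisites())` with the same interpreter you plan to use for the virtual environment gives a one-line summary.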

1. Create a new directory anywhere, with a meaningful name. We named ours “Flux_training”, but you can choose whatever you want.

2. Open your new folder, click the address bar of the folder window, type “cmd”, and press Enter to open a command prompt at that location.

3. In the command prompt, copy and paste the following commands one by one to install the required Python libraries:

(a) For Windows users:

This is used to clone the repository:

git clone https://github.com/ostris/ai-toolkit.git

Move into the ai-toolkit directory:

cd ai-toolkit

Updating submodule:

git submodule update --init --recursive

Creating a virtual environment:

python -m venv venv

Activating virtual environment:

.\venv\Scripts\activate

Installing torch:

pip install torch torchvision --index-url https://download.pytorch.org/whl/cu121

Installing required dependencies:

pip install -r requirements.txt

Now, close the command prompt.

(b) For Linux users:

git clone https://github.com/ostris/ai-toolkit.git

cd ai-toolkit

git submodule update --init --recursive

python3 -m venv venv

source venv/bin/activate

pip3 install torch

pip3 install -r requirements.txt

Setup Model API:

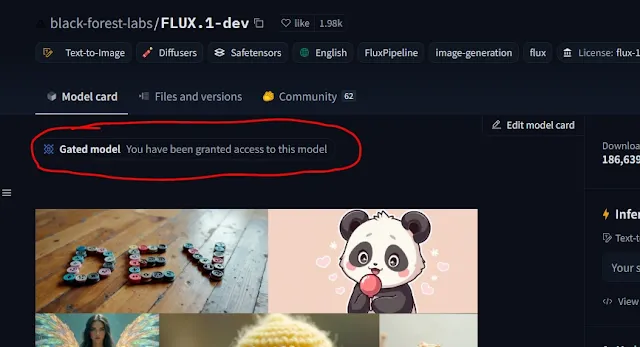

1. First, accept the terms and conditions on Hugging Face; otherwise the setup will not work.

To do this, log in to Hugging Face and accept the terms and conditions of the Flux Dev version.

After accepting, you will see the message shown above.

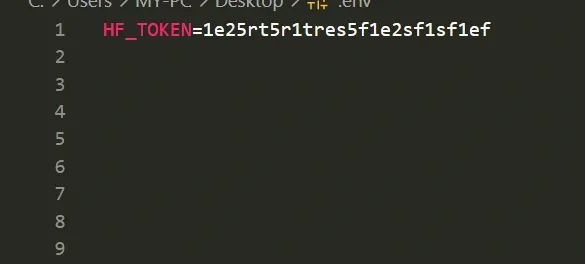

2. Then create your API key from Hugging Face.

3. Move into the “ai-toolkit” folder. Using any editor, create a file named “.env” (nothing before the dot). Copy your API key from the Hugging Face dashboard and paste it into the .env file in the form “HF_TOKEN=your_key_here”, as illustrated in the image above. The API key shown there is for illustration only; you need to add your own.
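A common slip on Windows is saving the file as “.env.txt” or leaving the key line empty. The sketch below reads a simple KEY=value file and returns the value for a given key so you can confirm the token is actually there. `read_env_value` is a hypothetical helper of ours and is not how ai-toolkit itself loads the file.

```python
from pathlib import Path


def read_env_value(env_path, key):
    """Return the value stored for `key` in a KEY=value file, or None."""
    path = Path(env_path)
    if not path.is_file():
        return None
    # "utf-8-sig" also copes with the byte-order mark some editors add.
    for raw_line in path.read_text(encoding="utf-8-sig").splitlines():
        line = raw_line.strip()
        if not line or line.startswith("#") or "=" not in line:
            continue
        name, _, value = line.partition("=")
        if name.strip() == key:
            # Drop optional surrounding quotes; treat an empty value as missing.
            cleaned = value.strip().strip('"').strip("'")
            return cleaned or None
    return None
```

For example, `read_env_value("ai-toolkit/.env", "HF_TOKEN")` returning `None` means the file is missing, misnamed, or does not contain a token yet.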

Workflow:

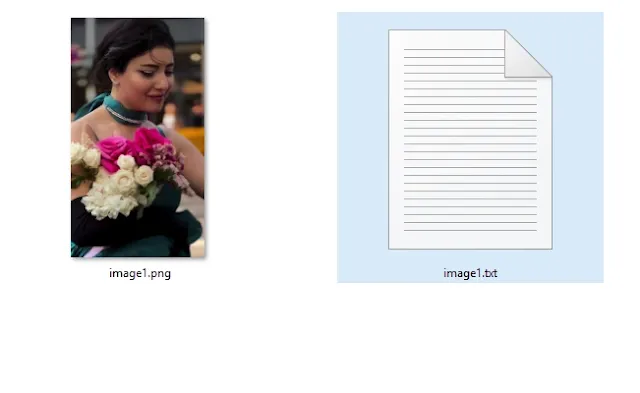

1. Prepare your dataset: images in the style you want to learn, each with a matching text description, stored together in a new folder. Use only JPEG/JPG or PNG images. You need at least 10-15 images for good results.

We want to generate art with realism, so we saved realistic images inside “D:/downloads/images” (you can choose any location). You do not need to resize the images. All the images will be automatically handled by the loader.

2. Now create a text file for each image that describes the image in detail. These descriptions influence the model you train, so be creative and descriptive. Save them in the same folder as the images.

For instance: If the image is “image1.png” then the text file should be “image1.txt“.
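With more than a handful of images it is easy to miss a caption. The naming rule above can be checked with a short script like this one. It is a sketch with a hypothetical helper name, and it only looks at the three image extensions this tutorial mentions.

```python
from pathlib import Path

IMAGE_SUFFIXES = {".jpg", ".jpeg", ".png"}


def images_missing_captions(dataset_dir):
    """List image file names that have no same-named .txt file beside them."""
    folder = Path(dataset_dir)
    missing = []
    for image in sorted(folder.iterdir()):
        if image.suffix.lower() not in IMAGE_SUFFIXES:
            continue  # ignore captions and any other files
        if not image.with_suffix(".txt").is_file():
            missing.append(image.name)
    return missing
```

Calling `images_missing_captions("D:/downloads/images")` on our example folder should return an empty list once every image has its text file.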

You can also add the [trigger] placeholder to a text file in this form. For instance: “[trigger] holding a sign that says ‘I LOVE PROMPTS’”.

If you don’t know what a trigger word is, go through our LoRA training tutorial.
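As we understand it, the toolkit swaps the [trigger] placeholder for the trigger word set in the config file at training time. The sketch below only imitates that substitution with a plain string replace so you can preview what a caption will look like. `expand_trigger` and the sample word `p3r5on` are hypothetical and are not taken from the toolkit's code.

```python
def expand_trigger(caption: str, trigger_word: str) -> str:
    """Preview a caption with every [trigger] placeholder filled in."""
    return caption.replace("[trigger]", trigger_word)


if __name__ == "__main__":
    sample = "[trigger] holding a sign that says 'I LOVE PROMPTS'"
    print(expand_trigger(sample, "p3r5on"))
```

Captions without the placeholder pass through unchanged, so the helper is safe to run over the whole dataset.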

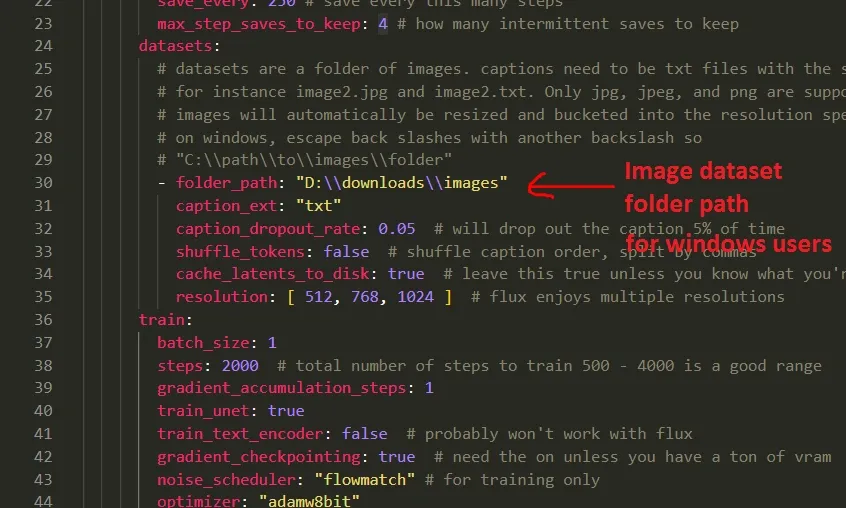

3. Now navigate to the “config/examples” folder and find the “train_lora_flux_24gb.yaml” file. Copy it, move back up to the “config” folder, and paste it there.

Then rename it to any meaningful name. We renamed ours to “train_Flux_dev-Lora.yaml”.
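If you prefer to script the copy-and-rename step, it can be done in one go. This is a minimal sketch: `copy_example_config` is a hypothetical helper, and it assumes the folder layout described in this step (“config/examples” inside the toolkit folder).

```python
import shutil
from pathlib import Path


def copy_example_config(toolkit_dir, new_name,
                        example_name="train_lora_flux_24gb.yaml"):
    """Copy config/examples/<example_name> to config/<new_name>."""
    toolkit = Path(toolkit_dir)
    source = toolkit / "config" / "examples" / example_name
    target = toolkit / "config" / new_name
    if target.exists():
        # Refuse to overwrite a config you may already have edited.
        raise FileExistsError(f"{target} already exists")
    shutil.copy(source, target)
    return target
```

The original example file stays untouched, so you can always copy it again for a second training run.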

4. Now add the path of your image dataset folder to the config file.

(a) Windows users should edit the newly renamed YAML file. You can use any editor, such as Sublime Text or Notepad++; we are using VS Code. All the details are documented in the file itself.

After opening the file, copy the path of your image dataset folder (right-click the folder and choose the copy path or properties option) and paste it in as is, as shown above.

The path shown is our folder location; yours will be different. Edit the file as in the image above and save it.

(b) Linux users should add the path in the YAML file in the usual way.
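One detail worth knowing on Windows: inside a double-quoted YAML string the backslash is an escape character, so a pasted path such as D:\downloads\images can be misread. Writing the path with forward slashes, as in our “D:/downloads/images” example, avoids the problem. The sketch below converts a copied Windows path to that form; `to_yaml_friendly_path` is a hypothetical helper name.

```python
from pathlib import PureWindowsPath


def to_yaml_friendly_path(windows_path: str) -> str:
    """Rewrite a Windows path with forward slashes for pasting into YAML."""
    return PureWindowsPath(windows_path).as_posix()


if __name__ == "__main__":
    # The raw string keeps the backslashes exactly as copied from Explorer.
    print(to_yaml_friendly_path(r"D:\downloads\images"))
```

`PureWindowsPath` does not touch the file system, so the conversion works the same on any operating system.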

5. Move back to the “ai-toolkit” folder and open a command prompt by typing “cmd” in the folder's address bar.

Activating virtual environment:

.\venv\Scripts\activate

6. Finally, to start training, go back to the command prompt and run your config file with the command below. Here “train_Flux_dev-Lora.yaml” is our file name; yours will be whatever you chose.

For Windows users (replace <<your-file-name>> with your file name):

python run.py config/<<your-file-name>>

In our case this is the command:

python run.py config/train_Flux_dev-Lora.yaml
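A typo in the file name is the most likely reason for this command to fail immediately. If you would rather launch training from a script that checks the config exists first, something like the following works. It is a sketch with hypothetical helper names; it runs the same `python run.py config/<file>` command shown above and should be started from the activated virtual environment so that `sys.executable` points at the right interpreter.

```python
import subprocess
import sys
from pathlib import Path


def build_train_command(config_name: str) -> list[str]:
    """Build the run.py command using the current Python interpreter."""
    return [sys.executable, "run.py", f"config/{config_name}"]


def start_training(toolkit_dir, config_name: str) -> None:
    """Check that the config exists, then run training inside the toolkit folder."""
    toolkit = Path(toolkit_dir)
    config_path = toolkit / "config" / config_name
    if not config_path.is_file():
        raise FileNotFoundError(f"Config not found: {config_path}")
    # check=True raises CalledProcessError if run.py exits with an error.
    subprocess.run(build_train_command(config_name), cwd=toolkit, check=True)
```

In our case the call would be `start_training("ai-toolkit", "train_Flux_dev-Lora.yaml")`.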

Prompt used: a women sitting inside restaurant, hyper realistic , professional photoshoot, 16k

Prompt used: a tiny astronaut hatching from the egg on moon, realistic, 16k

Prompt used: a lava girl hatching from the egg in a dark forest, realistic, 8k