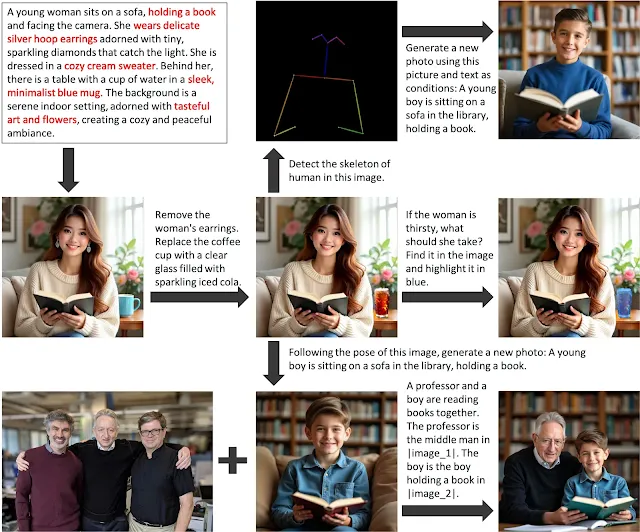

Traditional diffusion workflows rely on separate mechanisms for image modification, such as ControlNet, IP-Adapter, inpainting, face detection, pose estimation, and cropping. OmniGen, released by VectorSpaceLab, bundles these capabilities into a single model.

It accepts arbitrary multi-modal instructions, much like prompting ChatGPT in natural language. Readers who want an in-depth understanding can refer to the research paper. It also supports fine-tuning, which is great news for the community. The authors are working on more optimized models, which are expected to be released through their Hugging Face repository.

Let’s see how to use it in ComfyUI.

Installation

1. Install ComfyUI if not yet installed.

2. Open ComfyUI Manager, select “Custom Nodes Manager”, search for “ComfyUI-Omnigen” by author “1038lab”, and click the Install button.

Alternative:

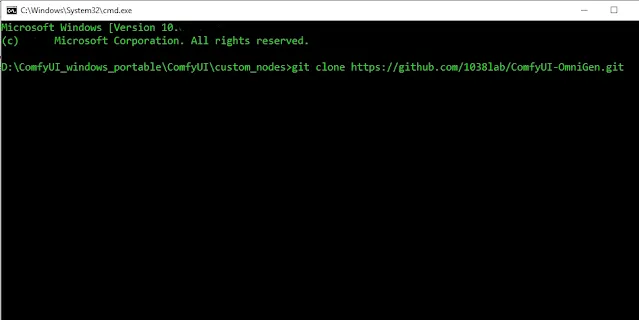

You can also install it manually. Go to your “ComfyUI/custom_nodes” directory.

Open a command prompt by typing “cmd” in the folder's address bar. Then clone the repository using the command below:

git clone https://github.com/1038lab/ComfyUI-OmniGen.git

3. The required model is downloaded automatically in the background the first time you run the basic workflow. You can follow the download progress in the ComfyUI terminal.

Alternative:

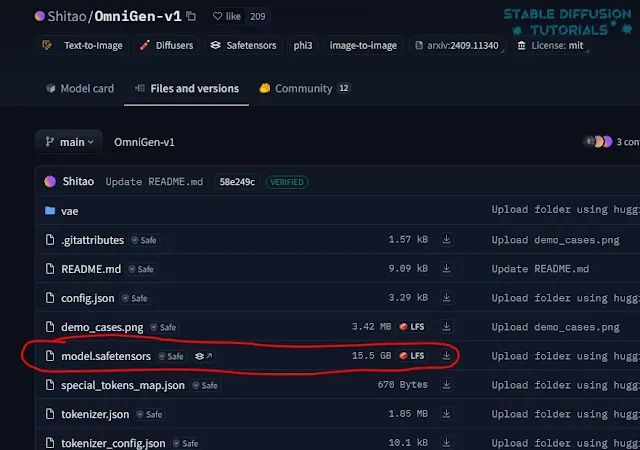

Manually download the model file (model.safetensors, 15.5 GB) from the Hugging Face repository.

After downloading, rename it to something recognizable, such as “omnigen-all-in-one.safetensors”. Then save it inside the “ComfyUI/models/LLM/OmniGen-v1” folder.
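To confirm that the manually downloaded file landed in the right place, a quick check like the one below can help. This is only a sketch: the function name `find_omnigen_model` is hypothetical, and it assumes the node looks for any `.safetensors` file inside the `models/LLM/OmniGen-v1` folder mentioned above.

```python
from pathlib import Path
from typing import Optional


def find_omnigen_model(comfyui_root: str) -> Optional[Path]:
    """Return the first .safetensors file in models/LLM/OmniGen-v1, or None.

    Assumption: the folder layout is the one described in this guide.
    """
    model_dir = Path(comfyui_root) / "models" / "LLM" / "OmniGen-v1"
    if not model_dir.is_dir():
        return None
    # Sort so the result is deterministic if several files are present.
    candidates = sorted(model_dir.glob("*.safetensors"))
    return candidates[0] if candidates else None


if __name__ == "__main__":
    # "ComfyUI" is a placeholder; point it at your own installation folder.
    found = find_omnigen_model("ComfyUI")
    print(found if found else "Model not found; it will be downloaded on first run.")
```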

4. Restart ComfyUI for the changes to take effect.

Workflow

1. The workflows can be found in your “ComfyUI/custom_nodes/ComfyUI-OmniGen/Examples” folder. Drag and drop one into ComfyUI.

2. Before using the workflow, it helps to understand the basics. Input images are referenced in the prompt through placeholders written as HTML-like tags.

They take the form “<img><|image_*|></img>”, where:

<img> = the opening image tag.

<|image_*|> = the placeholder. Here, * (asterisk) stands for the number of the image input, for example <|image_1|>, <|image_2|>, and so on.

</img> = the closing image tag.
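Typing the tags by hand is error-prone, so a small helper can generate them. The sketch below is illustrative only: `image_placeholder` is a hypothetical name, and it assumes numbering starts at 1, as in the examples in this guide.

```python
def image_placeholder(index: int) -> str:
    """Build the tag for the image input with the given number.

    Assumption: image numbers start at 1 (image_1, image_2, ...).
    """
    if index < 1:
        raise ValueError("image index must be 1 or greater")
    return f"<img><|image_{index}|></img>"


if __name__ == "__main__":
    print(image_placeholder(1))
    print(image_placeholder(2))
```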

CFG=2.5

Image guidance=1.6

Inference Steps=50

You can help the model understand your intent by using the right placeholders. For instance, your prompt could look like this:

Example 1 = “A woman holding a bouquet of flowers and wearing a gown faces the camera on a California street. The woman is <img><|image_1|></img>.”

Example 2 = “Two women are enjoying a party in the cafeteria. A woman is <img><|image_1|></img>. Another woman is <img><|image_2|></img>.”
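Before queuing a generation, it can be useful to check that the placeholders in a prompt line up with the images you have connected. The following is a minimal sketch with a hypothetical `check_prompt` helper; it assumes every connected image should be referenced exactly by its number, which is a convention of this sketch rather than a documented requirement.

```python
import re
from typing import List

# Matches tags such as <img><|image_1|></img> and captures the number.
PLACEHOLDER_RE = re.compile(r"<img><\|image_(\d+)\|></img>")


def check_prompt(prompt: str, num_images: int) -> List[str]:
    """Return a list of problems; an empty list means the prompt looks fine."""
    used = {int(n) for n in PLACEHOLDER_RE.findall(prompt)}
    expected = set(range(1, num_images + 1))
    problems = []
    for i in sorted(used - expected):
        problems.append(f"placeholder image_{i} has no matching input image")
    for i in sorted(expected - used):
        problems.append(f"input image {i} is never referenced in the prompt")
    return problems


if __name__ == "__main__":
    prompt = (
        "Two women are enjoying a party in the cafeteria. "
        "A woman is <img><|image_1|></img>. "
        "Another woman is <img><|image_2|></img>."
    )
    print(check_prompt(prompt, num_images=2))
```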

Recommended tips

1. Keep the output width and height the same as the input image's when doing image editing and ControlNet processing.

2. Reduce the CFG value if the generated image looks oversaturated. The default value is 2.5; staying close to it gives better results.

3. Detailed prompts that closely describe what you want to generate will yield better results.

4. For image editing, place the image placeholder before the editing instruction. For example, to remove an object such as a cup from the image, use the prompt structure “<img><|image_1|></img> remove cup” and not “remove cup <img><|image_1|></img>”.
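Tips 1 and 4 are easy to automate. The sketch below uses two hypothetical helpers, `build_edit_prompt` and `same_dimensions`, to put the image placeholder first and to compare input and output sizes; it only illustrates the tips and is not part of the ComfyUI-OmniGen node.

```python
from typing import Tuple


def build_edit_prompt(instruction: str, image_index: int = 1) -> str:
    """Place the image placeholder before the editing instruction (tip 4)."""
    return f"<img><|image_{image_index}|></img> {instruction.strip()}"


def same_dimensions(input_size: Tuple[int, int], output_size: Tuple[int, int]) -> bool:
    """Check that (width, height) of input and output match (tip 1)."""
    return tuple(input_size) == tuple(output_size)


if __name__ == "__main__":
    print(build_edit_prompt("remove cup"))
    # Sizes here are example values, not recommendations.
    print(same_dimensions((1024, 768), (1024, 768)))
```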