After a long wait, the ControlNet models for Stable Diffusion XL have been released to the community.

This is the officially supported and recommended extension for the Stable Diffusion WebUI, maintained by the original developer of ControlNet.

The current ControlNet release, 1.1.400, supports Automatic1111 version 1.6.0 and later, so update Automatic1111 first if you have not done so already.
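As a rough illustration of the version requirement, the sketch below compares a WebUI version string against 1.6.0. The function names are hypothetical, and the sketch assumes you copy the version string by hand (for example from the footer of the WebUI page); it does not query Automatic1111 itself.

```python
MIN_WEBUI = (1, 6, 0)  # minimum Automatic1111 version named in this guide


def parse_version(text: str) -> tuple:
    """Turn a string such as 'v1.6.0' or '1.6.0-RC' into a tuple of ints."""
    core = text.strip().lstrip("vV").split("-")[0]
    return tuple(int(part) for part in core.split("."))


def webui_is_new_enough(version_text: str) -> bool:
    """Return True when the given version is 1.6.0 or later."""
    return parse_version(version_text) >= MIN_WEBUI


print(webui_is_new_enough("v1.5.2"))  # False
print(webui_is_new_enough("v1.6.0"))  # True
```

Comparing tuples of integers rather than raw strings avoids mistakes such as treating "1.10.0" as older than "1.6.0".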

Features included:

- Support for SDXL1.0

- Simple plug and play (no manual configuration needed)

- Training can be done

- Multiple ControlNets can be used together

- Multiple options like T2I Adapter, Composer, Control LoRA etc.

- Guess mode/Non-Prompt mode

Steps to Install in Automatic1111/Forge:

1. First, download and install the Automatic1111 WebUI if you have not already, and update it to the latest version.

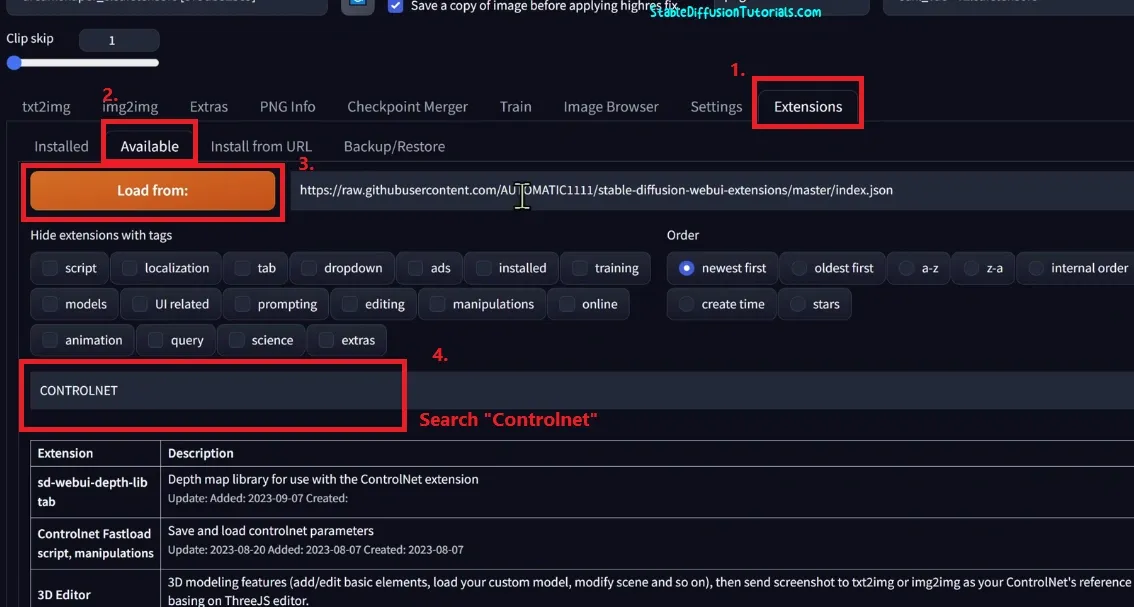

2. Open the Automatic1111 WebUI, go to the “Extensions” tab, open its “Available” sub-tab, and click the “Load from” button.

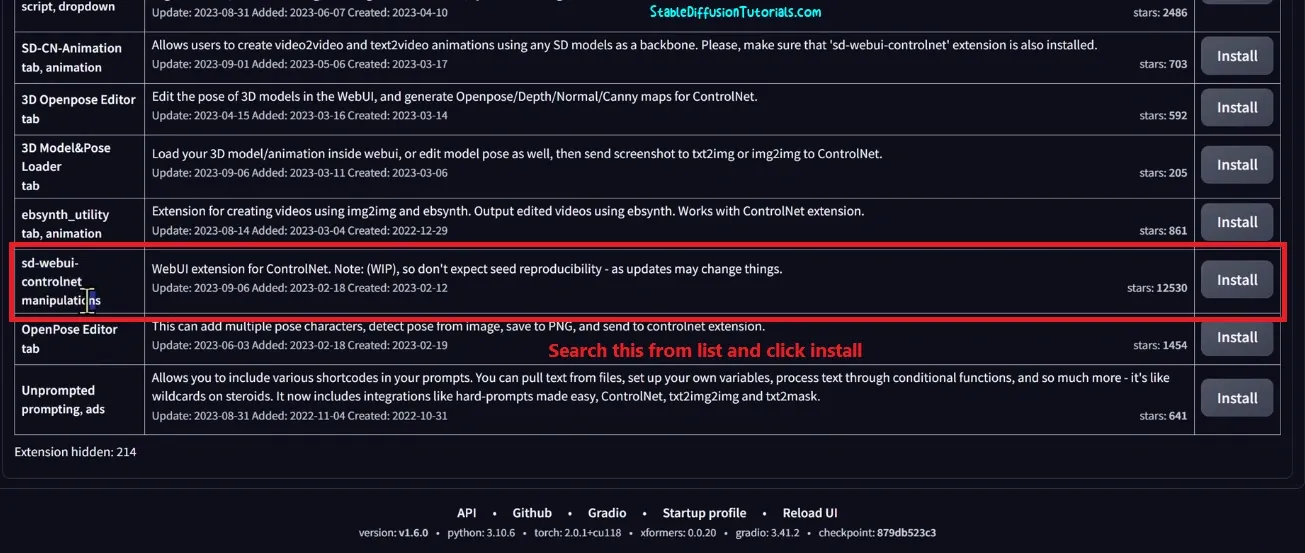

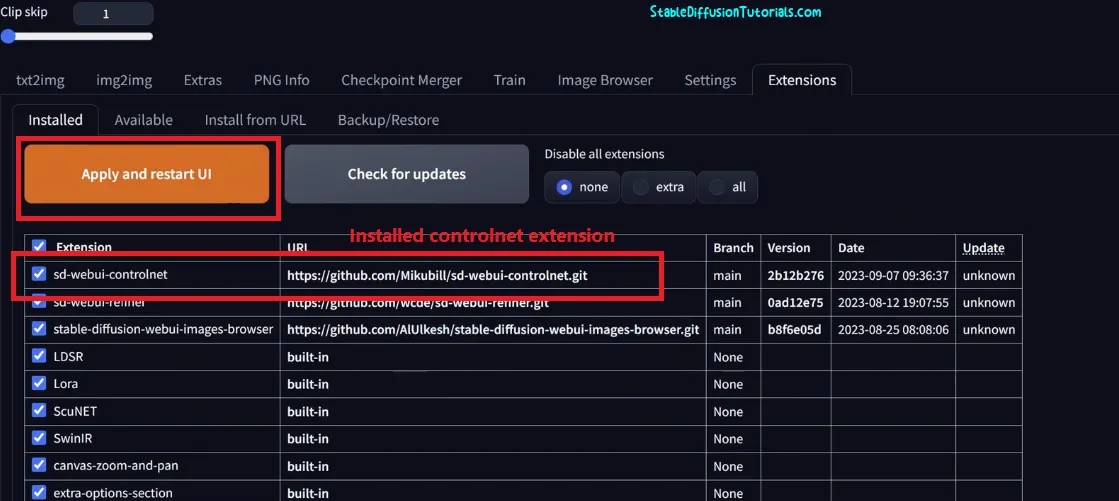

3. Search for “ControlNet” in the search bar and click the “Install” button. If you already have ControlNet installed, it needs to be updated before you use it with Stable Diffusion XL: click “Check for updates” to bring ControlNet up to date.

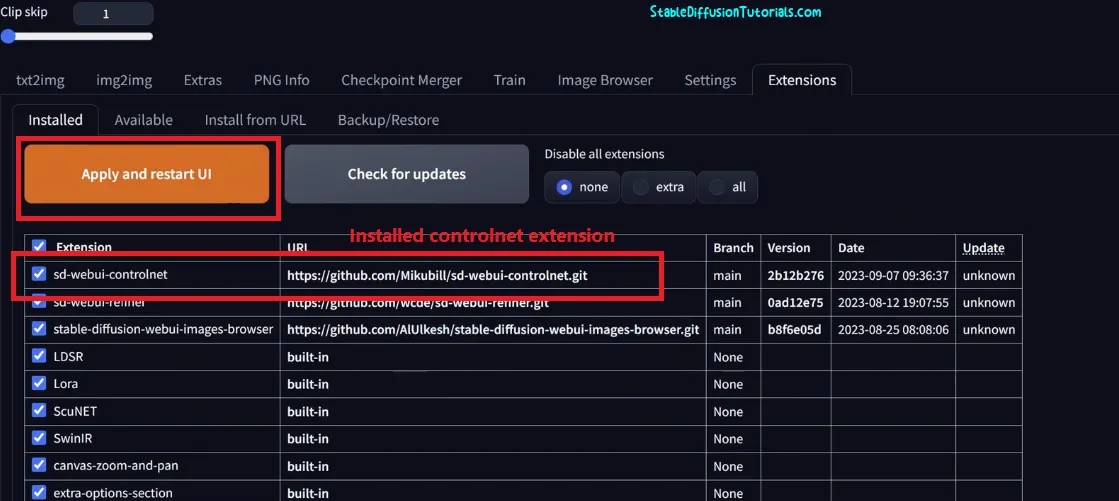

4. After installation, the extension appears in the extension list. Restart the Automatic1111 WebUI by clicking the “Apply and Restart UI” button for the change to take effect.

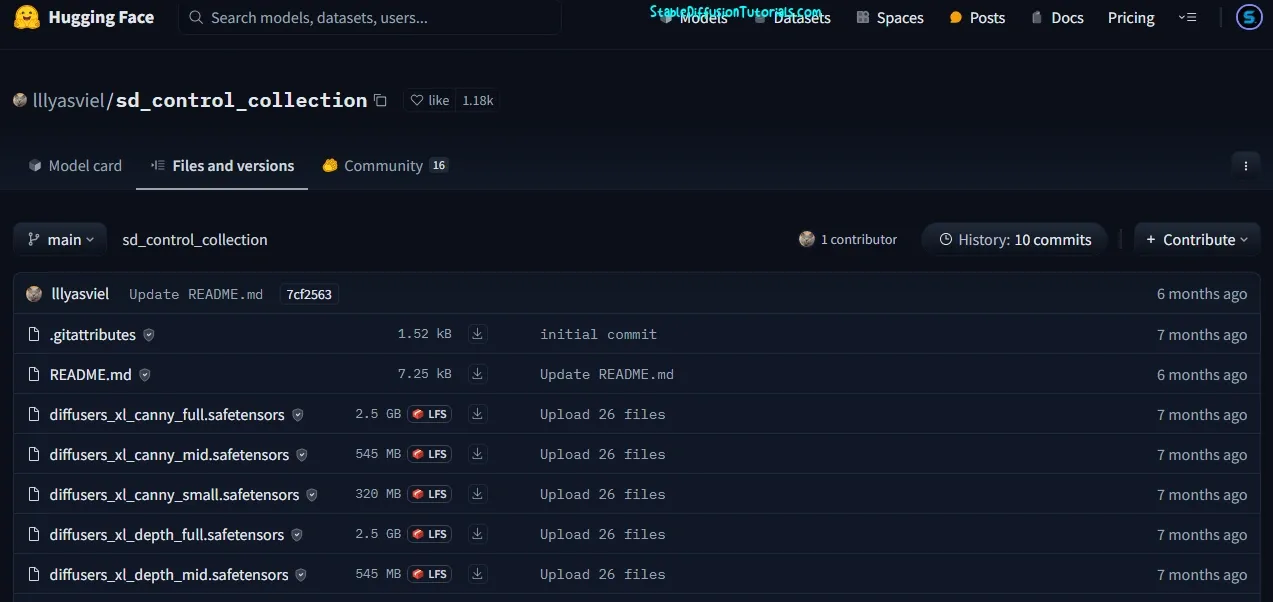

5. Next, you need the additional ControlNet models made specifically for Stable Diffusion XL (SDXL). They are hosted in a Hugging Face repository, and you can choose which ones to download.

6. To download the models, go to the official Hugging Face repository (link below).

https://huggingface.co/lllyasviel/sd_control_collection/tree/main

The updated model list is given below (download only the ones you need):

- diffusers_xl_canny_full.safetensors

- diffusers_xl_canny_mid.safetensors

- diffusers_xl_canny_small.safetensors

- diffusers_xl_depth_full.safetensors

- diffusers_xl_depth_mid.safetensors

- diffusers_xl_depth_small.safetensors

- ioclab_sd15_recolor.safetensors

- ip-adapter_sd15.pth

- ip-adapter_sd15_plus.pth

- ip-adapter_xl.pth

- kohya_controllllite_xl_depth_anime.safetensors

- kohya_controllllite_xl_canny_anime.safetensors

- kohya_controllllite_xl_scribble_anime.safetensors

- kohya_controllllite_xl_openpose_anime.safetensors

- kohya_controllllite_xl_openpose_anime_v2.safetensors

- kohya_controllllite_xl_blur_anime_beta.safetensors

- kohya_controllllite_xl_blur_anime.safetensors

- kohya_controllllite_xl_blur.safetensors

- kohya_controllllite_xl_canny.safetensors

- kohya_controllllite_xl_depth.safetensors

- sai_xl_canny_128lora.safetensors

- sai_xl_canny_256lora.safetensors

- sai_xl_depth_128lora.safetensors

- sai_xl_depth_256lora.safetensors

- sai_xl_recolor_128lora.safetensors

- sai_xl_recolor_256lora.safetensors

- sai_xl_sketch_128lora.safetensors

- sai_xl_sketch_256lora.safetensors

- sargezt_xl_depth.safetensors

- sargezt_xl_depth_faid_vidit.safetensors

- sargezt_xl_depth_zeed.safetensors

- sargezt_xl_softedge.safetensors

- t2i-adapter_xl_canny.safetensors

- t2i-adapter_xl_openpose.safetensors

- t2i-adapter_xl_sketch.safetensors

- t2i-adapter_diffusers_xl_canny.safetensors

- t2i-adapter_diffusers_xl_depth_midas.safetensors

- t2i-adapter_diffusers_xl_depth_zoe.safetensors

- t2i-adapter_diffusers_xl_lineart.safetensors

- t2i-adapter_diffusers_xl_openpose.safetensors

- t2i-adapter_diffusers_xl_sketch.safetensors

- thibaud_xl_openpose.safetensors

- thibaud_xl_openpose_256lora.safetensors

Multiple models are available here, and which ones you need depends on your use case. Different ControlNet model options such as canny, openpose, kohya, T2I Adapter, Softedge, and Sketch are available for different workflows.

For example, if you want to work with canny, select only the models with the keyword “canny” in the name, or if you want the lightweight ControlLLLite models by kohya, select the models with “kohya” in the name. If you want everything, you can download all of the models. It's as simple as that. In this guide, we download all the models one by one.

7. After downloading, save the files into your “stable-diffusion-webui\extensions\sd-webui-controlnet\models” folder or your “stable-diffusion-webui\models\ControlNet” folder.

Now, go to the “Installed” tab and restart Automatic1111 by clicking “Apply and Restart UI” for the changes to take effect.

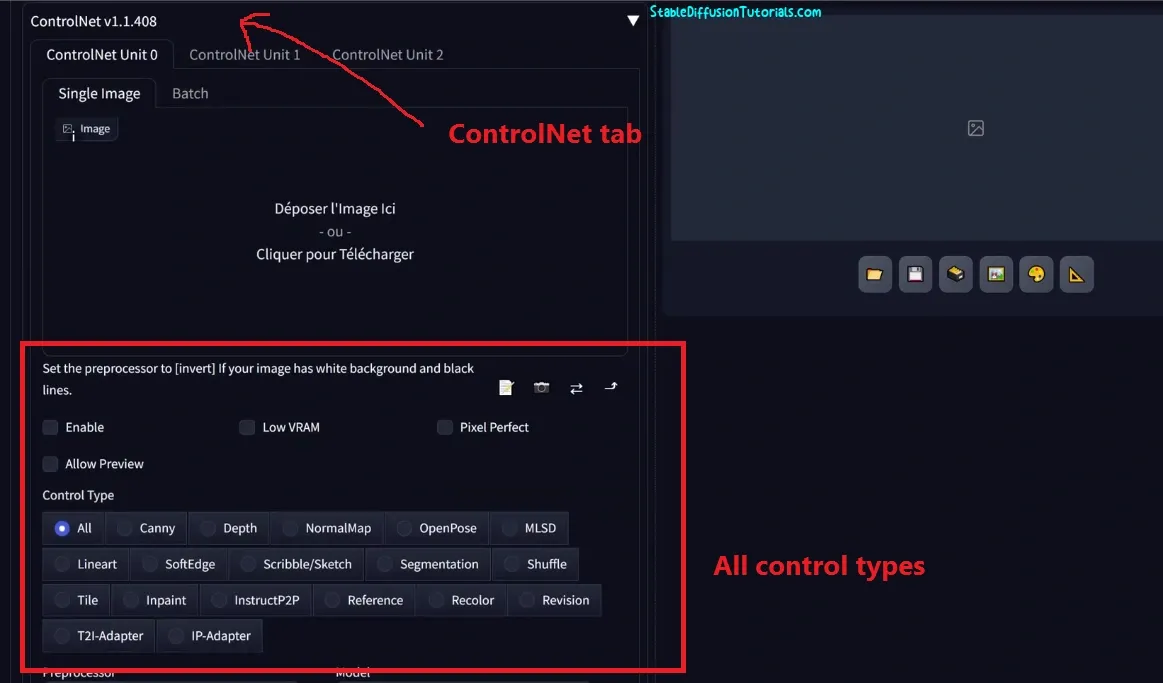
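The download and placement steps can be sketched in a few lines of Python. This is only an illustration, not an official tool: the helper names are hypothetical, the `MODELS` list is a small excerpt of the file names above, the install location `stable-diffusion-webui` is an assumption, and the URLs rely on Hugging Face's usual `/resolve/main/<file>` pattern for direct file links. The sketch only prints where each file would come from and go to; it does not download anything.

```python
from pathlib import Path

REPO_URL = "https://huggingface.co/lllyasviel/sd_control_collection"

# A few file names copied from the model list above (excerpt only).
MODELS = [
    "diffusers_xl_canny_full.safetensors",
    "diffusers_xl_depth_full.safetensors",
    "kohya_controllllite_xl_canny.safetensors",
    "sai_xl_canny_256lora.safetensors",
    "t2i-adapter_xl_openpose.safetensors",
    "thibaud_xl_openpose.safetensors",
]


def pick_models(keyword: str) -> list:
    """Return the model file names that contain the keyword."""
    return [name for name in MODELS if keyword.lower() in name.lower()]


def download_url(filename: str) -> str:
    """Build a direct download link for one file on the main branch."""
    return f"{REPO_URL}/resolve/main/{filename}"


def target_path(webui_root: Path, filename: str) -> Path:
    """Location of the file inside the WebUI's models/ControlNet folder."""
    return webui_root / "models" / "ControlNet" / filename


webui_root = Path("stable-diffusion-webui")  # assumed install location
for name in pick_models("canny"):
    print(download_url(name), "->", target_path(webui_root, name))
```

Changing the keyword to "openpose" or "depth" selects the corresponding files in the same way.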

After installation, a new ControlNet section appears in the Automatic1111 WebUI with all its features. If you don’t know how to work with ControlNet, we have already made a tutorial on it that you should definitely check out.

If you are getting an Xformers error, or you haven’t installed or upgraded Xformers yet, you should do so for better inference speed.
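One quick way to see whether Xformers is present is to ask Python whether the package can be found. The sketch below uses only the standard library; the helper name is hypothetical, and it assumes you run it with the same Python environment (virtual environment) that the WebUI uses, otherwise the answer may not match.

```python
import importlib.util


def is_installed(package: str) -> bool:
    """Return True if the named top-level package can be found."""
    return importlib.util.find_spec(package) is not None


if is_installed("xformers"):
    print("xformers is available in this environment")
else:
    print("xformers was not found in this environment")
```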

Settings for VRAM optimization:

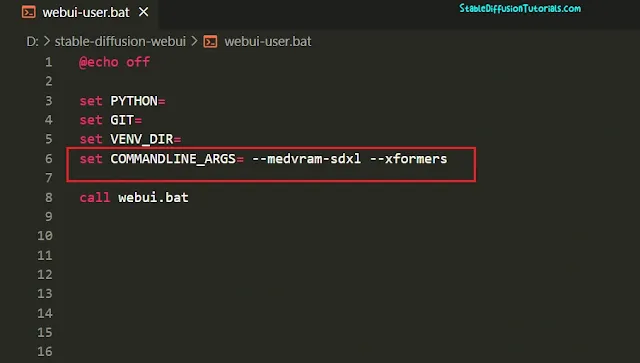

To get the best use of your VRAM, follow the setup below in your bat file. This makes the WebUI run with a configuration suited to your hardware. For this optimization, make sure you also have Xformers installed.

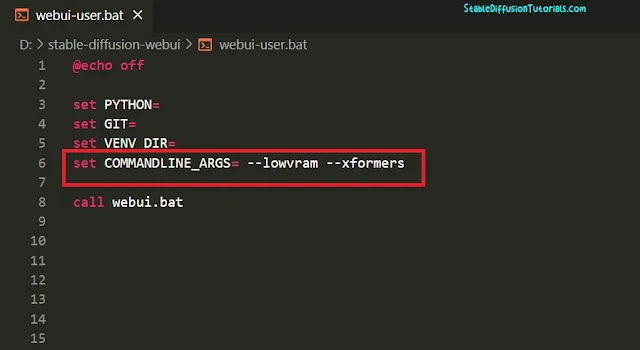

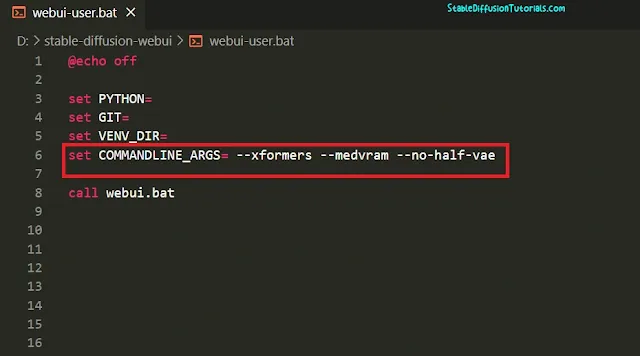

1. If you have low VRAM (8-16GB), it is recommended to add the “--medvram-sdxl” argument to the “webui-user.bat” file in the “stable-diffusion-webui” folder, using any text editor (Notepad or Notepad++), as shown in the image above.

This flag applies the medium-VRAM optimization only when an SDXL checkpoint is loaded, which lowers VRAM usage.

2. If you have less than 8GB of VRAM (4-8GB), it is recommended to add the “--lowvram” argument to “webui-user.bat”, as shown in the image above.

3. If you still don’t see any performance improvement, adding the “--no-half-vae” flag can also be a good option. An example is shown in the image above.

One community member was using an RTX 3080 Ti 12GB with “--medvram-sdxl --xformers --no-half --autolaunch” as arguments and was getting a generation time of 32 minutes with the “Canny” feature.

After adding “--no-half-vae” to the arguments, the generation time dropped drastically.

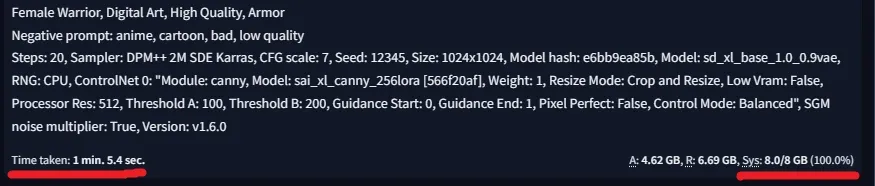

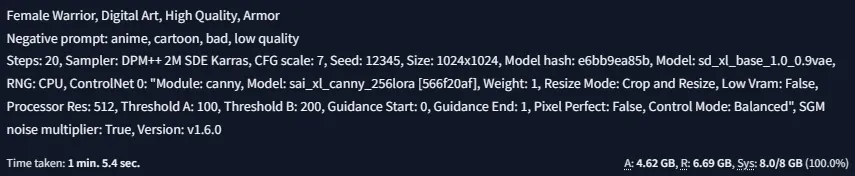
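The VRAM recommendations above can be summarised as a small decision rule. The sketch below is only an illustration of that rule: the function names are hypothetical, and the exact 8GB and 16GB cut-offs are an assumption about how to read the ranges given in this guide. It builds the `set COMMANDLINE_ARGS=` line that goes into “webui-user.bat”.

```python
def suggest_flags(vram_gb: float, no_half_vae: bool = False) -> list:
    """Pick launch flags following the VRAM ranges given in this guide."""
    flags = ["--xformers"]  # the guide recommends Xformers in every case
    if vram_gb < 8:
        flags.append("--lowvram")       # less than 8GB of VRAM
    elif vram_gb <= 16:
        flags.append("--medvram-sdxl")  # 8-16GB of VRAM
    if no_half_vae:
        flags.append("--no-half-vae")   # optional, if generation is still slow
    return flags


def commandline_args_line(flags: list) -> str:
    """Format the flags as the COMMANDLINE_ARGS line of webui-user.bat."""
    return "set COMMANDLINE_ARGS=" + " ".join(flags)


print(commandline_args_line(suggest_flags(12)))
# set COMMANDLINE_ARGS=--xformers --medvram-sdxl
```

Treat the result as a starting point and adjust it against your own generation times.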

Optimized Results as Example:

Below are examples of optimized settings with faster generation on different machines. All the results are from the official ControlNet page:

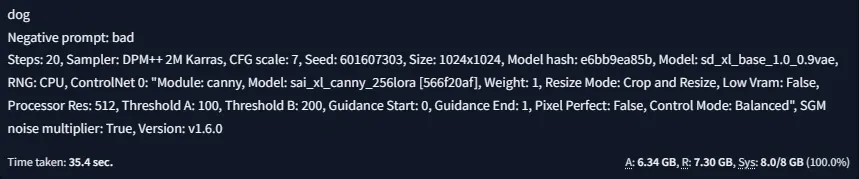

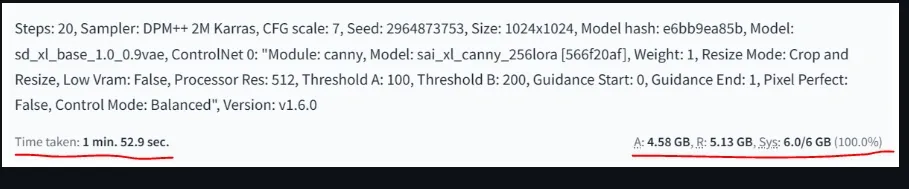

1. RTX 3070ti laptop GPU

--lowvram --xformers

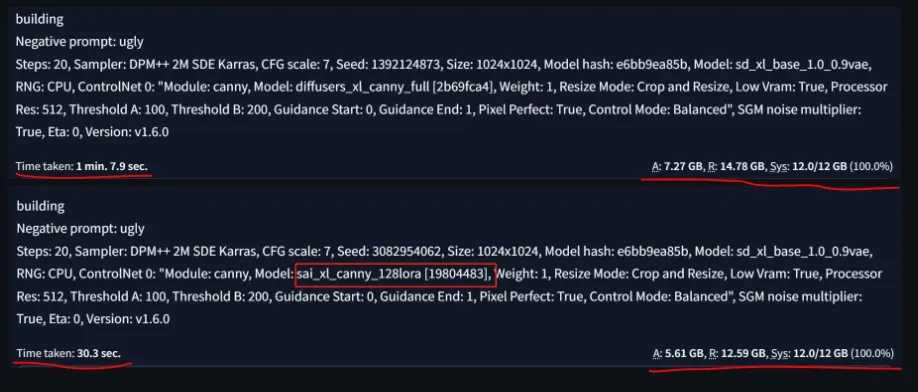

2. RTX 3070ti laptop GPU

--lowvram --xformers

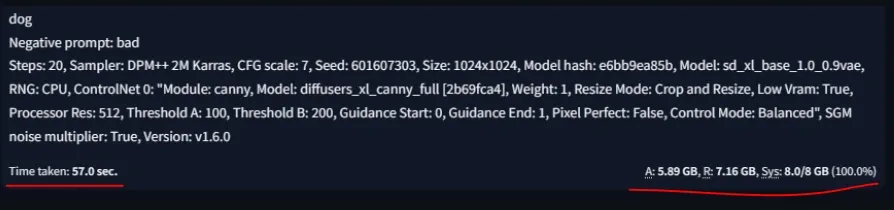

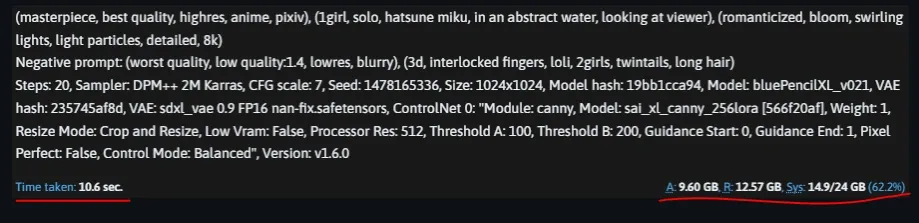

3. RTX 3070ti laptop GPU

--medvram-sdxl --xformers

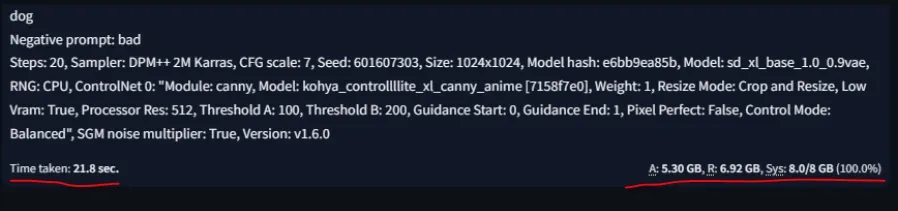

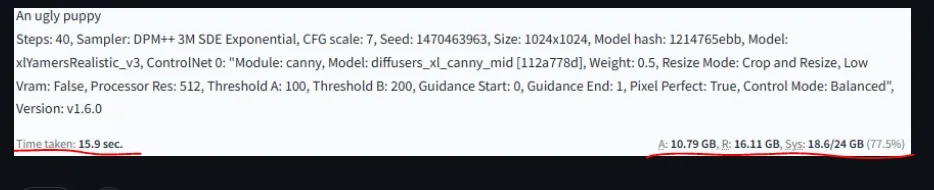

4. RTX 3070ti laptop GPU

--medvram-sdxl --xformers

5. RTX 3070ti laptop GPU

--medvram-sdxl --xformers

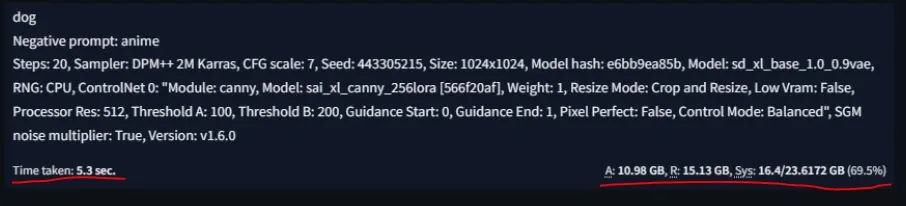

6. RTX 4090

--xformers

7. 3060 laptop GPU

--lowvram --xformers

8. RTX 3080 12GB

--xformers --medvram-sdxl

9. RTX 3090 24GB

--xformers

10. RTX 3090 24GB

--xformers

Conclusion:

Whenever you want to modify images or generate video in a style similar to a reference, ControlNet has proved to be the most powerful tool.

Now, with ControlNet for Stable Diffusion XL, the output becomes more realistic compared to the older Stable Diffusion 1.5.