Whether you are a social media creator or an enterprise, the workflow in this guide can save you a great deal of time and money when making your own advertisement videos, with highly consistent image morphing from frame to frame.

MimicMotion, developed by Tencent, is a high-quality video generation framework based on motion guidance that addresses this consistency problem. Other diffusion-based video generation models exist, such as AnimateDiff and Animate Anyone, but they struggle to achieve the same level of frame-to-frame consistency.

The model uses confidence-aware pose guidance to generate smoother, more natural motion. This also partly fixes the familiar glitch of weirdly deformed hands. In addition, it uses a progressive latent fusion technique to generate longer videos while keeping GPU resource usage manageable.
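To build some intuition for how a longer video can be stitched together from short segments, here is a deliberately simplified overlap-and-blend sketch in Python. It is not the paper's progressive latent fusion, which fuses overlapping latents during the denoising process itself; here each frame's latent is reduced to a single float, and the window size, overlap, and triangular weighting are illustrative assumptions. The helper names `window_starts` and `fuse_windows` are hypothetical.

```python
from typing import List


def window_starts(total: int, window: int, overlap: int) -> List[int]:
    """Start indices of overlapping windows that together cover `total` frames."""
    if not 0 <= overlap < window <= total:
        raise ValueError("need 0 <= overlap < window <= total")
    stride = window - overlap
    starts = list(range(0, total - window + 1, stride))
    # Add a final window aligned to the end if the stride skipped the last frames.
    if starts[-1] + window < total:
        starts.append(total - window)
    return starts


def fuse_windows(segments: List[List[float]], starts: List[int], total: int) -> List[float]:
    """Blend per-window frame values into a single sequence of `total` frames.

    Each frame in a window is weighted by its distance to the nearer window edge
    (a triangular ramp), so frames shared by two windows cross-fade smoothly.
    The fused value of a frame is the weighted average over every window covering it.
    """
    acc = [0.0] * total
    weight_sum = [0.0] * total
    for seg, start in zip(segments, starts):
        n = len(seg)
        for i, value in enumerate(seg):
            w = min(i, n - 1 - i) + 1  # +1 keeps edge frames from getting zero weight
            acc[start + i] += w * value
            weight_sum[start + i] += w
    return [a / s for a, s in zip(acc, weight_sum)]
```

For example, `window_starts(40, 16, 4)` returns `[0, 12, 24]`. If the first 16-frame window holds all 0.0 values and the next one all 1.0, the four shared frames (12 to 15) ramp through 0.2, 0.4, 0.6, and 0.8 instead of jumping, which is the kind of seam-free transition the fusion step aims for.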

Sounds cool, right?

You can find in-depth details about the model in its research paper. It can now be easily integrated into and used in ComfyUI. Let’s see how to do this.

Installation:

1. First, install ComfyUI and get familiar with its basics.

If you already have ComfyUI installed, update it: open ComfyUI Manager, click “Update ComfyUI”, and then click “Refresh” to clear the browser cache.

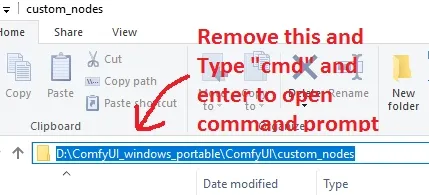

2. Next, clone the repository. Navigate to the “ComfyUI/custom_nodes” folder and open a command prompt there by typing “cmd” in the File Explorer address bar and pressing Enter.

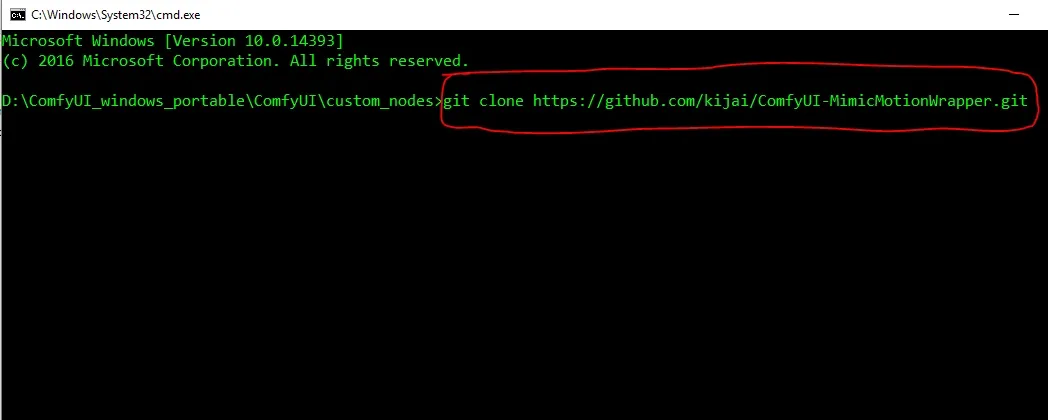

Run the following command to clone it:

```
git clone https://github.com/kijai/ComfyUI-MimicMotionWrapper.git
```

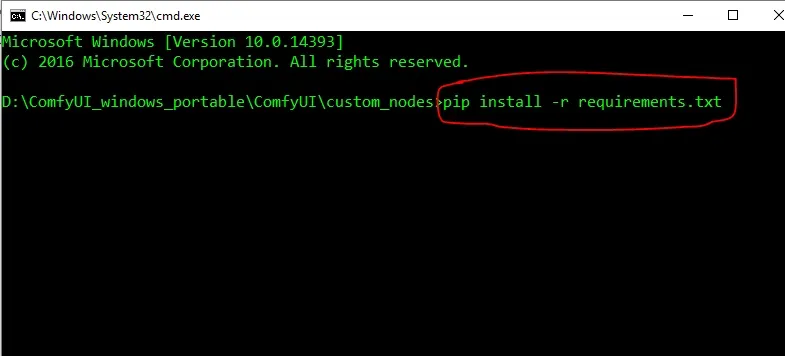

Then move into the cloned folder with `cd ComfyUI-MimicMotionWrapper` and paste the command below into the same command prompt to install the required dependencies:

```
pip install -r requirements.txt
```

Alternative:

For the ComfyUI portable version, run this command instead from the portable installation’s root folder (the one that contains python_embeded):

```
python_embeded\python.exe -m pip install -r ComfyUI\custom_nodes\ComfyUI-MimicMotionWrapper\requirements.txt
```

This installs all the dependencies listed in the wrapper’s requirements.txt.

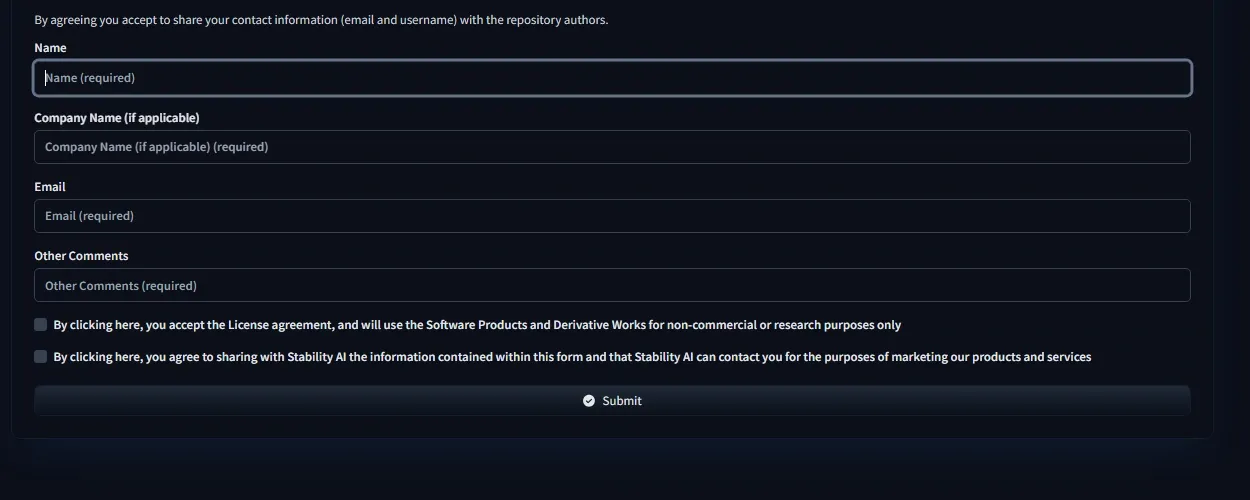

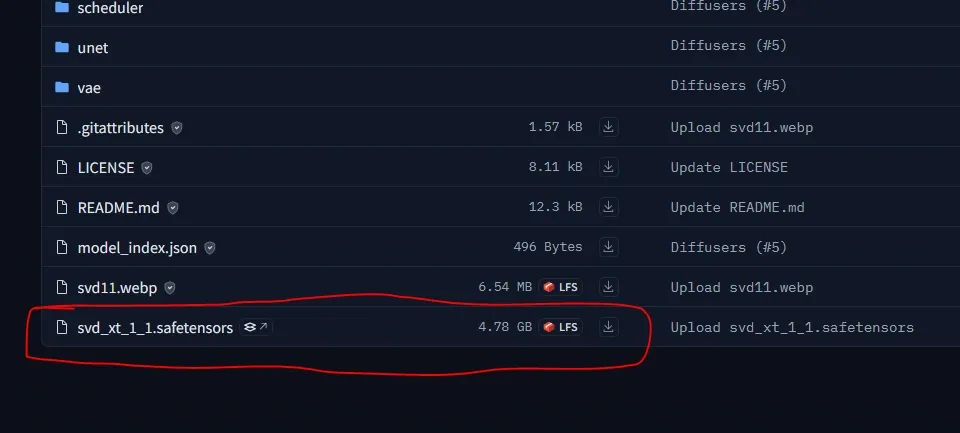
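If you prefer to script step 2, the sketch below builds the same clone and pip commands and runs them with Python’s `subprocess` module. Calling pip as `<python> -m pip` with the interpreter that runs ComfyUI keeps the dependencies in ComfyUI’s own environment, which is why the portable variant calls python_embeded\python.exe directly. The function names `install_commands` and `run_install` are hypothetical helpers, not part of ComfyUI or the wrapper.

```python
import subprocess
import sys
from pathlib import Path
from typing import List

REPO_URL = "https://github.com/kijai/ComfyUI-MimicMotionWrapper.git"


def install_commands(comfy_dir: Path, python_exe: str) -> List[List[str]]:
    """Return the clone and pip-install commands from step 2 as argument lists."""
    wrapper_dir = comfy_dir / "custom_nodes" / "ComfyUI-MimicMotionWrapper"
    return [
        # Clone straight into ComfyUI/custom_nodes/ComfyUI-MimicMotionWrapper.
        ["git", "clone", REPO_URL, str(wrapper_dir)],
        # Install the wrapper's requirements with the given interpreter's pip.
        [python_exe, "-m", "pip", "install", "-r", str(wrapper_dir / "requirements.txt")],
    ]


def run_install(comfy_dir: Path, python_exe: str = sys.executable) -> None:
    """Run each command in order, stopping at the first failure."""
    for cmd in install_commands(comfy_dir, python_exe):
        print("Running:", " ".join(cmd))
        subprocess.run(cmd, check=True)  # raises CalledProcessError on a non-zero exit
```

For the portable build you might call `run_install(Path(r"C:\ComfyUI_windows_portable\ComfyUI"), r"C:\ComfyUI_windows_portable\python_embeded\python.exe")`; both paths are example locations, so adjust them to match your installation.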

3. MimicMotion requires the fp16 (float16) version of SVD XT 1.1, which is around 4 GB. Download this Stable Video Diffusion (SVD) image-to-video model from StabilityAI’s Hugging Face repository and save it into the “ComfyUI/models/diffusers” folder. To download it, log in to Hugging Face and accept the model’s terms and conditions.

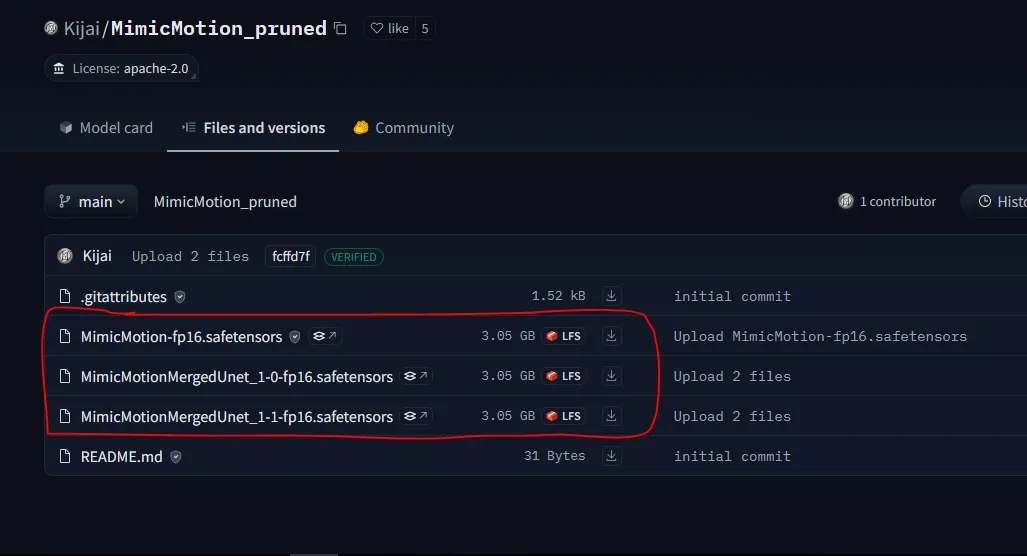
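The download can also be scripted with the `huggingface_hub` library’s `snapshot_download` function. This is a sketch under several assumptions: that the gated repository id is `stabilityai/stable-video-diffusion-img2vid-xt-1-1`, that its JSON configs plus the `*.fp16.safetensors` files make up the roughly 4 GB fp16 version the wrapper expects, and that a subfolder named after the repository inside “ComfyUI/models/diffusers” is where the wrapper looks. You still have to accept the terms on the model page first and authenticate, either with `huggingface-cli login` or by passing a token. `svd_target_dir` and `download_svd` are hypothetical helpers.

```python
from pathlib import Path
from typing import Optional

# Assumption: repository id of SVD XT 1.1 on Hugging Face (gated, terms must be accepted).
SVD_REPO_ID = "stabilityai/stable-video-diffusion-img2vid-xt-1-1"


def svd_target_dir(comfy_dir: Path) -> Path:
    """Destination under ComfyUI/models/diffusers (subfolder name is an assumption)."""
    return comfy_dir / "models" / "diffusers" / SVD_REPO_ID.split("/")[-1]


def download_svd(comfy_dir: Path, token: Optional[str] = None) -> Path:
    """Download the assumed fp16 files of SVD XT 1.1 into the ComfyUI models folder."""
    # Imported lazily so the path helper above works without huggingface_hub installed.
    from huggingface_hub import snapshot_download

    target = svd_target_dir(comfy_dir)
    snapshot_download(
        repo_id=SVD_REPO_ID,
        local_dir=target,
        token=token,  # None falls back to the token saved by `huggingface-cli login`
        # Assumption: configs plus fp16 weights are all that is needed.
        allow_patterns=["*.json", "*.fp16.safetensors"],
    )
    return target
```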

4. Next, download a MimicMotion model from the Hugging Face repository. You only need one of the available files; the developer periodically uploads slightly tweaked versions of the same MimicMotion model. For instance, the first file is the basic fp16 version, the second is the fp16 UNet version, and the third is again a UNet version, merged in fp16 and labeled 1.1.

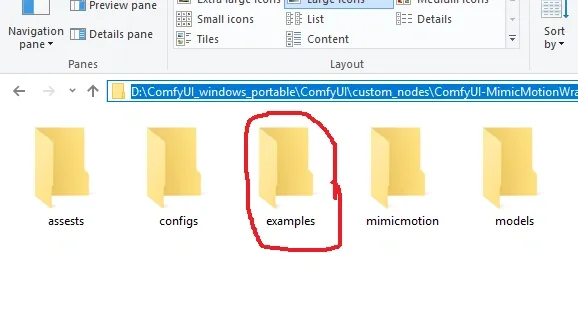

5. Finally, load the MimicMotion workflow. It is already included in the “ComfyUI/custom_nodes/ComfyUI-MimicMotionWrapper/examples” folder; alternatively, you can download it from the GitHub repository.
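Example workflows are stored as JSON files. If ComfyUI reports missing nodes after you load one, it can help to list which node types the workflow actually uses. The sketch below assumes the files use ComfyUI’s UI export format, in which a top-level `nodes` list holds objects with a `type` field; `node_types` and `list_example_workflows` are hypothetical helpers.

```python
import json
from pathlib import Path
from typing import Dict, List


def node_types(workflow: Dict) -> List[str]:
    """Sorted, de-duplicated node types used by a UI-format ComfyUI workflow."""
    return sorted({node["type"] for node in workflow.get("nodes", [])})


def list_example_workflows(comfy_dir: Path) -> Dict[str, List[str]]:
    """Map each example workflow file name to the node types it uses."""
    examples = comfy_dir / "custom_nodes" / "ComfyUI-MimicMotionWrapper" / "examples"
    result: Dict[str, List[str]] = {}
    for path in sorted(examples.glob("*.json")):
        with path.open(encoding="utf-8") as f:
            result[path.name] = node_types(json.load(f))
    return result
```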

Important points to consider:

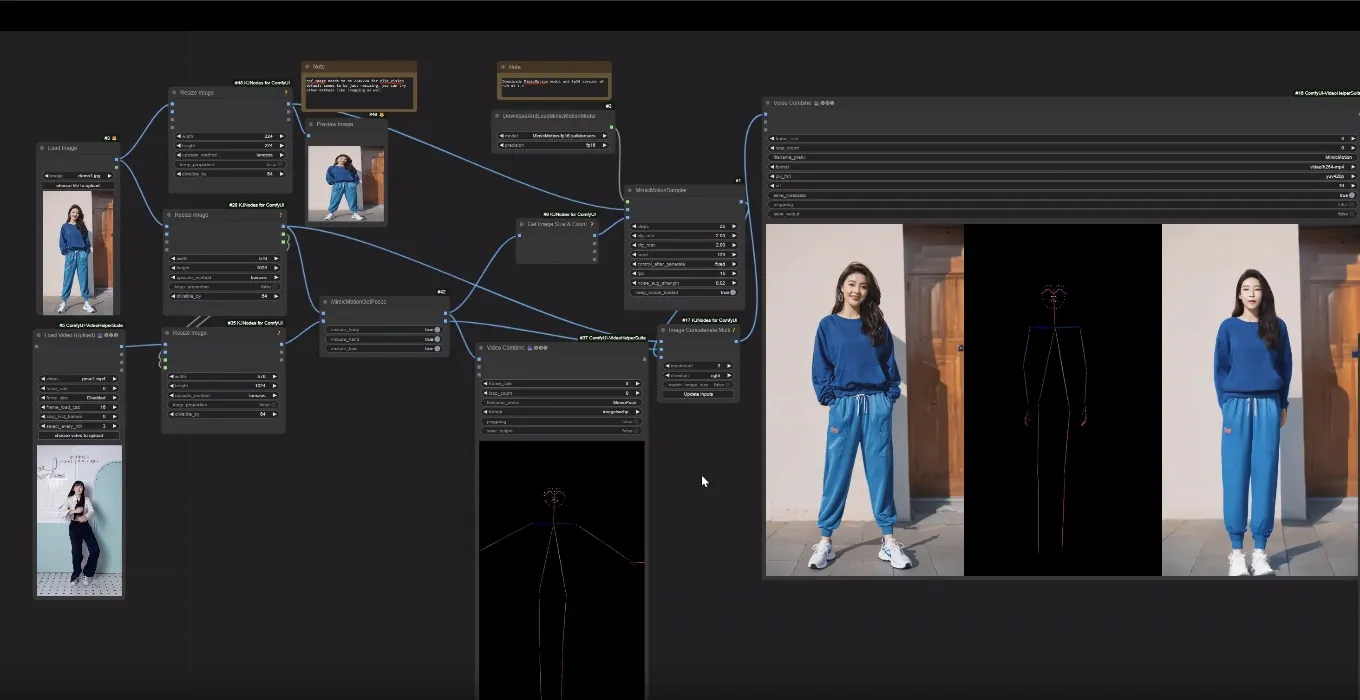

Source: GitHub repository

Always use the same dimensions as the reference image; otherwise, the DWPose poses will come out deformed. Currently, MimicMotion renders videos with a maximum of 16 frames at 576×1024 resolution (or 1024×576), because it uses Stable Video Diffusion as its base framework.
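The sketch below encodes this resolution note: it picks 576×1024 (portrait) or 1024×576 (landscape) to match the reference image’s orientation and measures how far the reference’s aspect ratio is from the chosen output size. Treating square images as portrait, and whatever tolerance you compare the mismatch against, are assumptions; `output_size` and `aspect_mismatch` are hypothetical helper names.

```python
from typing import Tuple

# The two supported sizes from the note above, as (width, height).
PORTRAIT = (576, 1024)
LANDSCAPE = (1024, 576)


def output_size(ref_w: int, ref_h: int) -> Tuple[int, int]:
    """Pick the supported size whose orientation matches the reference image."""
    return PORTRAIT if ref_h >= ref_w else LANDSCAPE  # square counts as portrait


def aspect_mismatch(ref: Tuple[int, int], out: Tuple[int, int]) -> float:
    """Relative difference between two width/height ratios (0.0 means identical)."""
    ref_ratio = ref[0] / ref[1]
    out_ratio = out[0] / out[1]
    return abs(ref_ratio - out_ratio) / out_ratio
```

For example, a 720×1280 reference maps to 576×1024 with a mismatch of 0.0, while a 1000×1000 reference gives a large mismatch and would need cropping or padding to 9:16 before use.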

If you run into an out-of-memory error, reduce the number of frames to generate.
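If you drive generation from a script rather than the ComfyUI interface, this advice can be automated by retrying with fewer frames. In this sketch, `generate` is a hypothetical callable that renders the given number of frames, and halving the count on each retry is an arbitrary policy. With PyTorch you would pass `oom_error=torch.cuda.OutOfMemoryError` (available in recent PyTorch releases) instead of the built-in `MemoryError` default.

```python
from typing import Callable, Tuple, Type, TypeVar

T = TypeVar("T")


def generate_with_backoff(
    generate: Callable[[int], T],
    num_frames: int,
    min_frames: int = 4,
    oom_error: Type[BaseException] = MemoryError,
) -> Tuple[T, int]:
    """Call generate(frames), halving the frame count after each out-of-memory error.

    Returns the result together with the frame count that finally succeeded.
    """
    frames = num_frames
    while True:
        try:
            return generate(frames), frames
        except oom_error:
            if frames <= min_frames:
                raise  # cannot shrink any further, so surface the original error
            frames = max(min_frames, frames // 2)
```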

Conclusion:

MimicMotion makes consistent frame generation much simpler, which makes it a practical problem solver for AI creators.